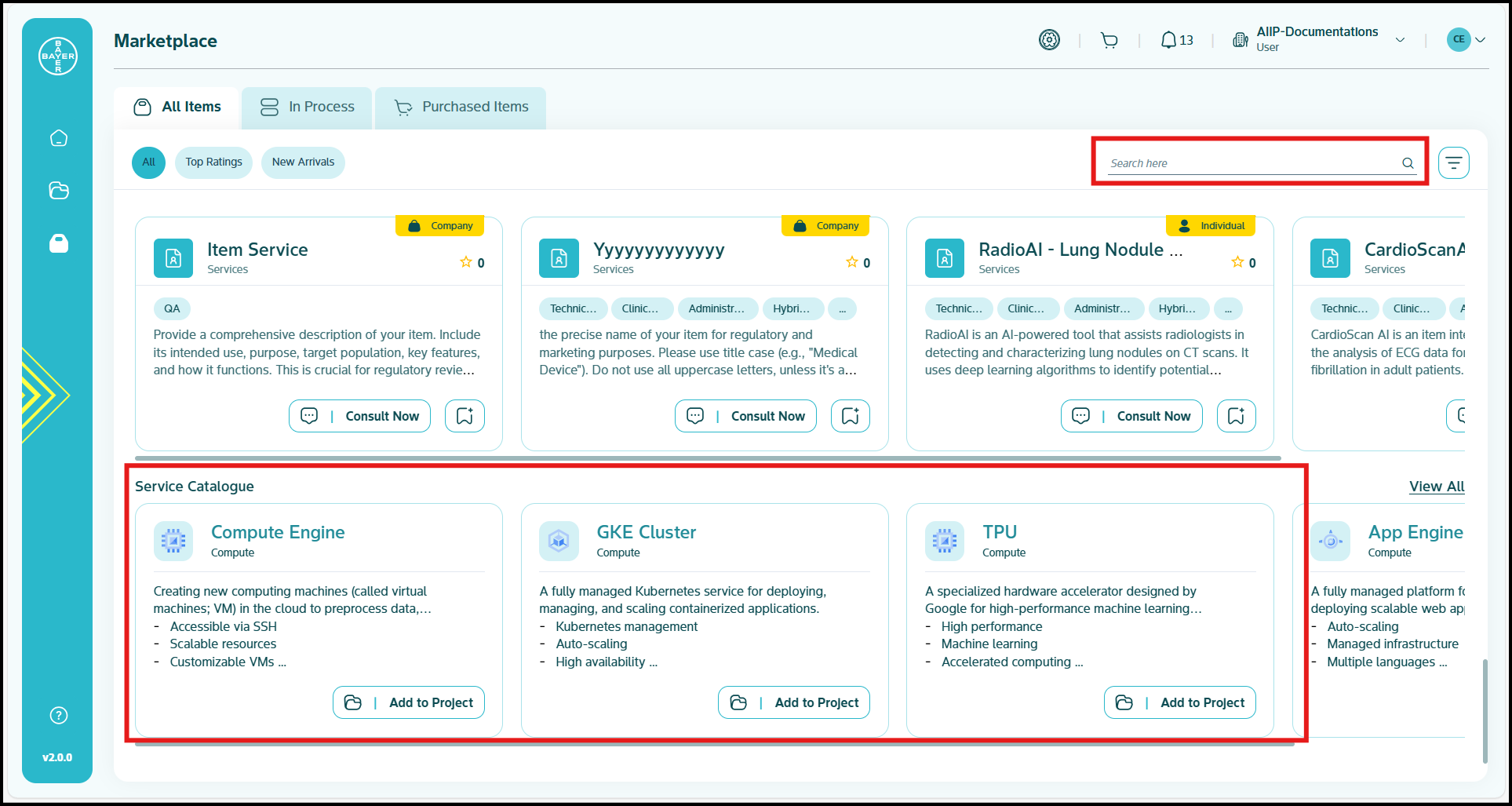

Service Catalogue

To simplify setting up Google Cloud Platform resources, the AI Innovation Platform provides a service catalogue from which users can add Google Cloud Platform services to their projects/workspaces directly.

As an example, assume users want to provision a GPU instance hosting a JupyterLab service that can be shared among multiple users, each with a separate workspace for maintaining their development environment. To achieve this from the GCP console, a user has to:

- Host the Jupyter service

- Attach it behind a load balancer and

- Secure it using an authentication method

With the service catalogue, this takes just a few clicks. The catalogue not only sets up authentication and the JupyterLab service but also applies other security and cloud best practices, such as adding tags and labels and not attaching external IPs.

The user has the flexibility to update/change the settings of the instances from the Google Cloud Platform console later on, as they now have access to the Google Cloud Platform console as well. For our JupyterLab example, this means users can, for instance, scale up or down the GPU resources, adjust the instance's machine type (e.g., CPU and memory), modify storage capacity, or update network configurations directly within the GCP console.

The service catalogue is an add-on feature, not a mandatory part of our platform. It is recommended for provisioning a baseline setup that follows security and cloud best practices, which can then be modified according to user needs. Users can still add services to their projects directly from the Google Cloud Platform console if they prefer.

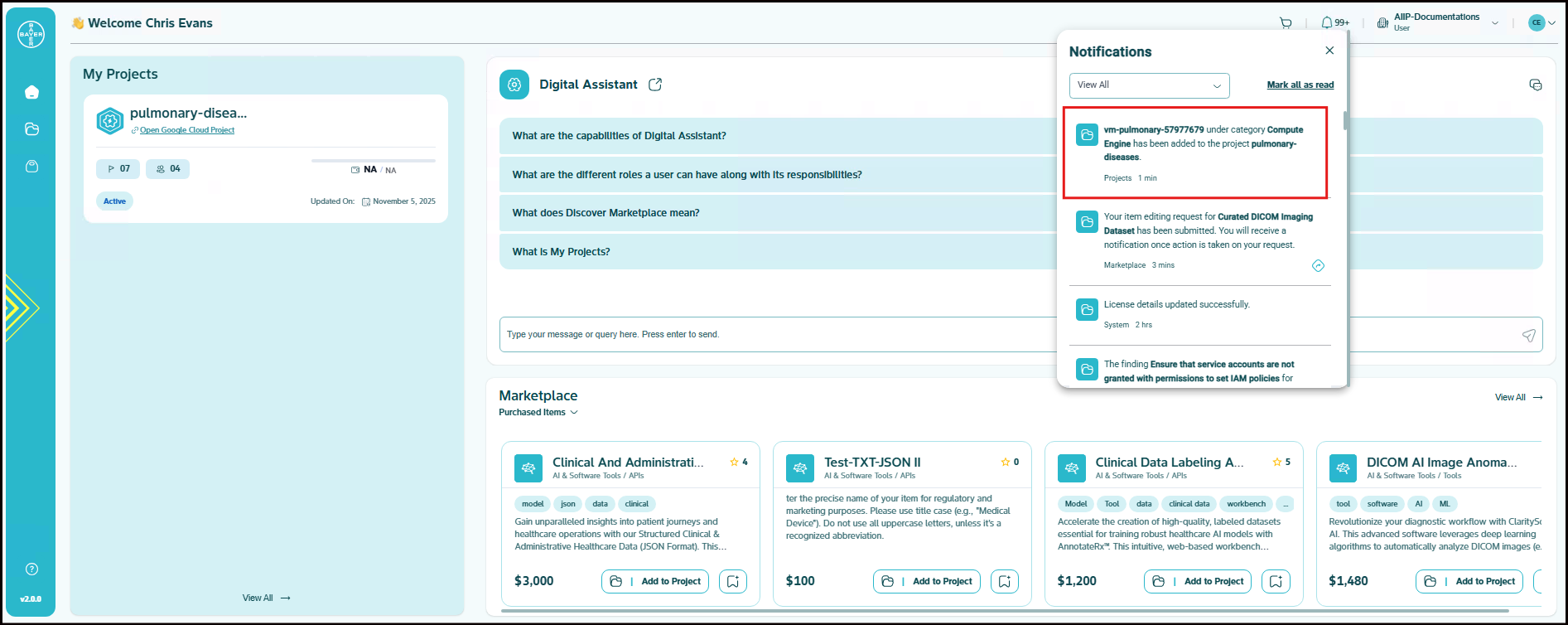

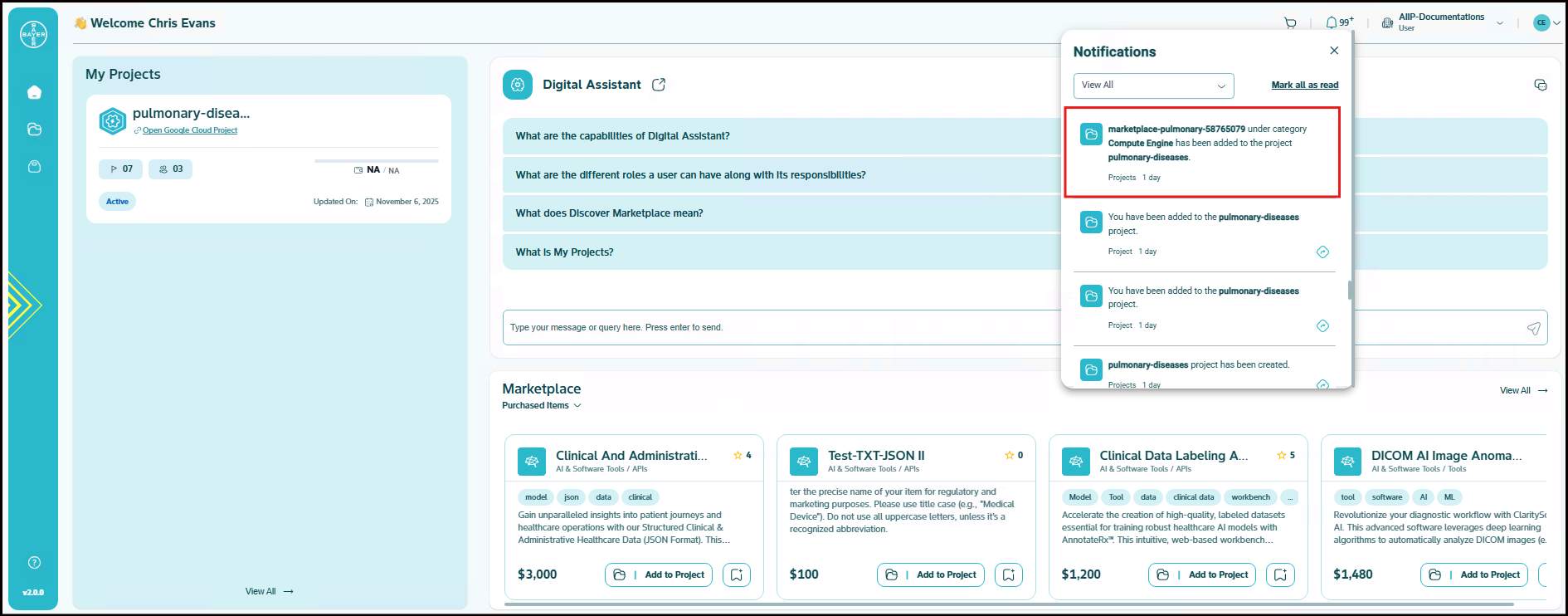

The services are added directly, and users receive a notification on the status of the service addition request.

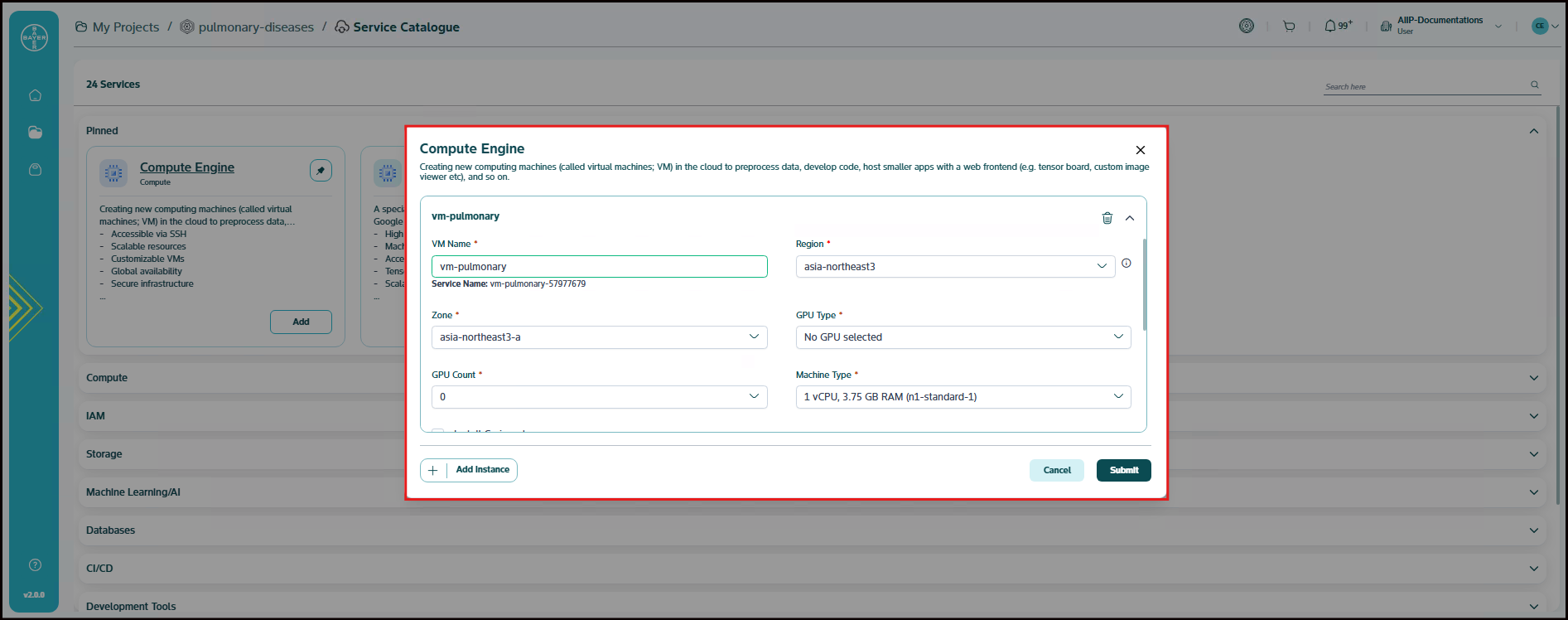

Provision Services using Service Catalogue

New resources can be spun up from the AI Innovation Platform itself. This section describes how.

Below are the steps to be followed to add services:

-

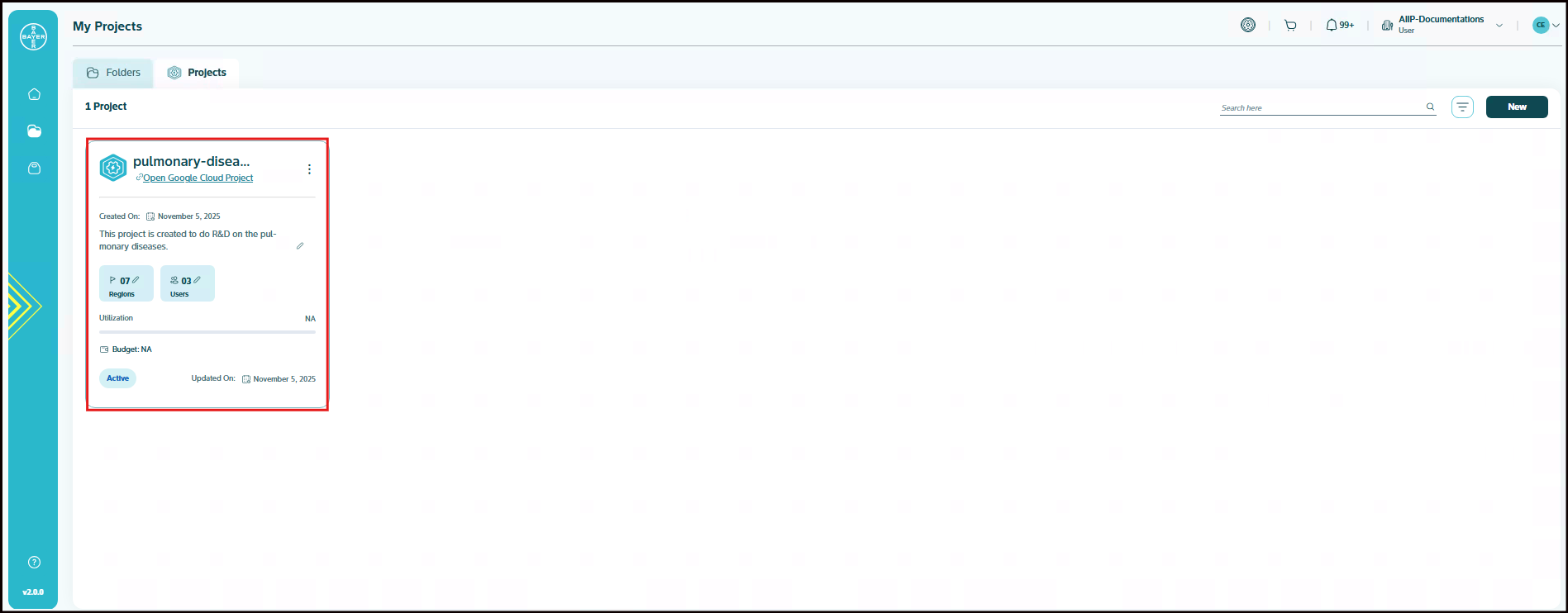

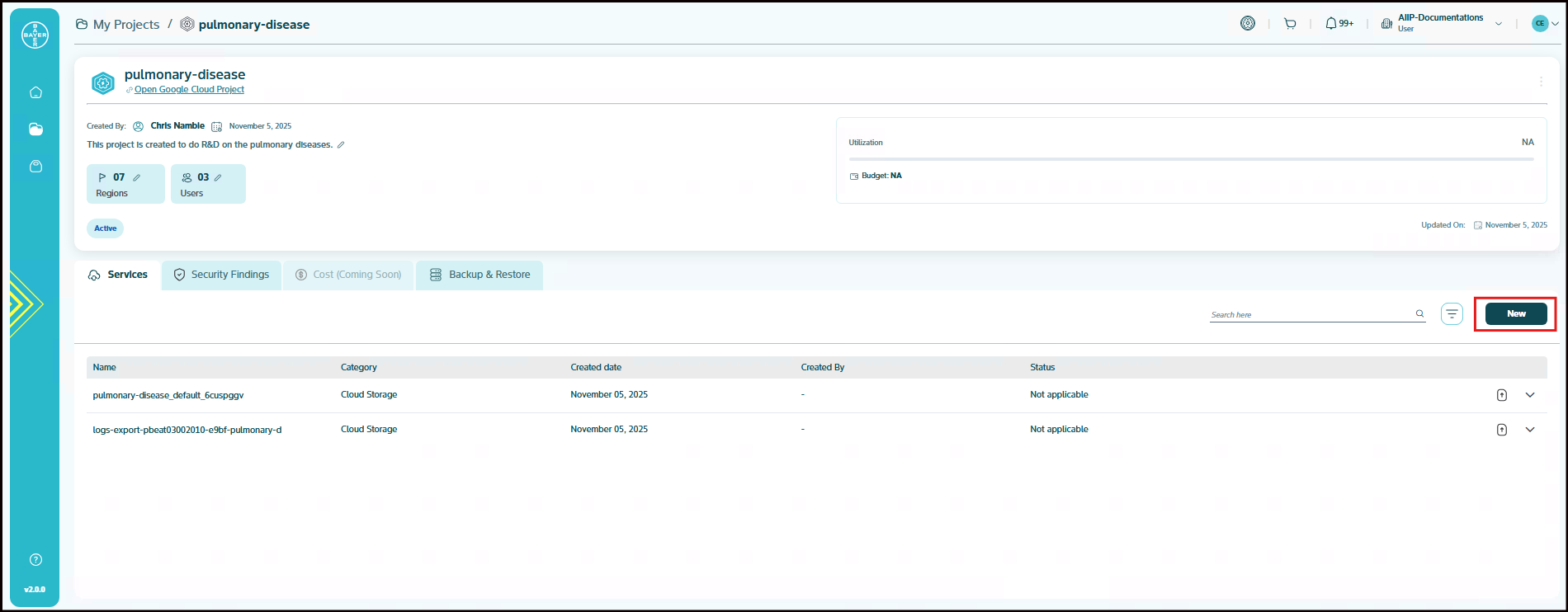

Go to My Projects and click on the project for which the services need to be added.

-

In the project details page, click on "New" under Services tab, to provision the services.

-

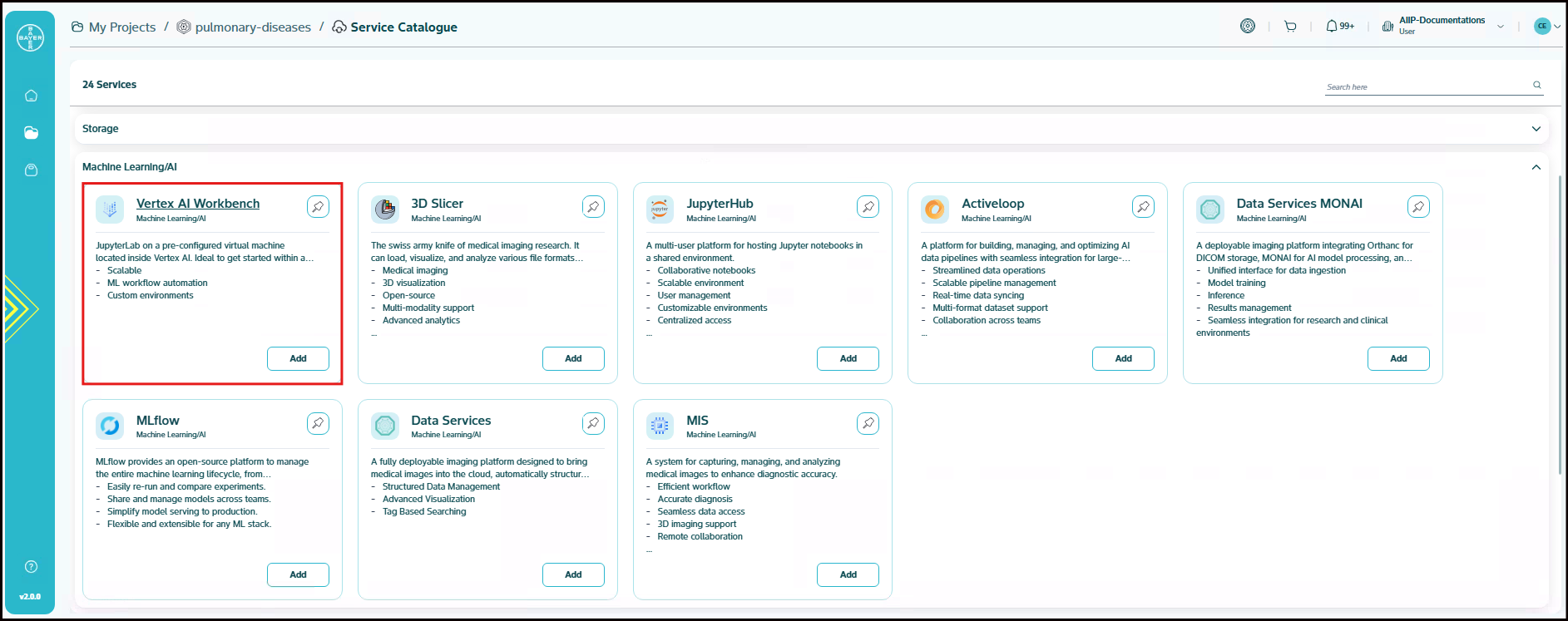

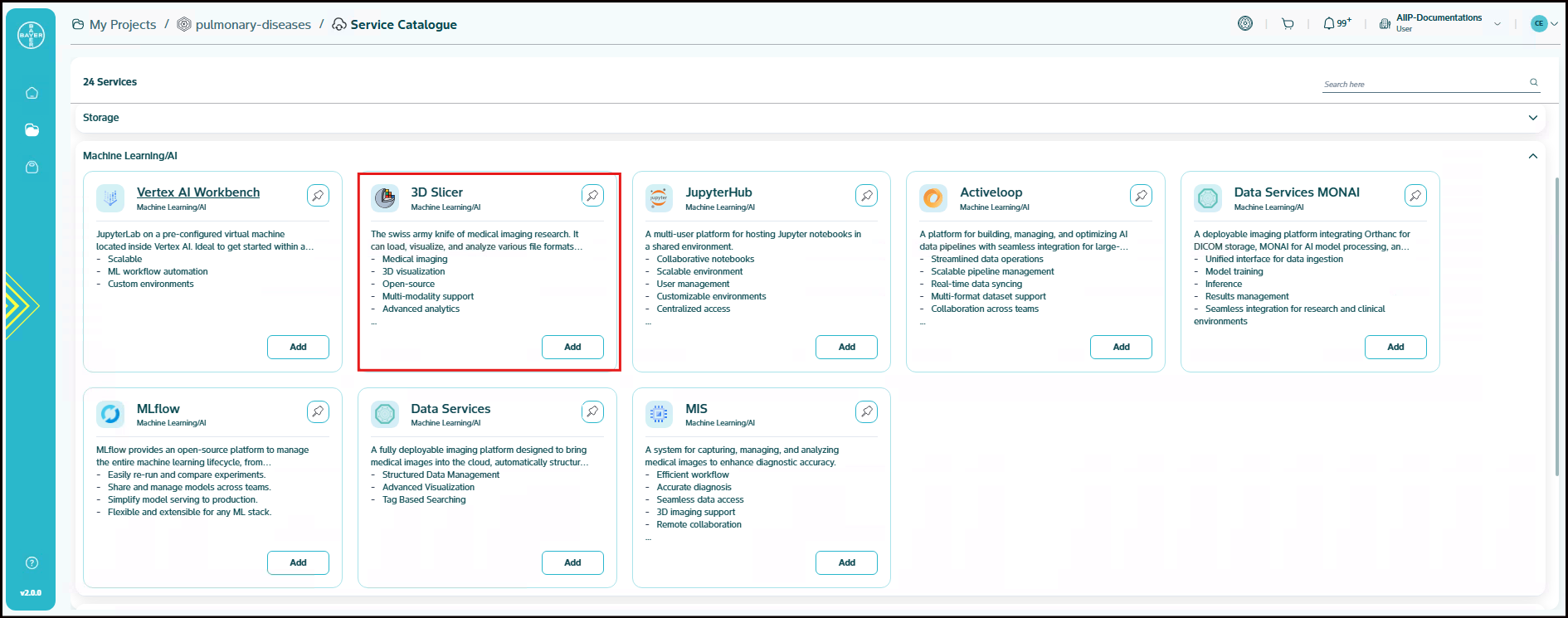

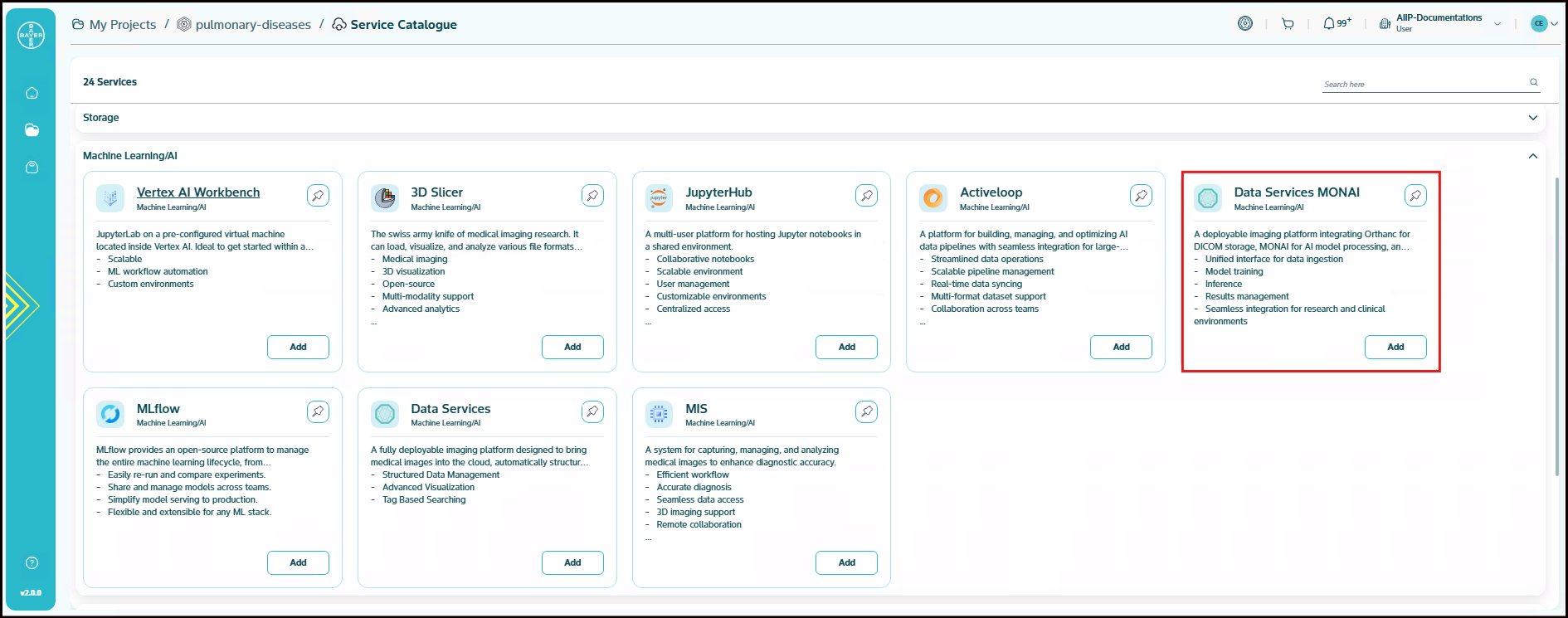

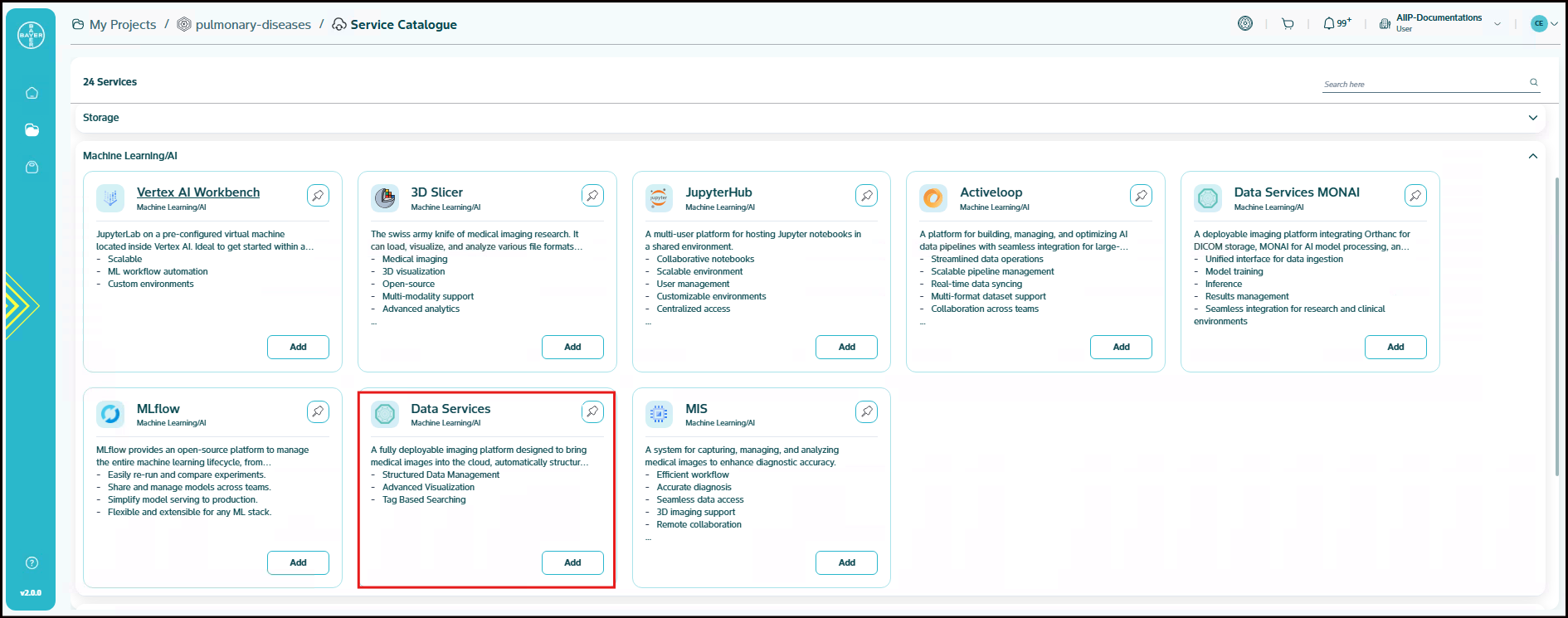

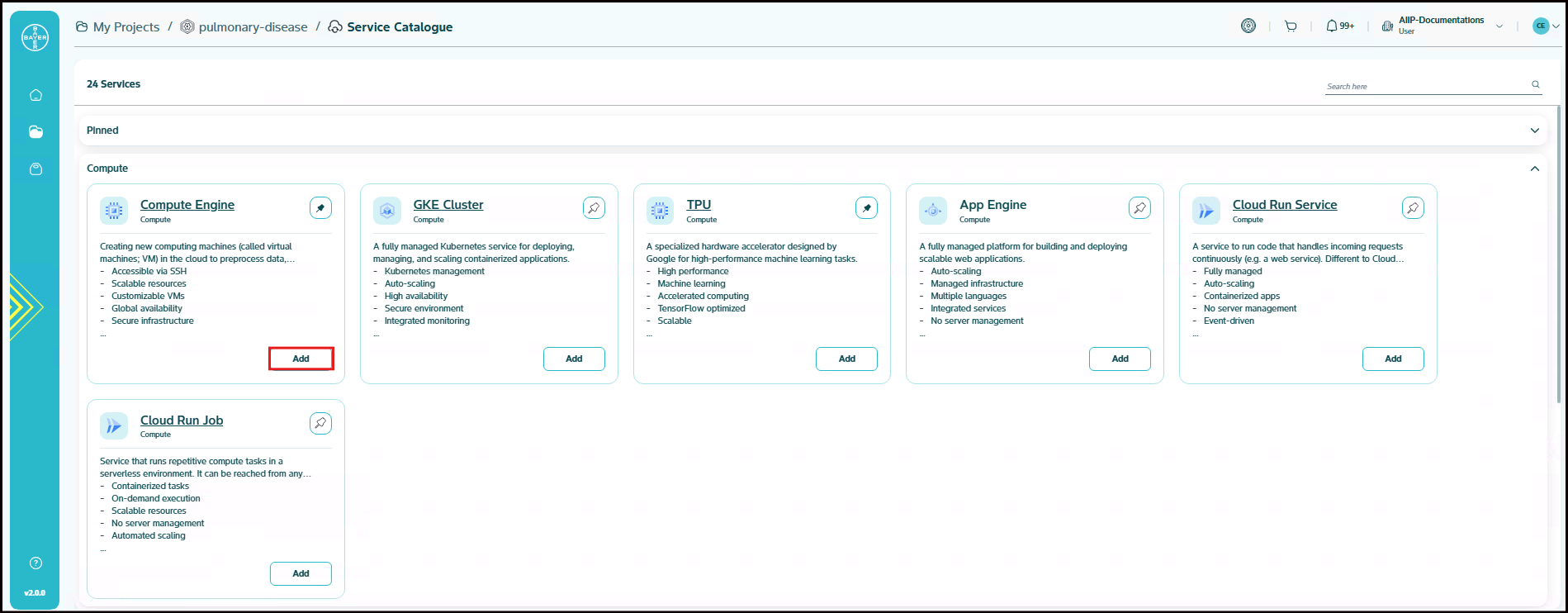

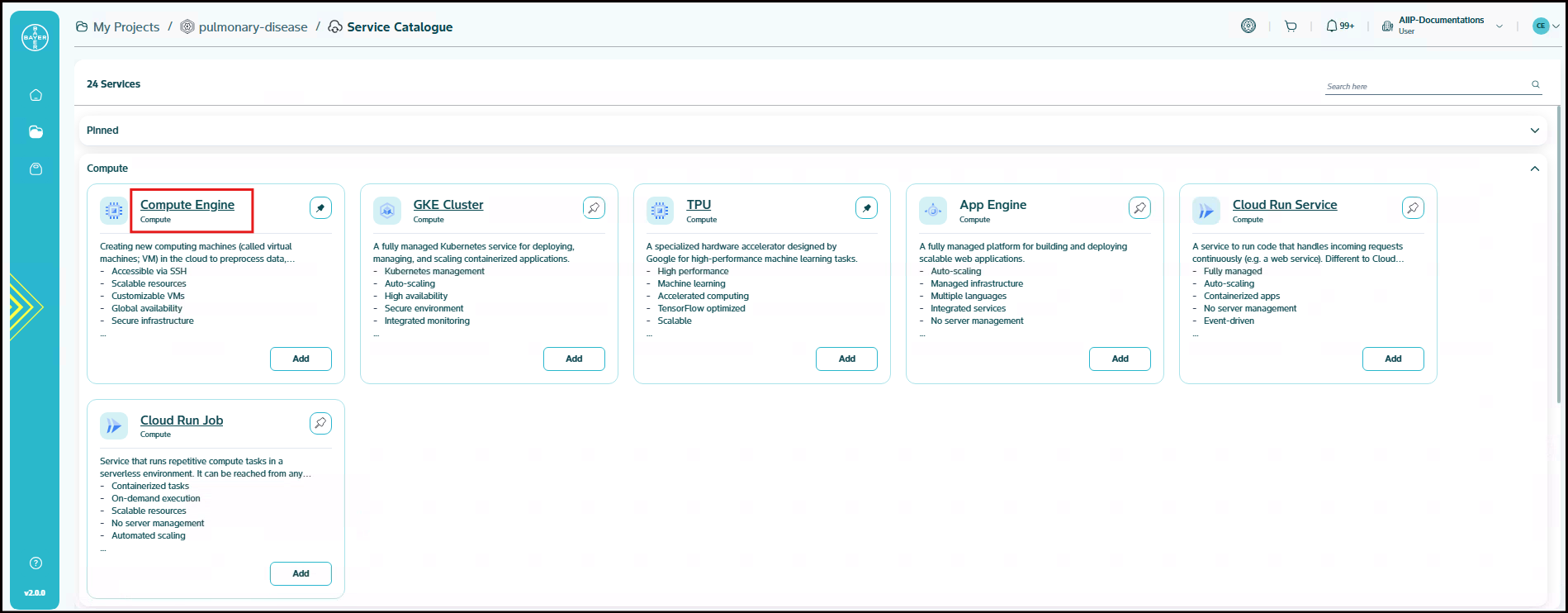

Select the service and click on "Add" to choose the configurations. Scroll through and choose from our wide range of service categories and pick the service that best suits your needs.

-

You will be notified once the service is added to your project. This may take a few minutes.

-

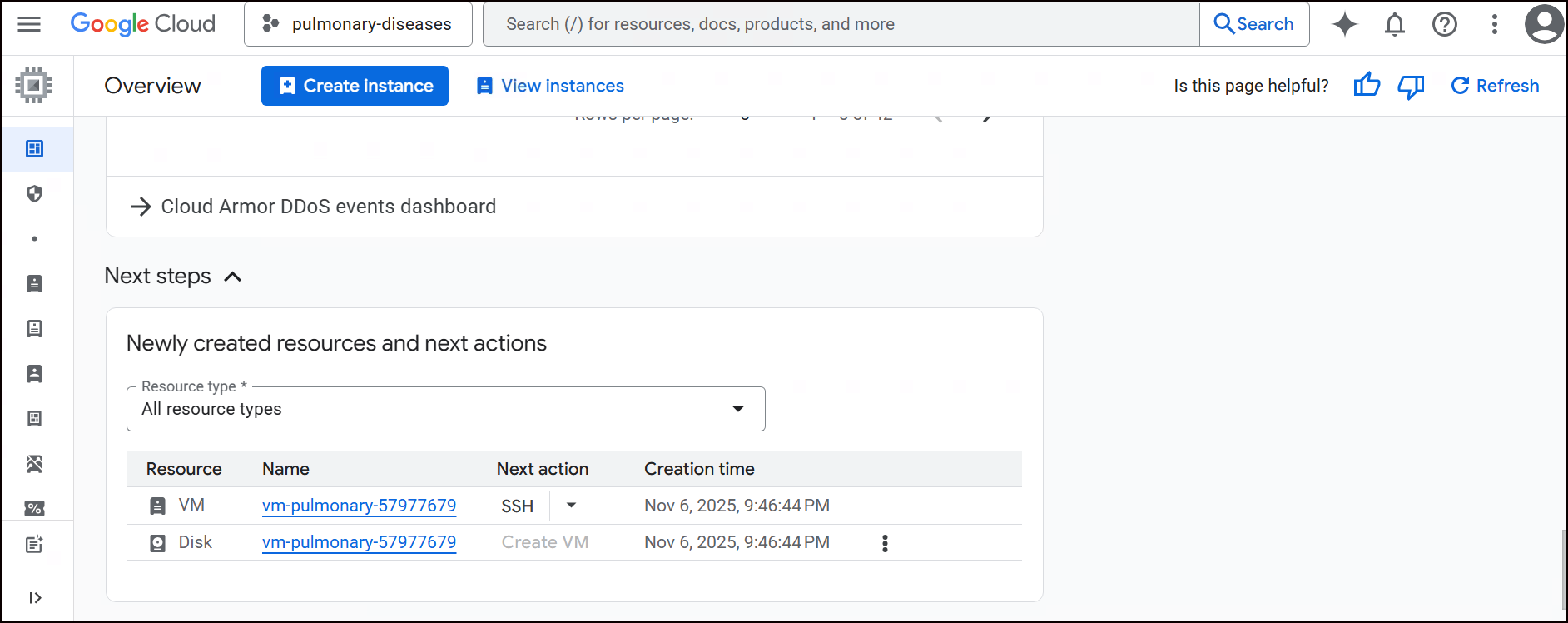

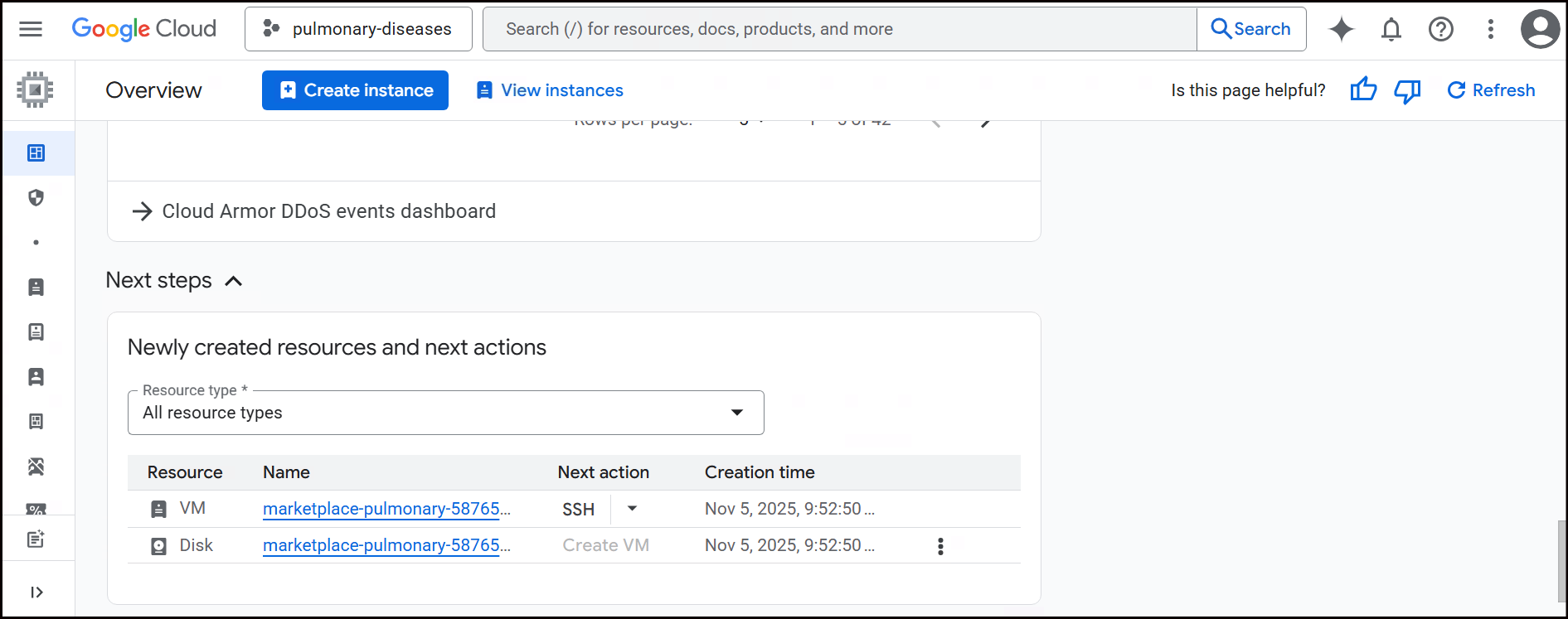

Once provisioned, a link of the service will be available on the service name itself as a hyperlink. You can click on this and navigate to the Google Cloud Platform console to see this service.

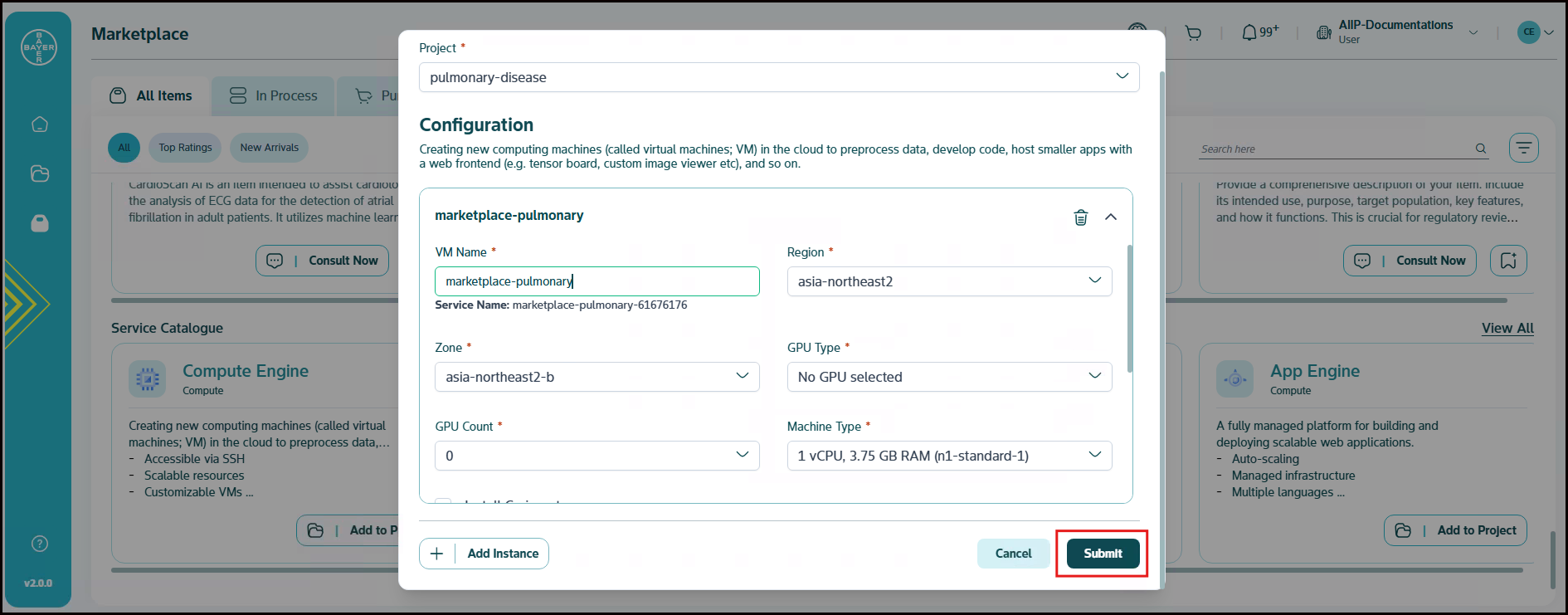

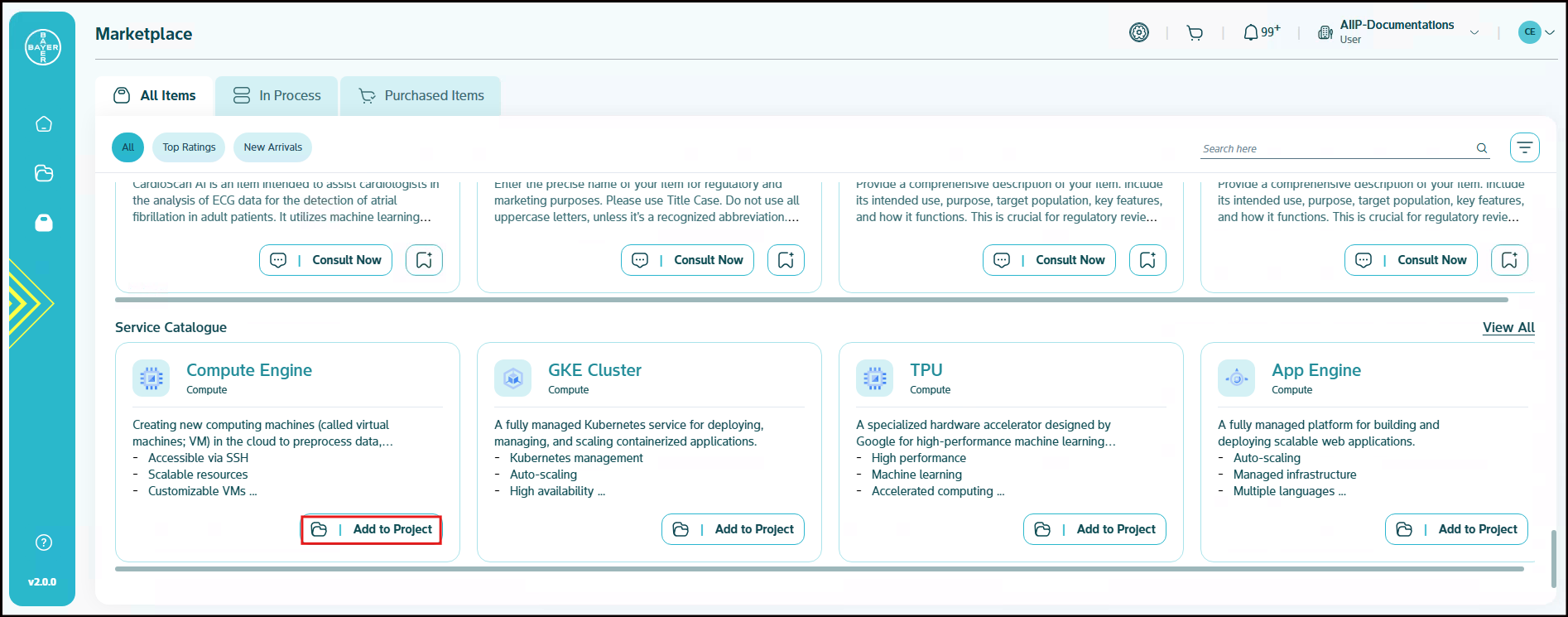

Provision Services from Marketplace

This guide outlines how you can provision a new service using the Marketplace. The Marketplace provides a centralized location for you to discover and deploy various services to your projects.

Below are the steps to be followed to add a service from Marketplace:

- Go to Marketplace and click the desired service in the dedicated "Service Catalogue" section under the All Items tab. You can also use the search bar to find the desired service.

- Once you have located the desired service, click "Add to Project" to add it to any of your existing projects.

- You will be notified once the service is added to your project. This may take a few minutes.

- Once provisioned, the service name becomes a hyperlink on the project details page. Click it to open the service in the Google Cloud Platform console.

Note

Services can be added in any project state (requested, provisioning, etc.), but they are spun up only once the project is in the active state. Adding services does not require approval from admins.

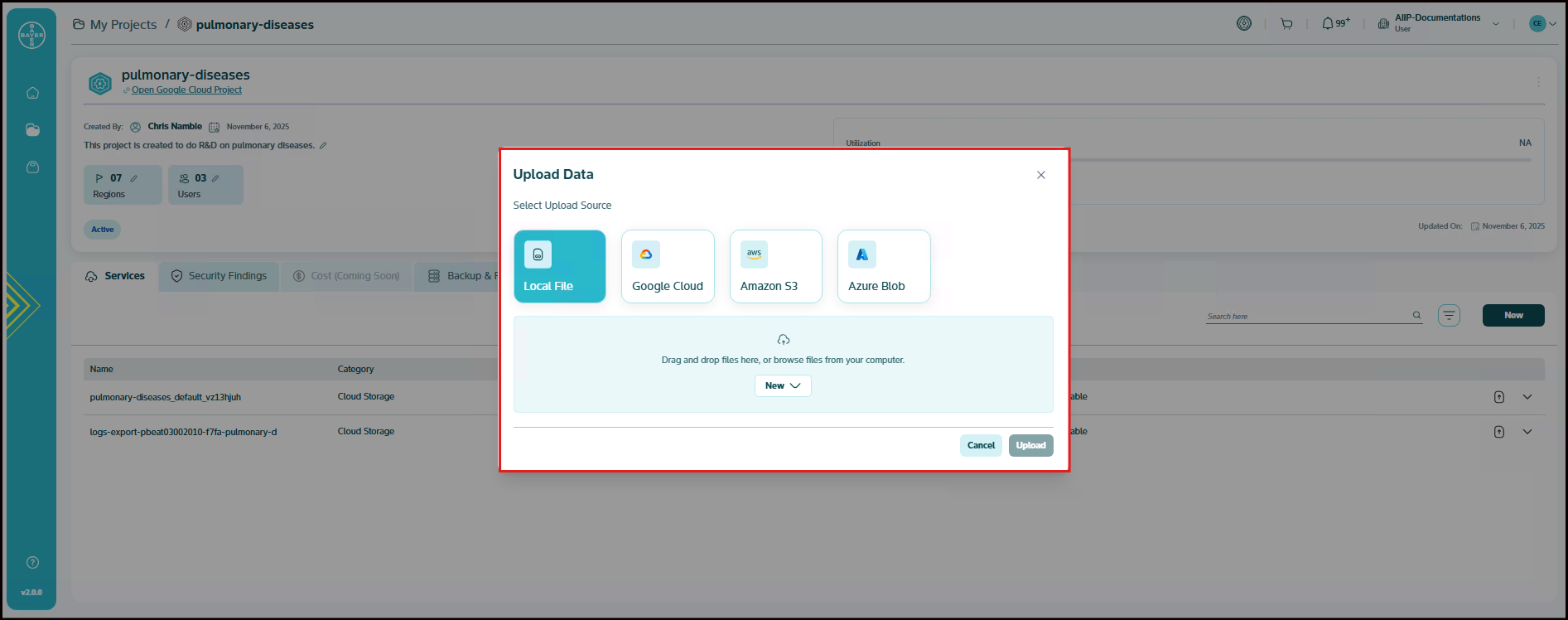

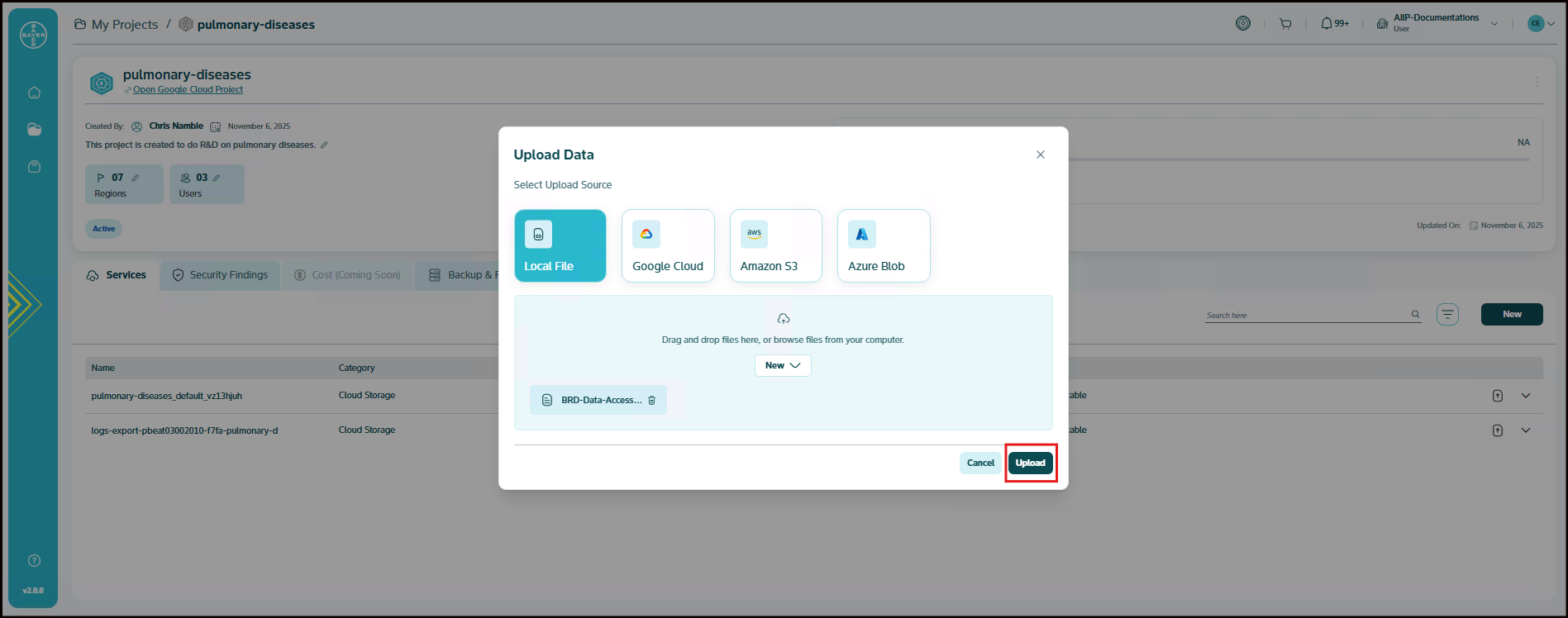

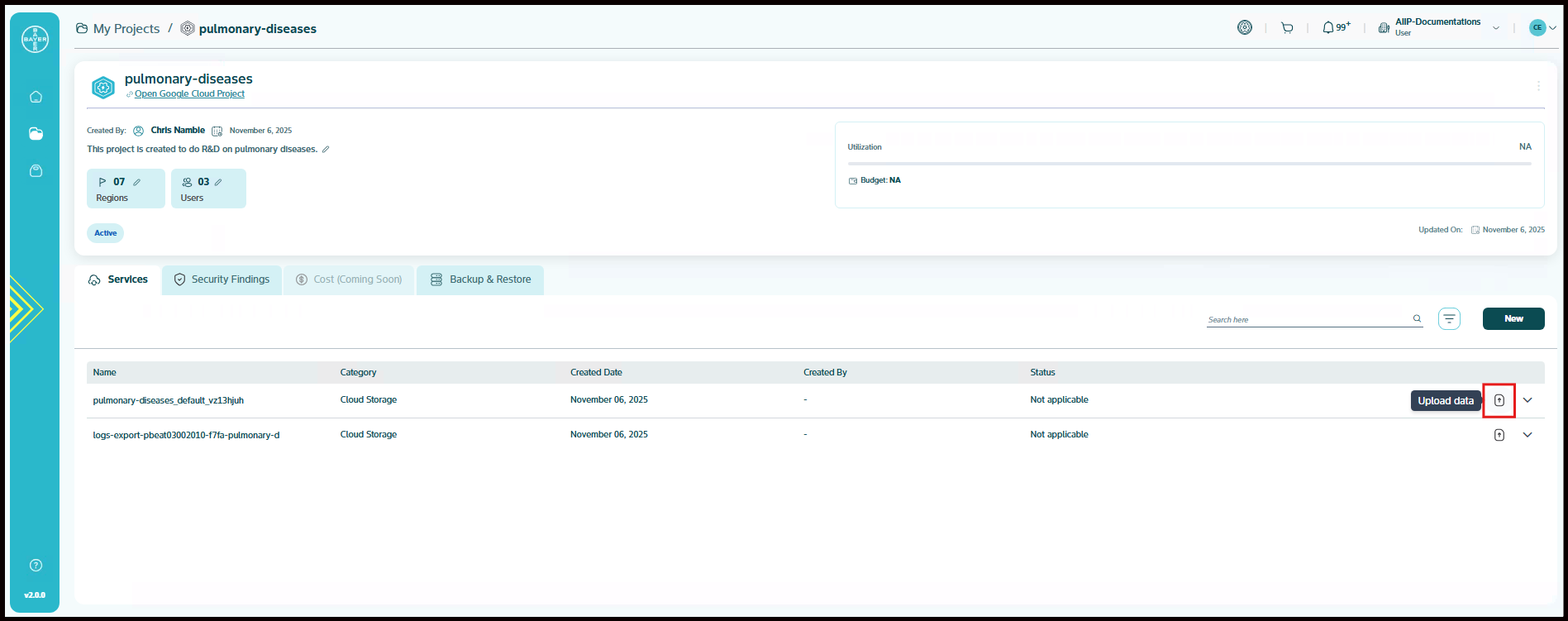

Uploading Data to Your Bucket via the Services Page

This guide explains how you can upload data to your bucket directly from the Services page.

-

In the project details page, click on upload icon next to the Cloud Storage service under Services tab, to upload the data.

-

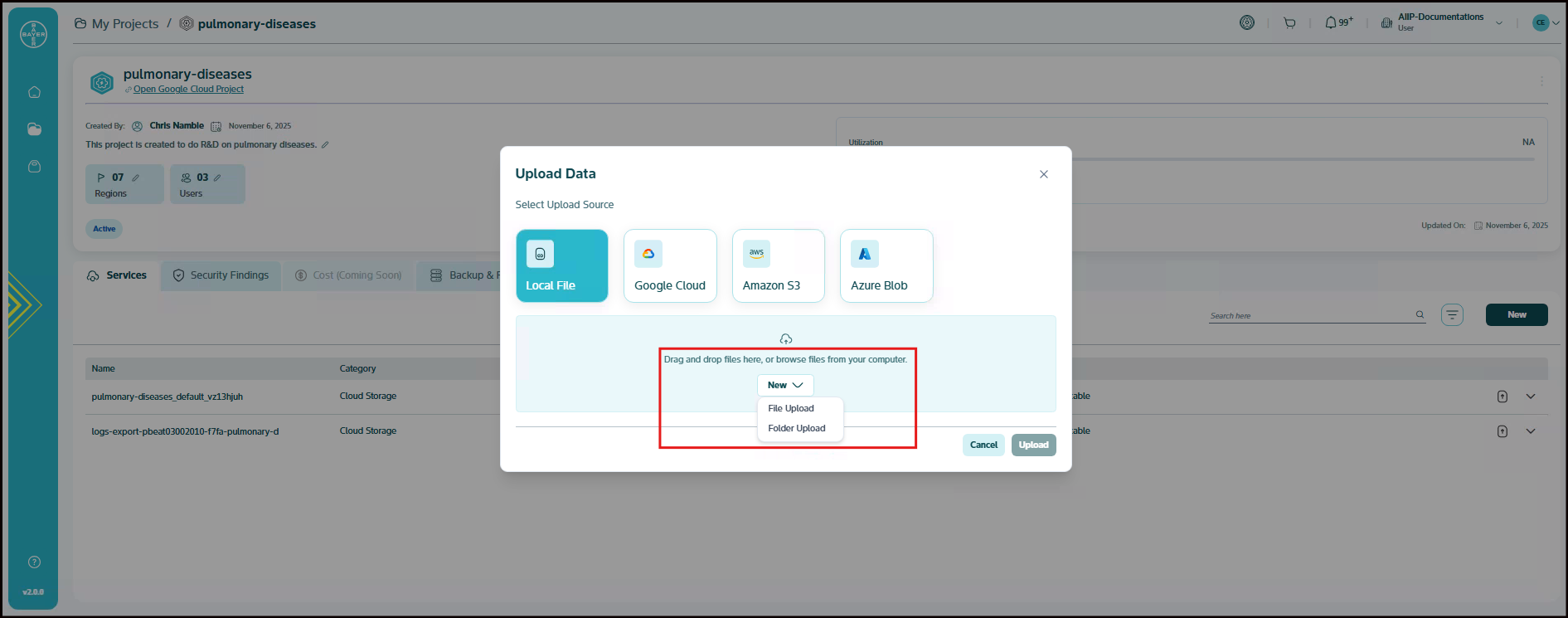

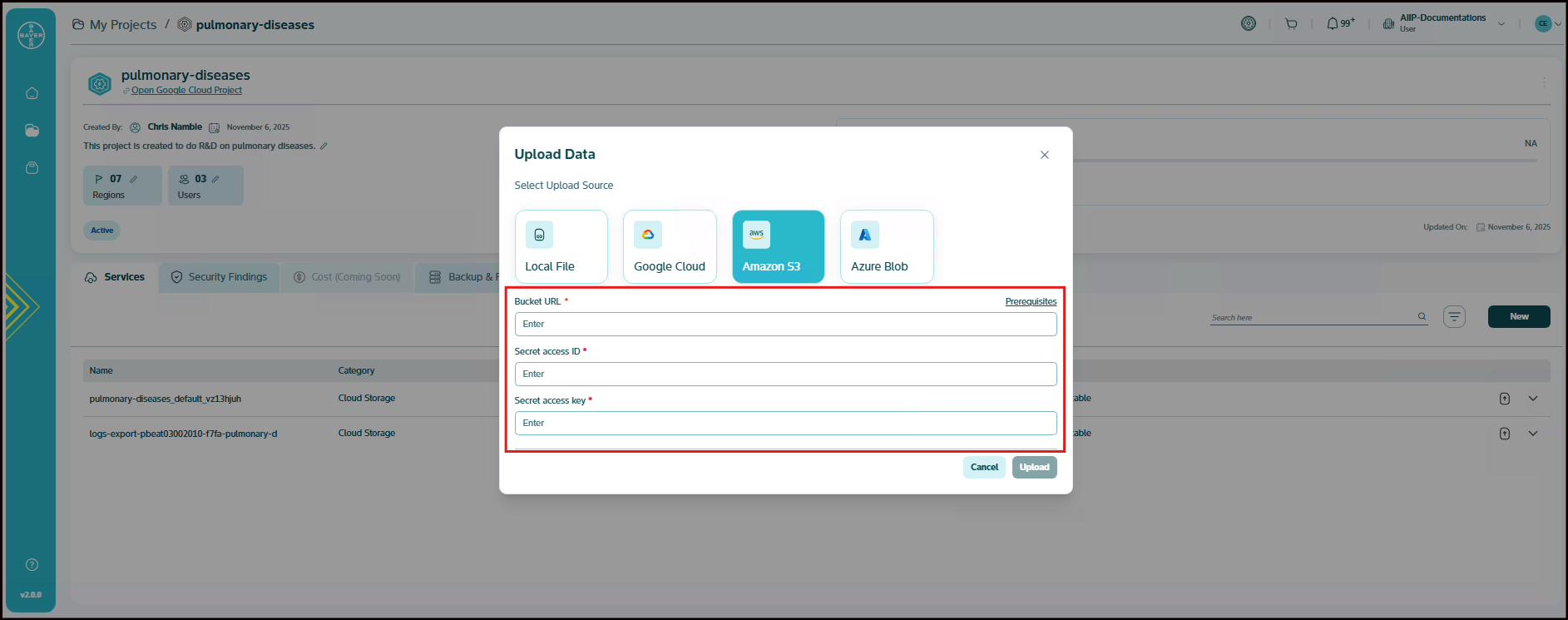

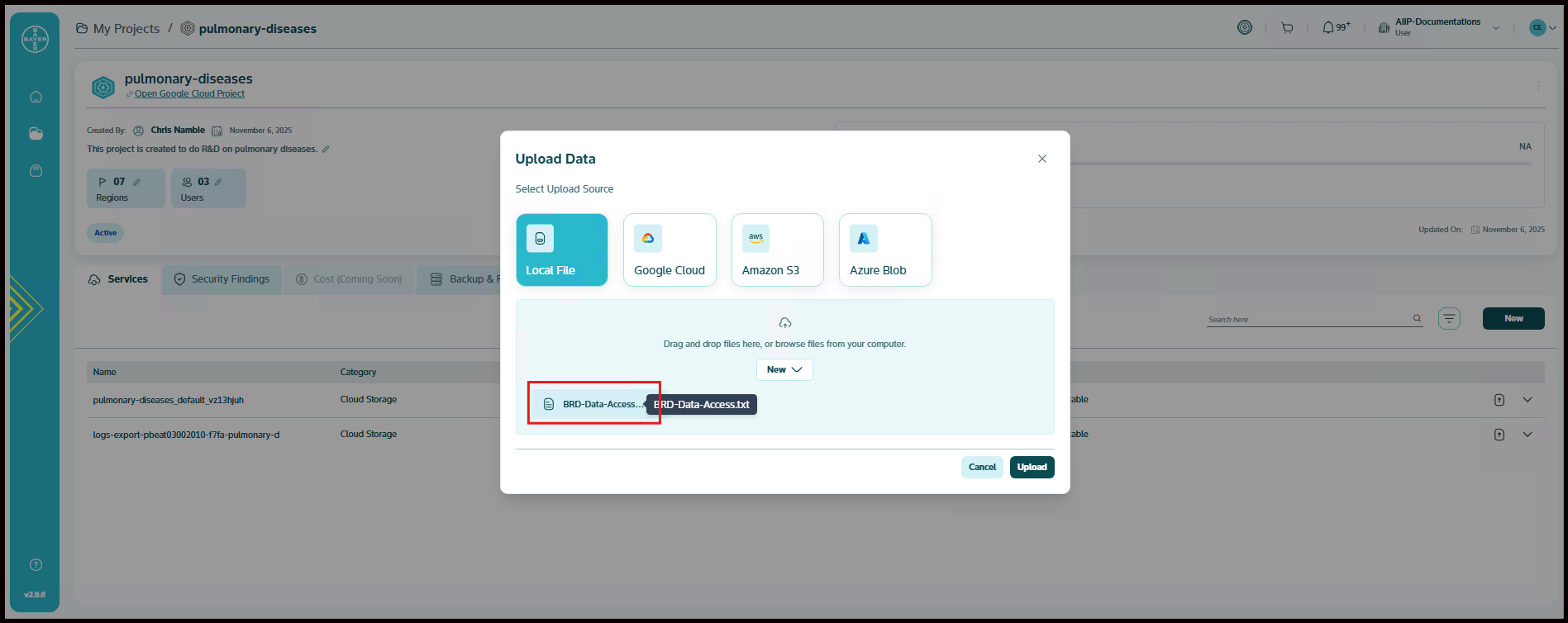

The "Upload Data" pop-up presents several options for your data source. You can choose the option that best suits your needs:

- Local File (Selected by default): You can upload files directly from your computer.

- Google Cloud: You can connect to your Google Cloud storage by entering the Bucket URL.

- Amazon S3: You can connect to your Amazon S3 buckets by entering the Bucket URL, Secret access ID, and Secret access key.

- Azure Blob: You can connect to your Azure Blob storage by entering the Bucket URL, Storage account name, and Shared access signature.

-

Upload Your Data:

-

For Local File Uploads: You can drag and drop your files directly into the designated area within the pop-up window or click on "browse files from your computer" to open a file explorer and select the files you wish to upload.

-

For Cloud Storage Uploads (Google Cloud, Amazon S3, Azure Blob): After you select one of these options, you will be prompted to authenticate and select the specific bucket or container you wish to upload to. Follow the on-screen instructions for authentication and selection.

-

-

Once you have selected your files or connected to your cloud storage, you can review the details of your upload.
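The connection details requested for each data source can be summarized as a small lookup. The sketch below is illustrative only: `REQUIRED_FIELDS` and `missing_fields` are hypothetical names rather than part of the platform, and the field names are simply taken from the options listed above.

```python
# Hypothetical lookup of the fields the "Upload Data" pop-up asks for,
# keyed by data source. Field names follow the options listed above.
REQUIRED_FIELDS = {
    "Local File": [],
    "Google Cloud": ["Bucket URL"],
    "Amazon S3": ["Bucket URL", "Secret access ID", "Secret access key"],
    "Azure Blob": ["Bucket URL", "Storage account name", "Shared access signature"],
}


def missing_fields(source: str, provided: dict) -> list:
    """Return the required fields that are absent or empty for a data source."""
    if source not in REQUIRED_FIELDS:
        raise ValueError(f"Unknown data source: {source}")
    return [name for name in REQUIRED_FIELDS[source] if not provided.get(name)]
```

A check like this could run before submitting the form, so that an incomplete Amazon S3 or Azure Blob connection is caught early.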

Note

- For large files or numerous files, the upload process may take some time depending on your internet connection and the size of the data.

- You must ensure that the files you upload do not contain any passwords or other sensitive data.

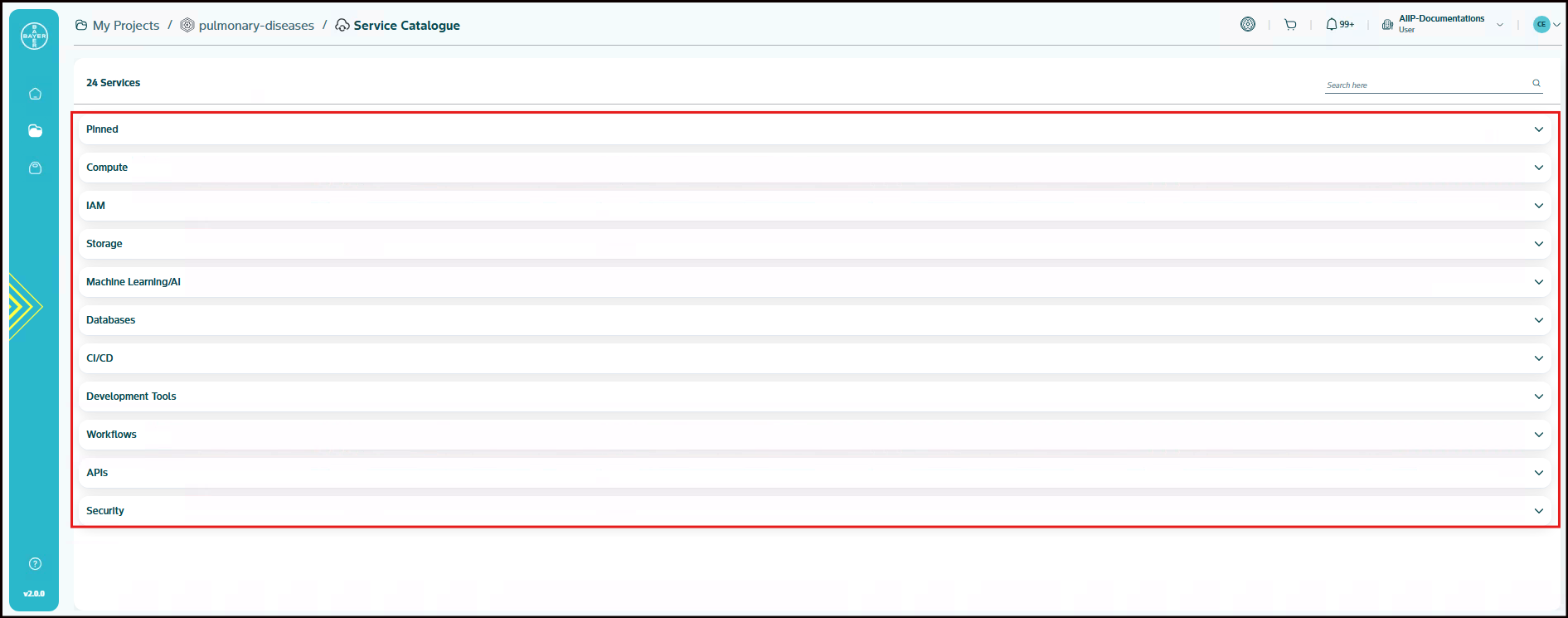

Service Category

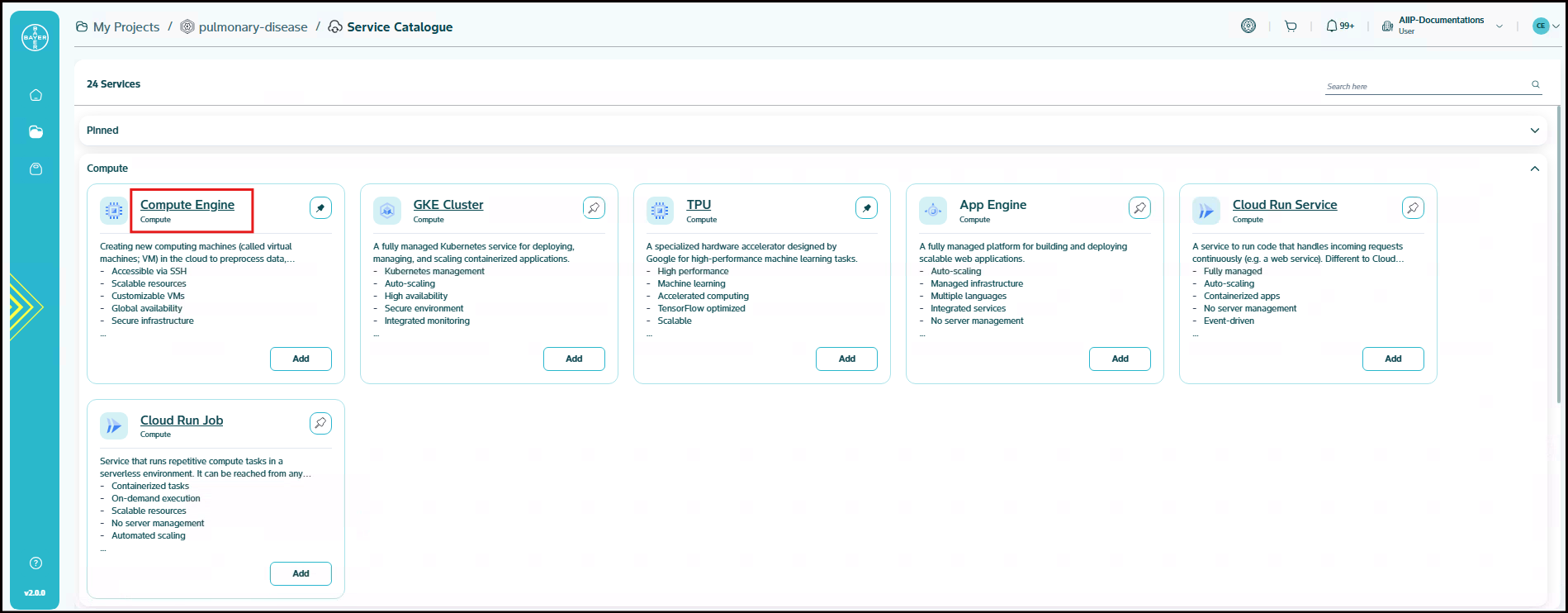

To enhance usability and simplify navigation, the available services are organized into categories based on their primary function. You can select and provision services from any group depending on your specific requirements.

The groups and their respective services are as follows:

-

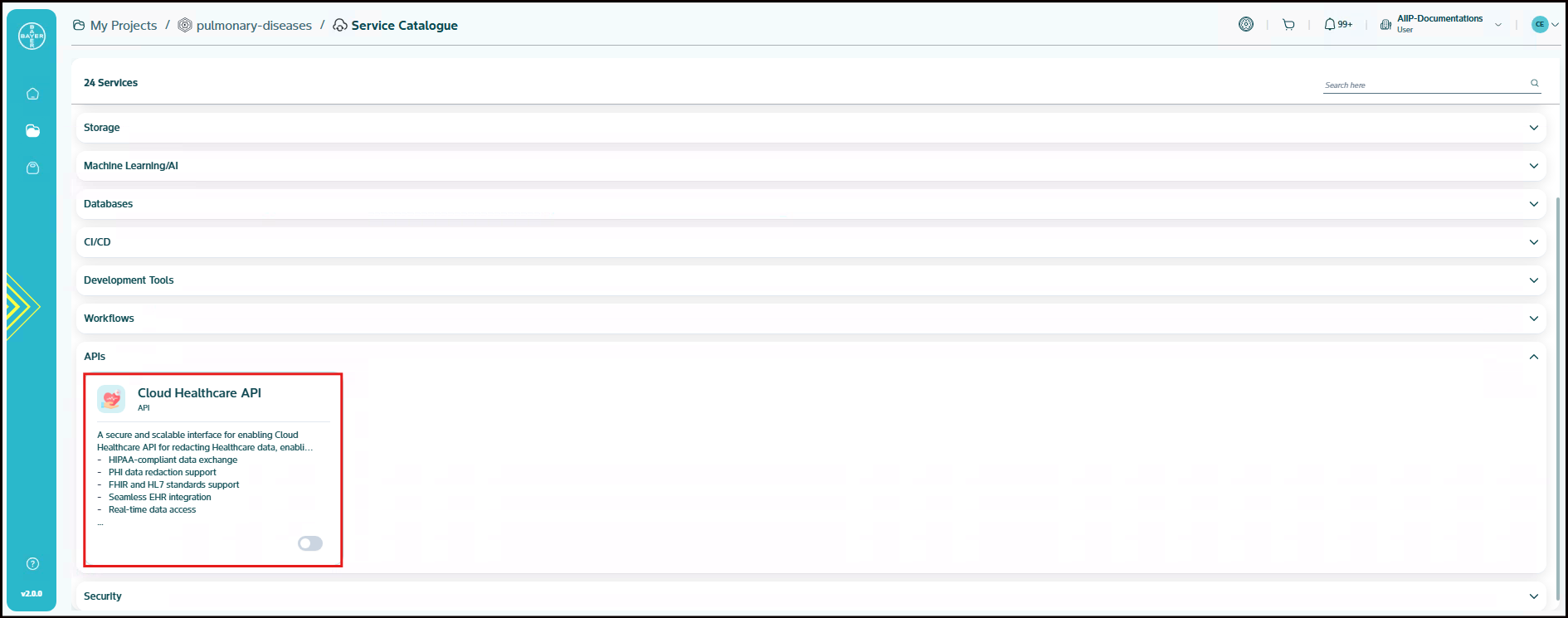

API: Includes the Cloud Healthcare API service that provides industry-standard protocols and formats for ingesting, storing, analyzing, and integrating healthcare data with cloud-based applications.

-

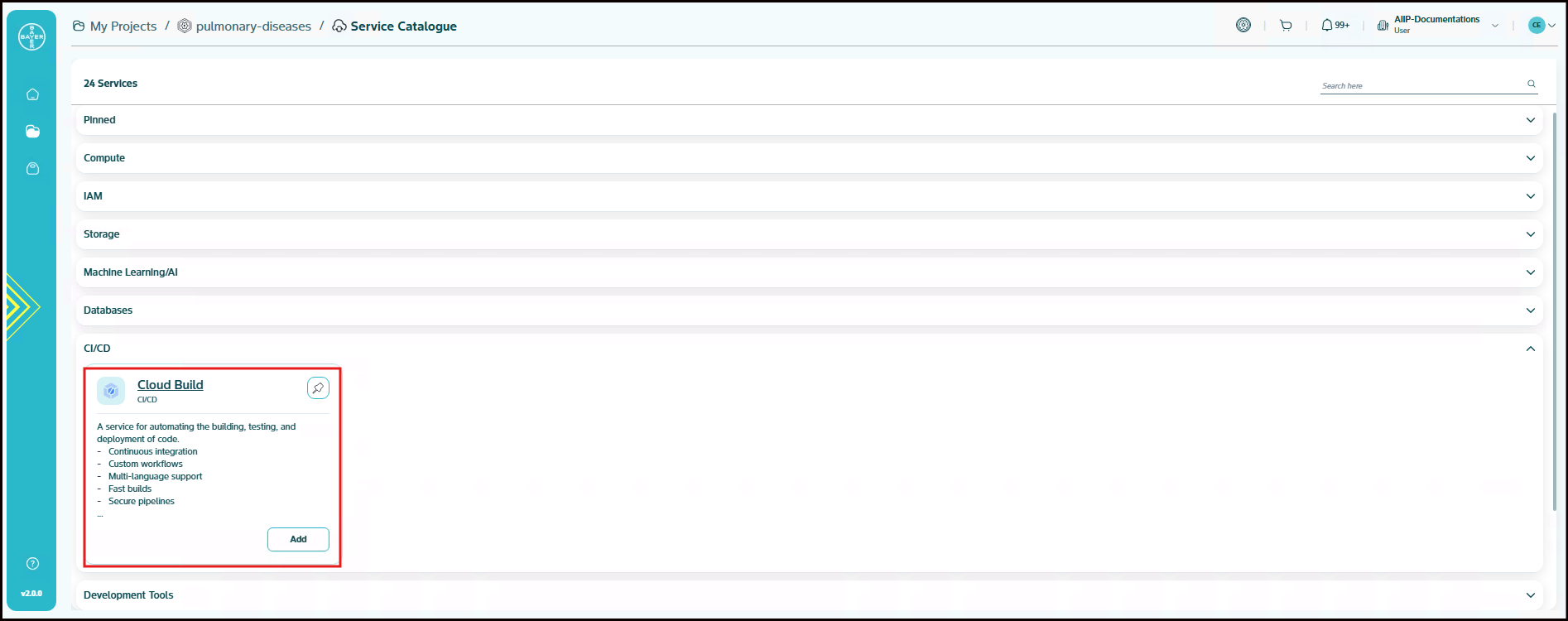

CI/CD (Continuous Integration / Continuous Deployment): Services for automating build and deployment workflows. This group include Cloud Build.

-

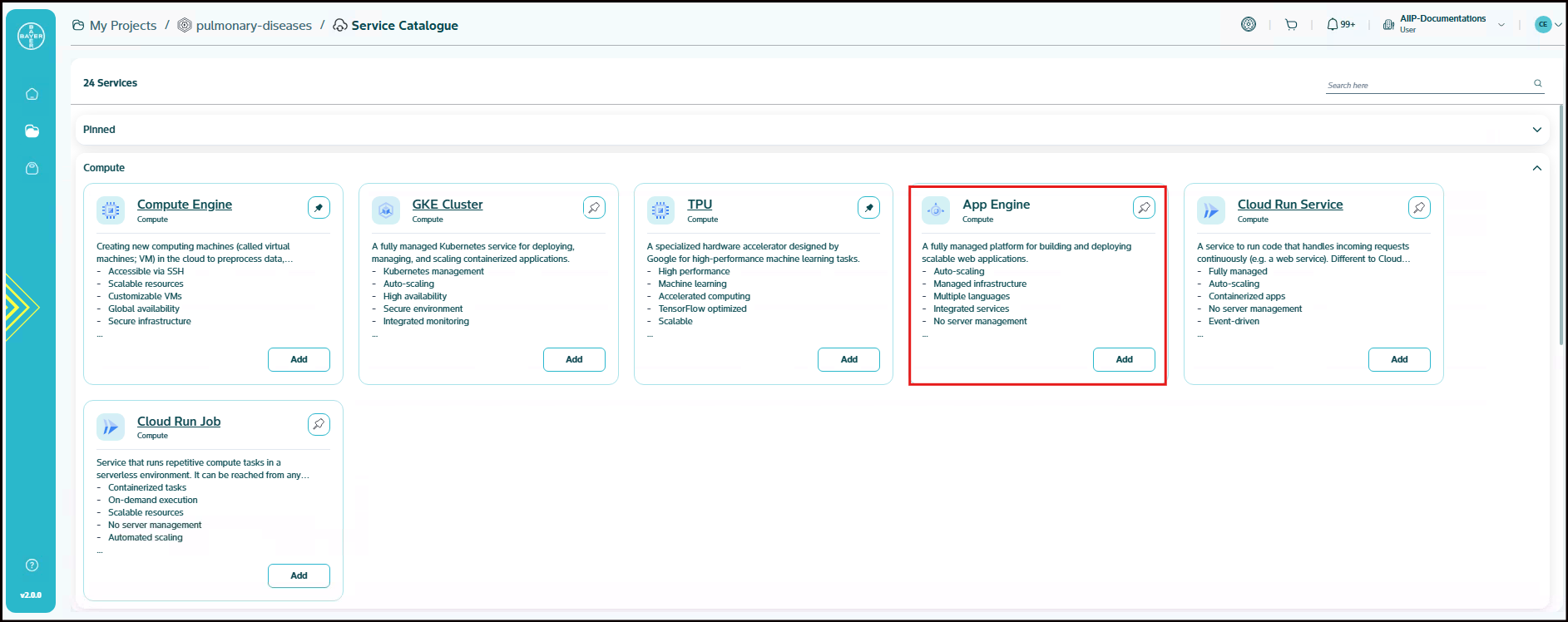

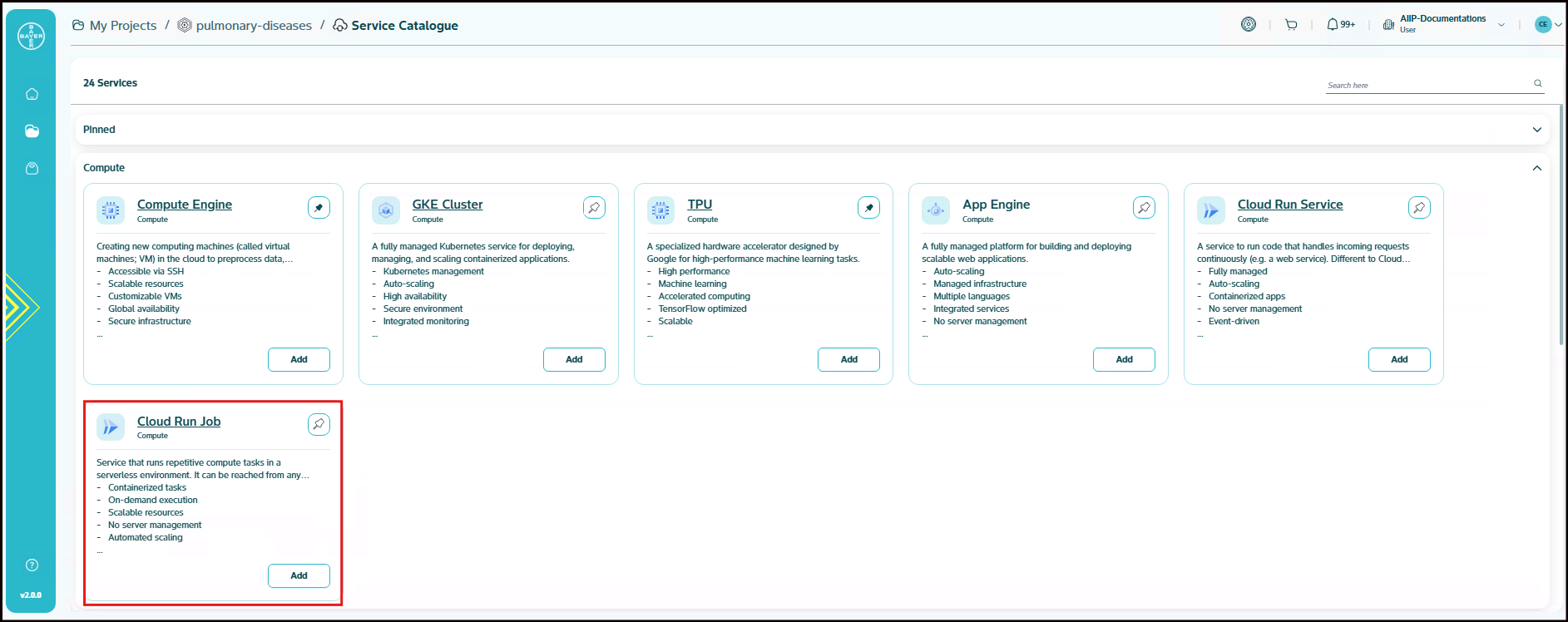

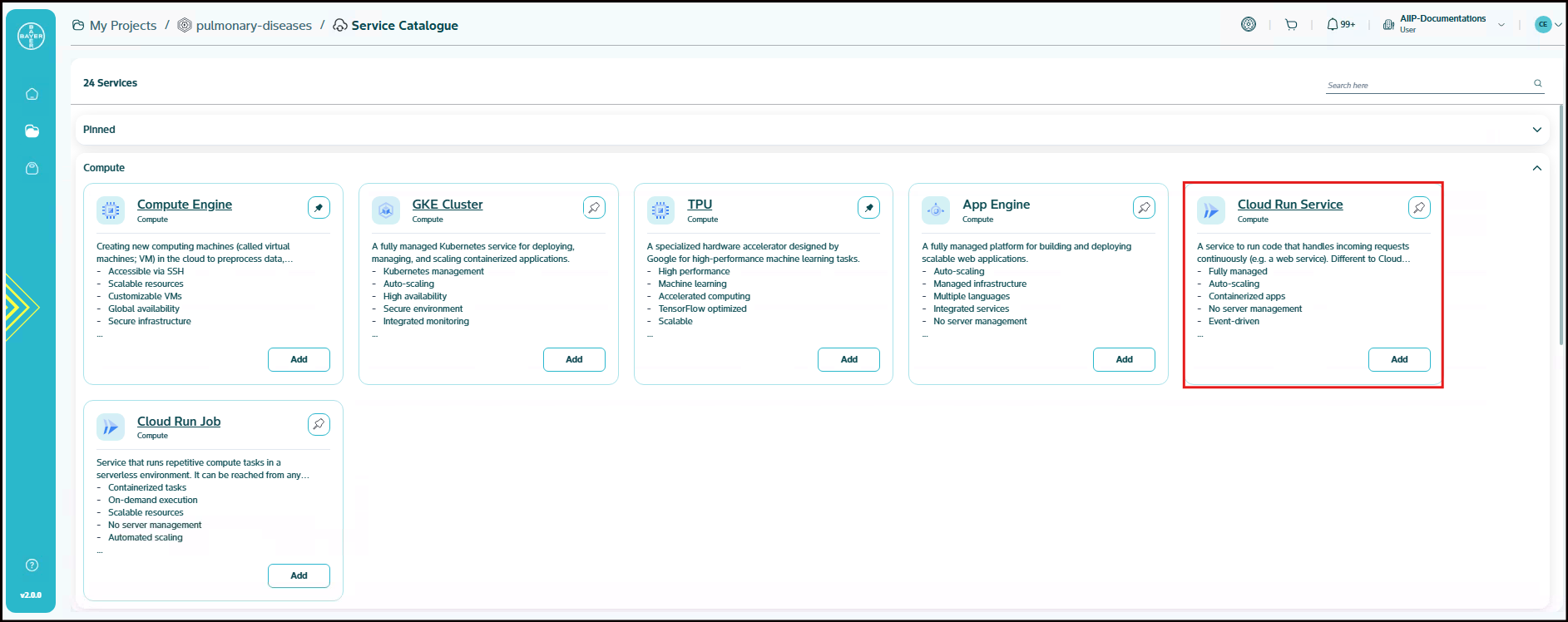

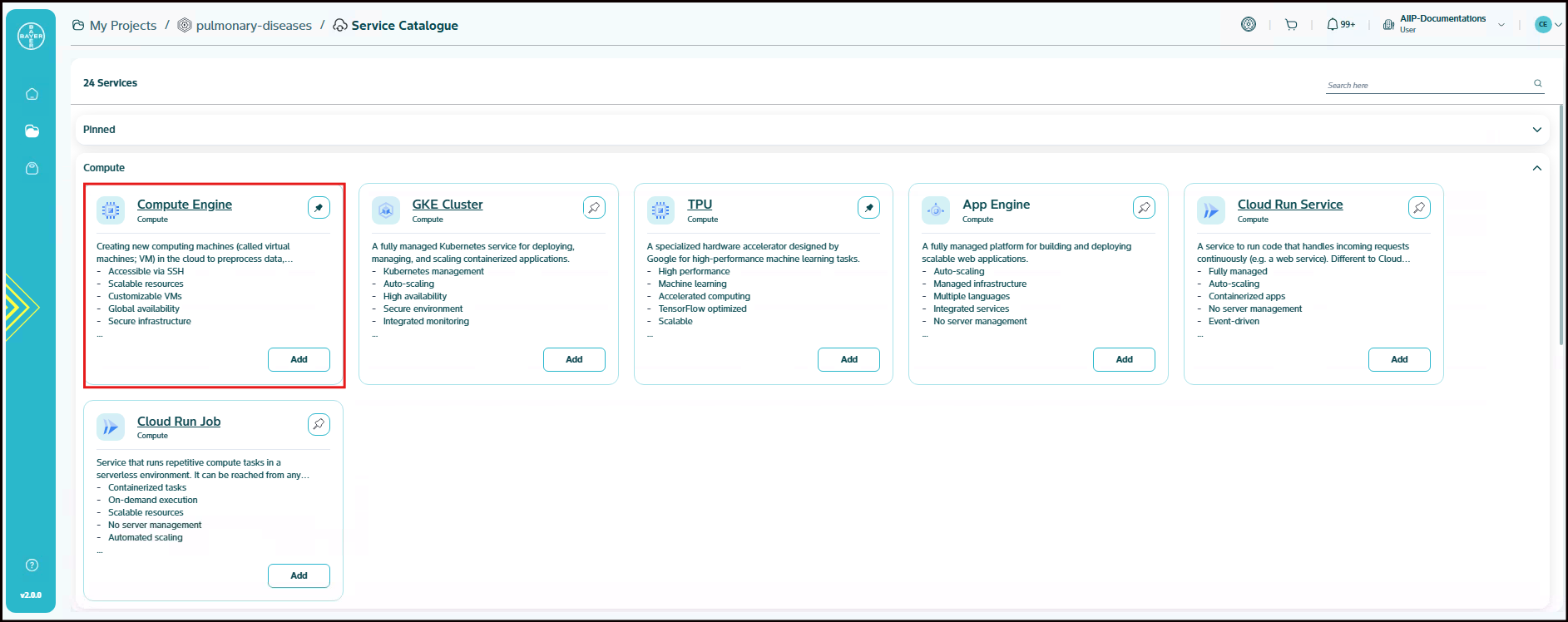

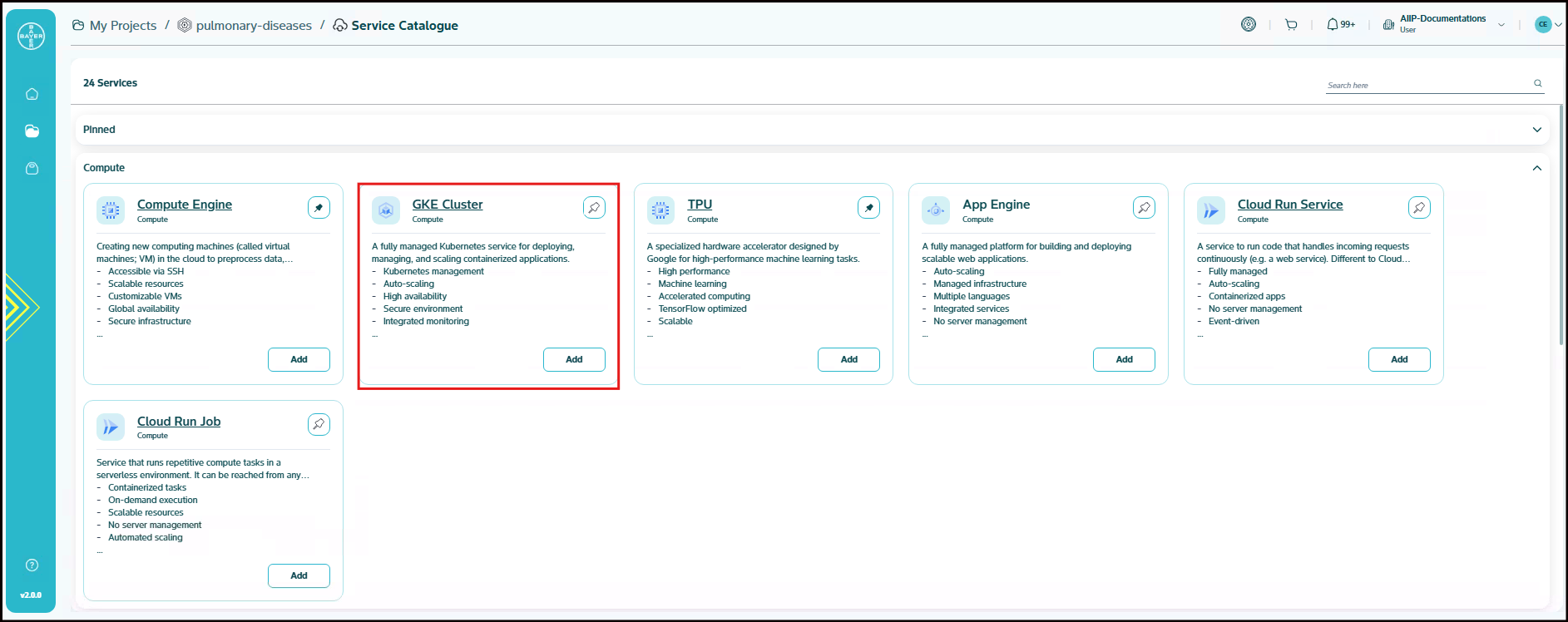

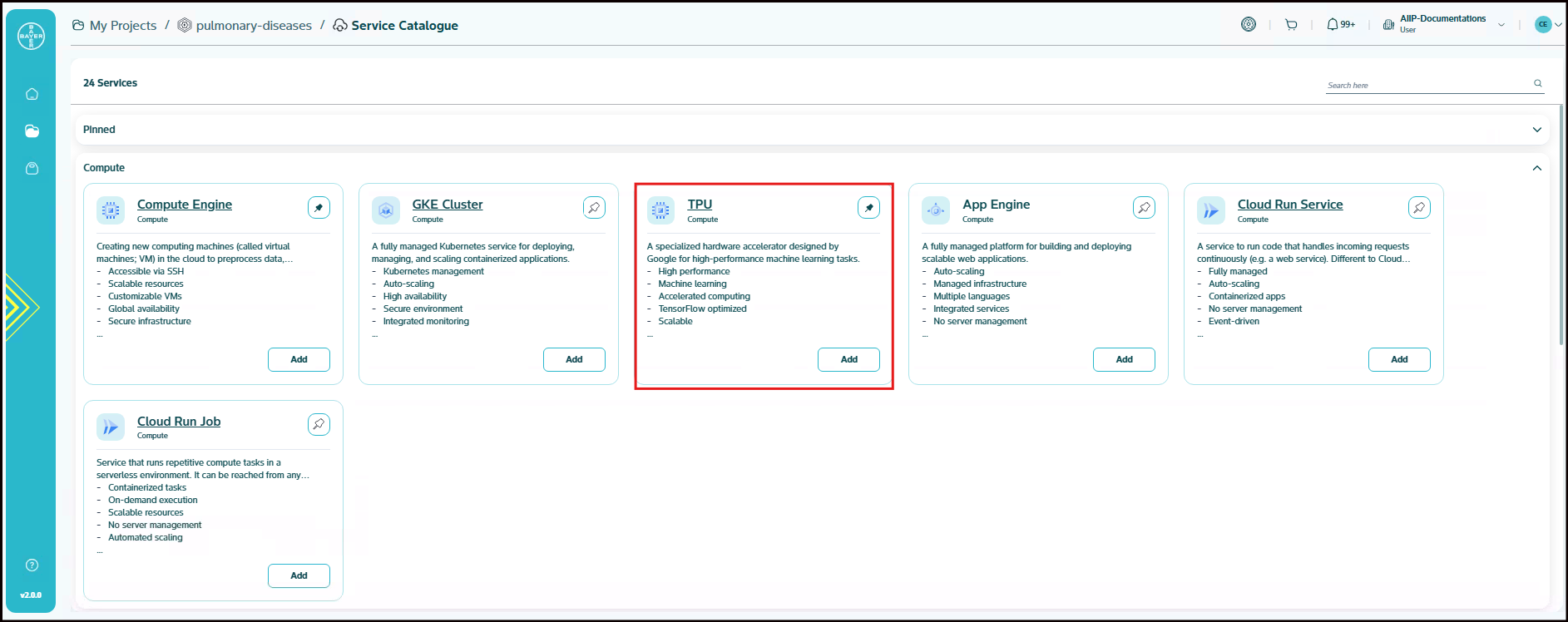

Compute: Includes services used to run applications and manage computing workloads. This group includes Compute Engine, GKE Cluster, TPU, App Engine, Cloud Run Service, and Cloud Run Job.

-

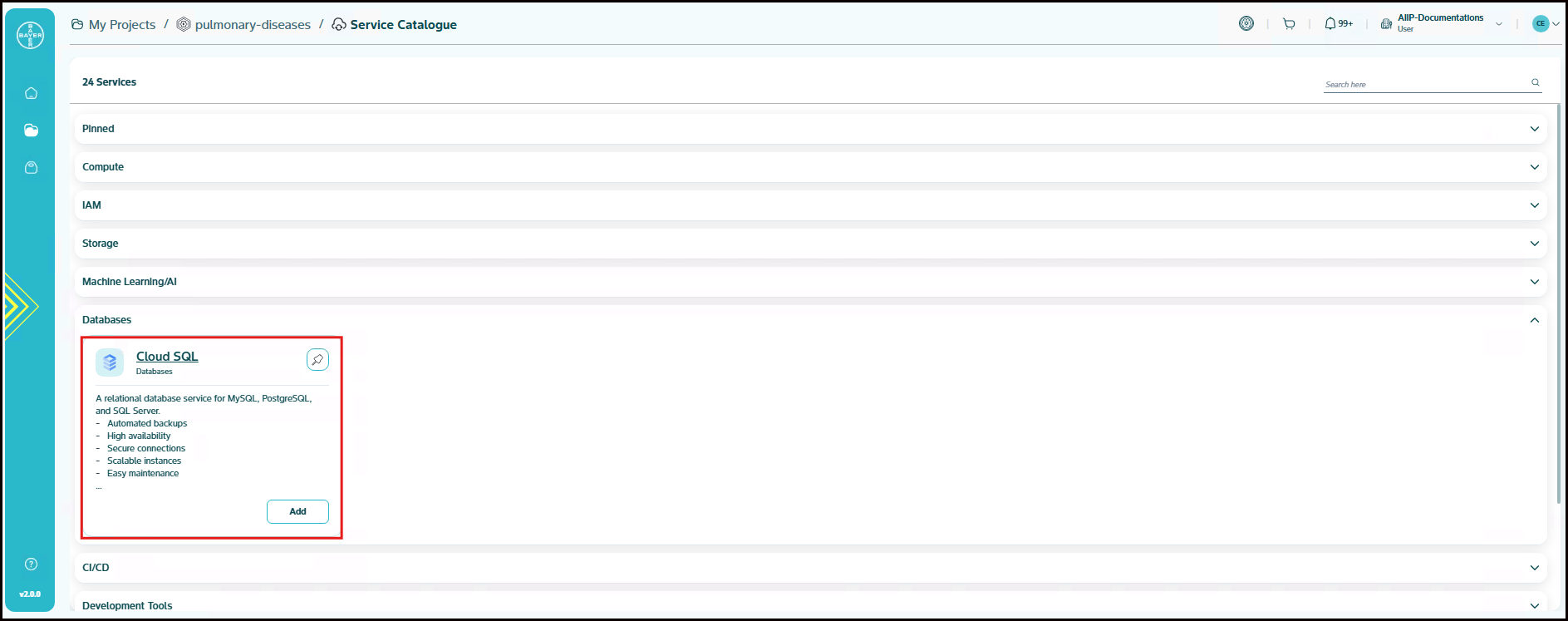

Databases: Managed database services for storing and querying structured data. This group include Cloud SQL.

-

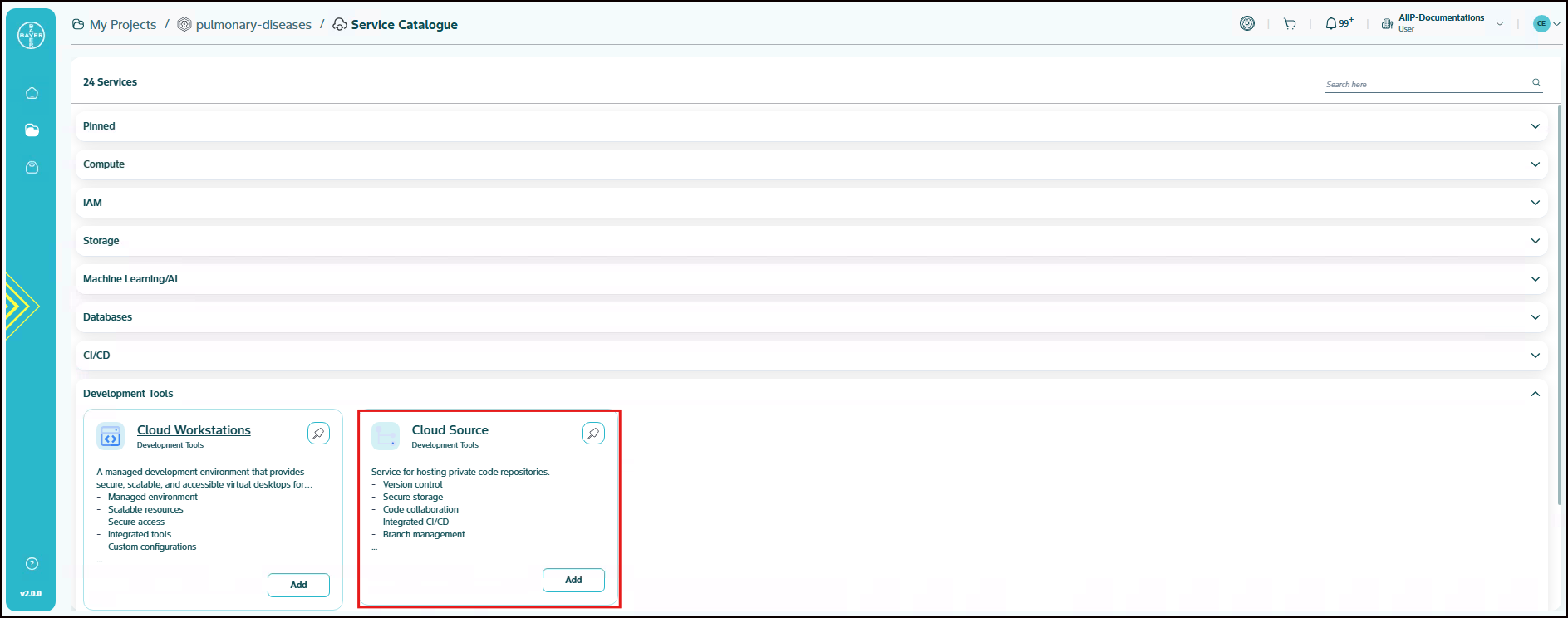

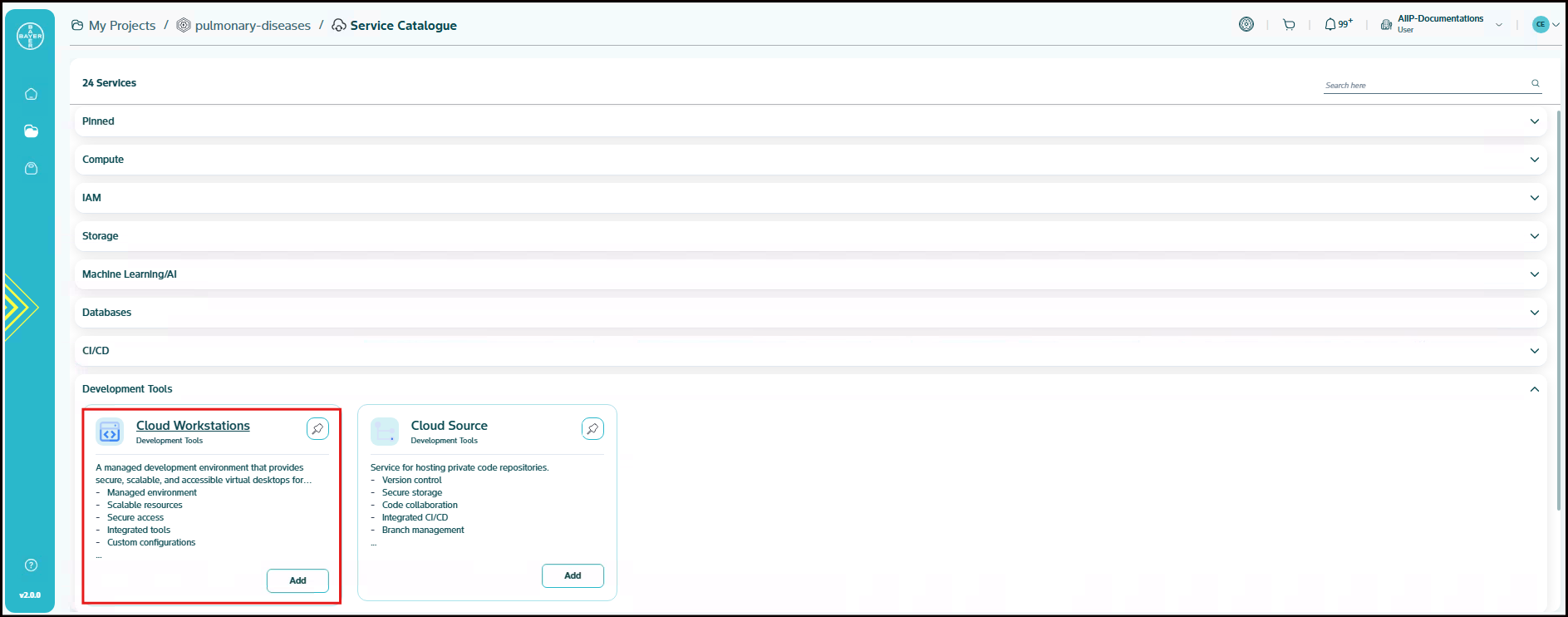

Development Tools: Tools to support code development and source management. This group includes Cloud Workstations and Cloud Source.

-

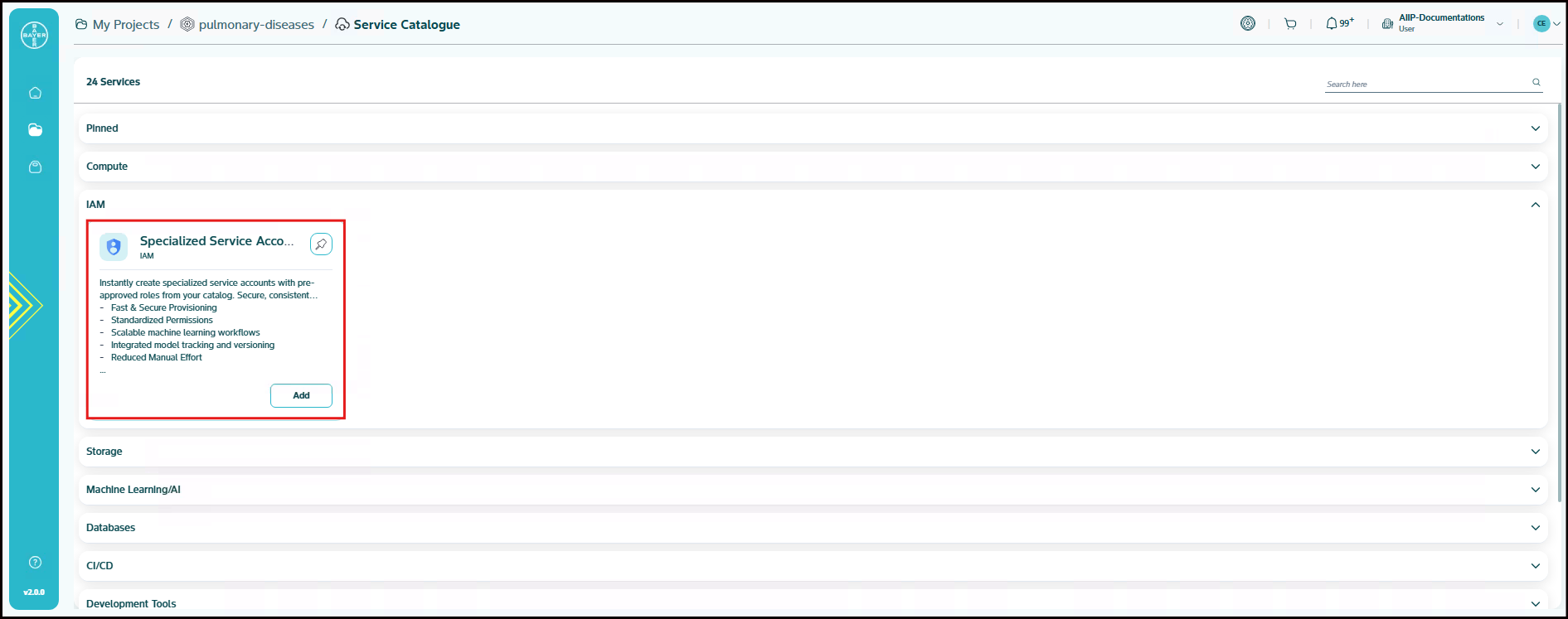

IAM: Tools to manage user identities and control their access to platform resources. This group included Specialized Service Accounts.

-

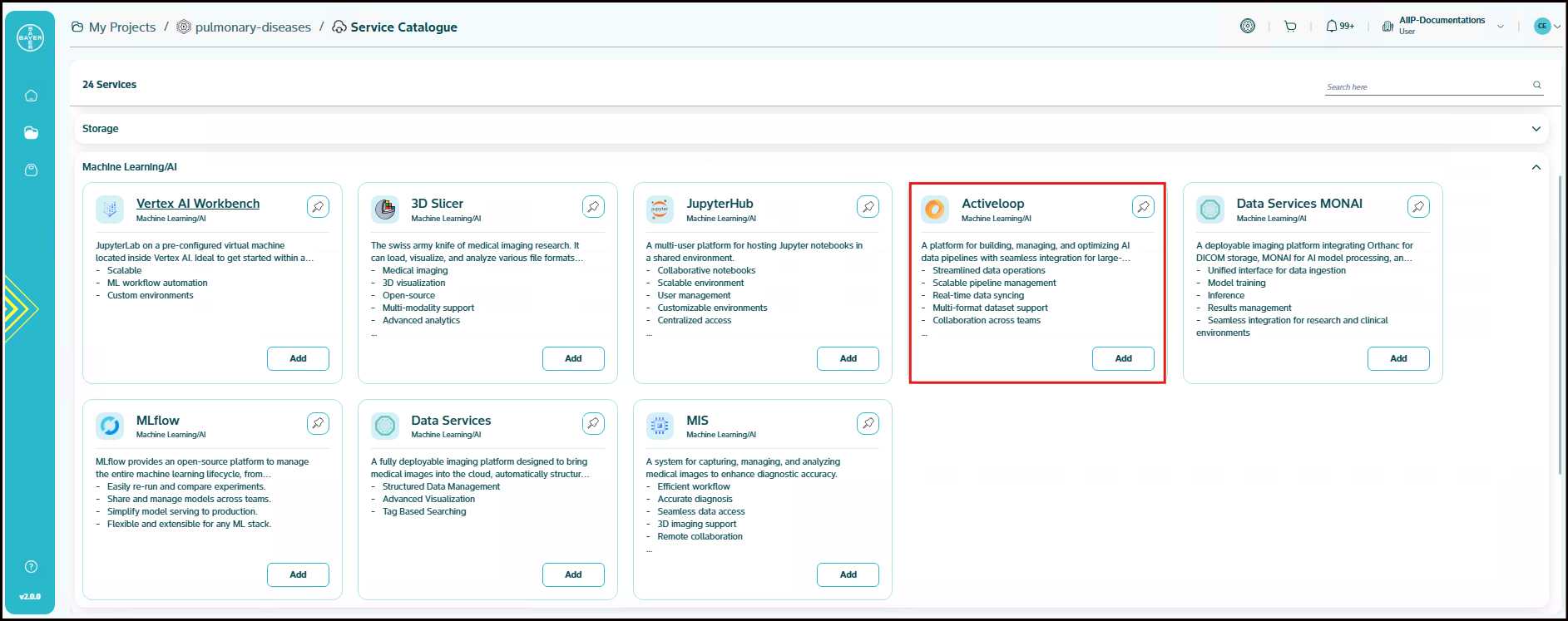

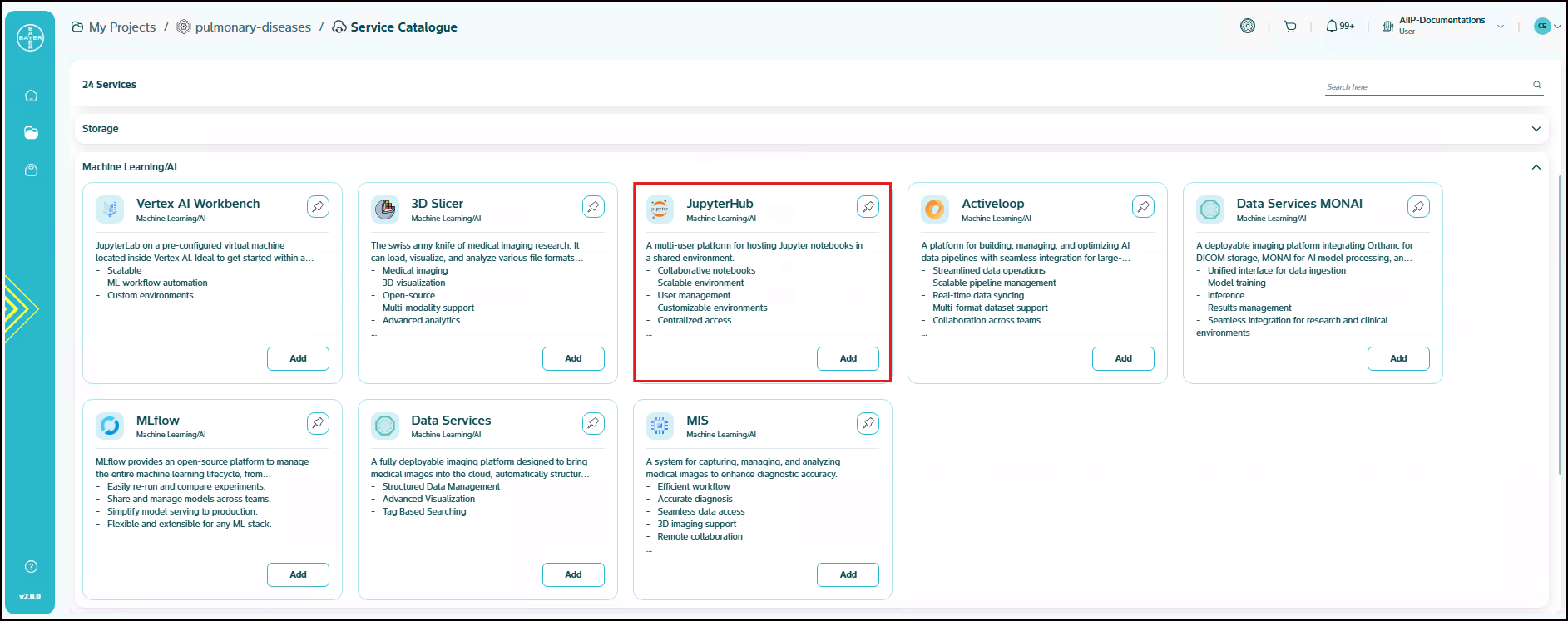

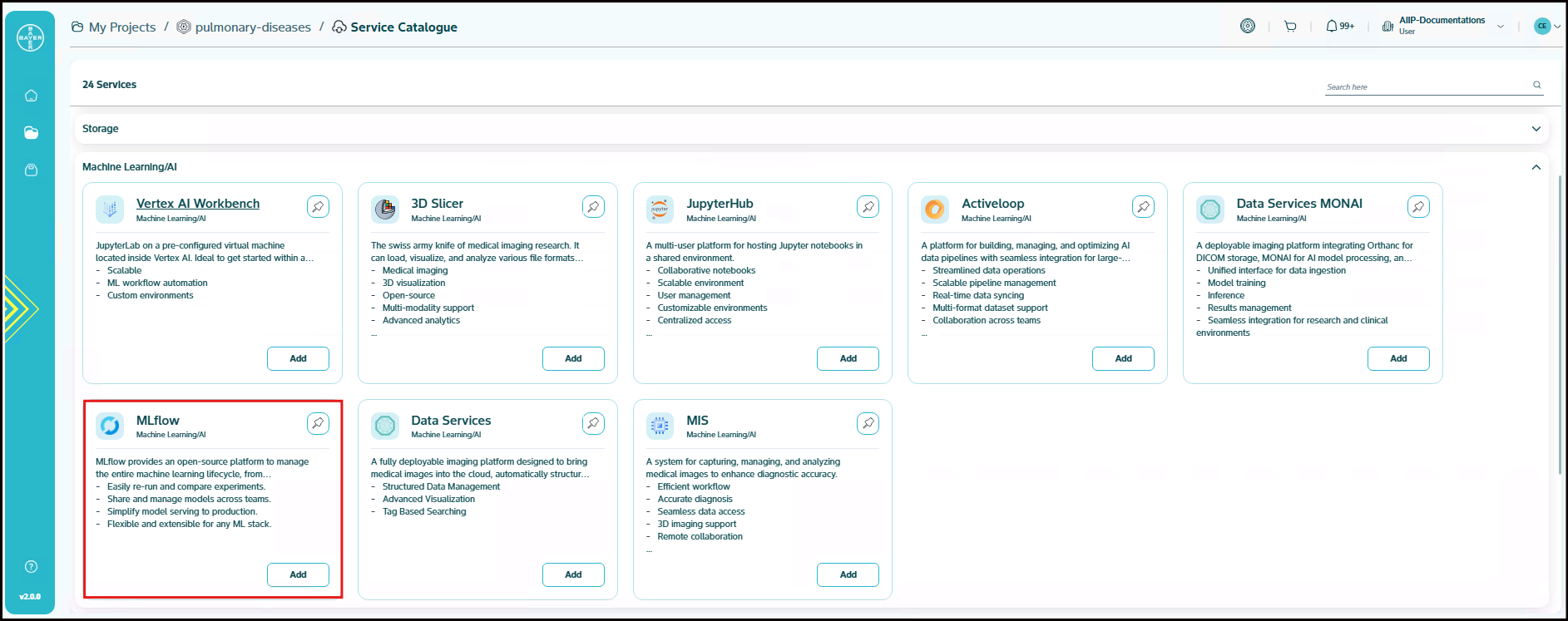

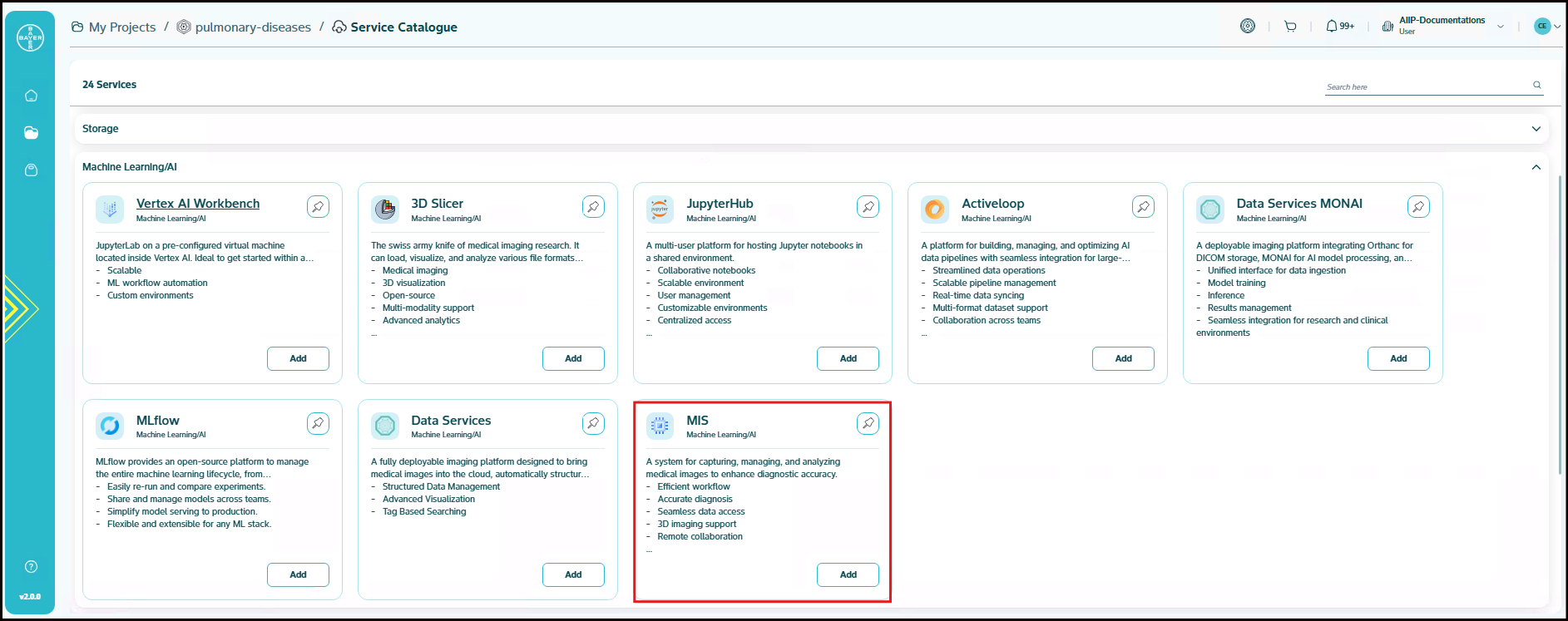

Machine Learning/AI: Tools and platforms supporting AI/ML model development, training, and visualization. This group includes Vertex AI Workbench, 3D Slicer, JupyterHub, MIS, Activeloop, Data service for MONAI, Deployable Package for Data Services and MLflow.

-

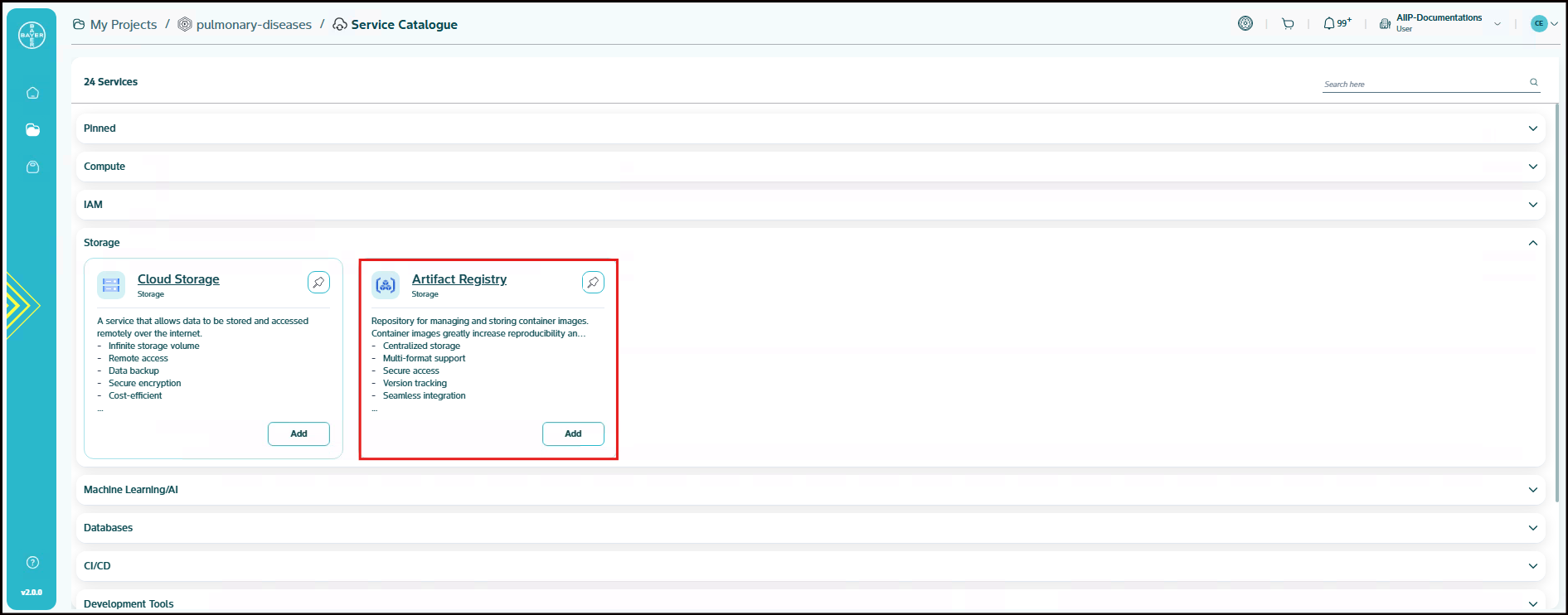

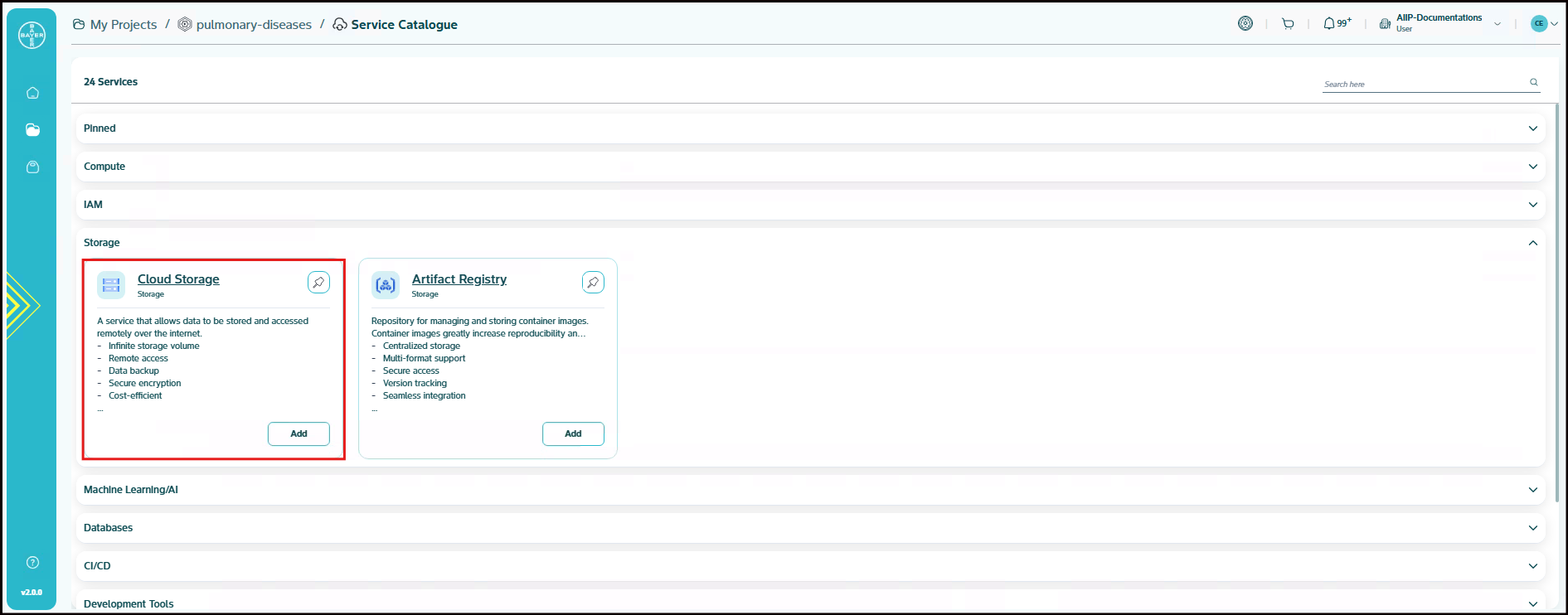

Storage: Services for storing and managing artifacts and data. This group includes Cloud Storage and Artifact Registry.

-

Workflows: Services for orchestrating and managing data pipelines or workflows. This group includes Cloud Composer.

Users are free to provision services from any category based on their project needs and use cases.

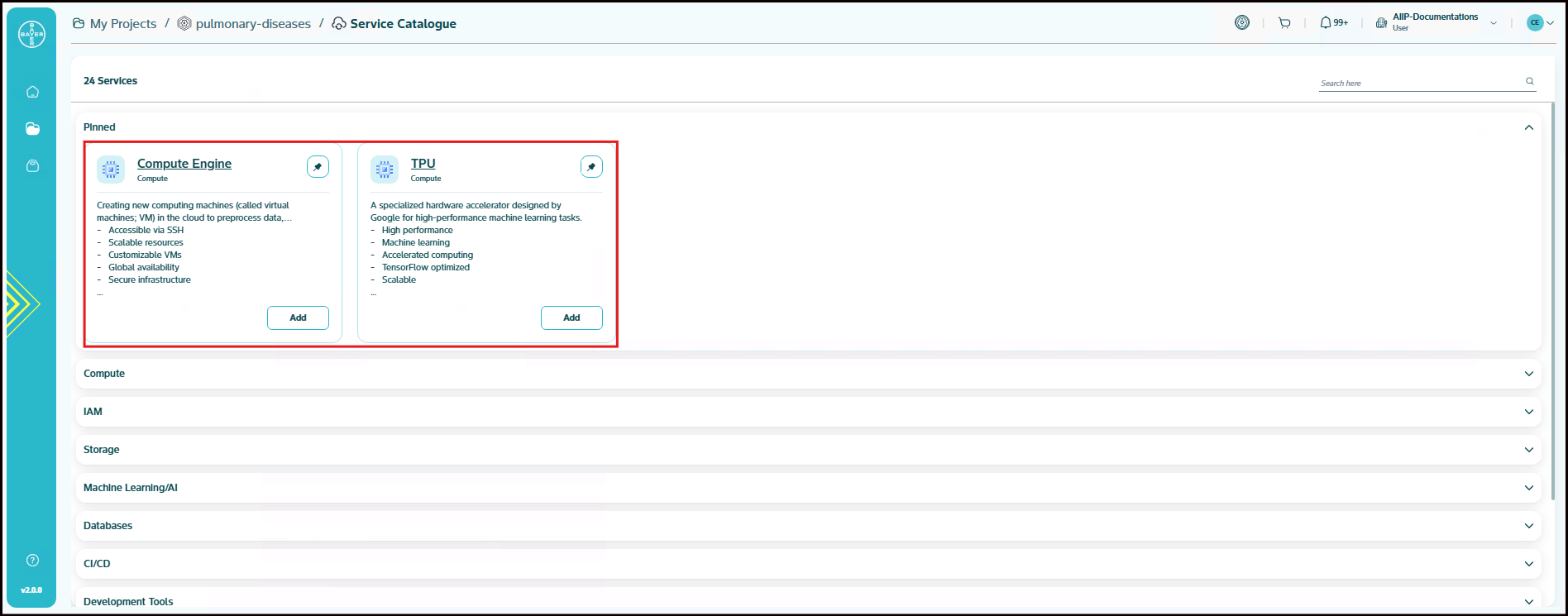

Pinned Services

To improve accessibility and streamline your workflows, the platform lets you pin your favorite services to the top of the service catalogue page.

You can pin a service by clicking the pin icon located on the top-right corner of the service tile. Once pinned, the selected services will appear under a dedicated "Pinned" section at the top of the catalogue page.

Pinned services will always remain at the top, making it easier to access frequently used options without scrolling through the full list. To unpin a service, simply click the pin icon again.

This feature is especially helpful for users who regularly work with a specific set of services and want quicker access.

Available services and their configurations

This section explains the available services and their configuration parameters. It also shows you how to access these services from the console.

API

Cloud Healthcare API

The Cloud Healthcare API is a Google Cloud Platform service that enables healthcare organizations to store, manage, and exchange healthcare data in the cloud. It acts as a bridge between existing healthcare systems and applications built on Google Cloud Platform, supporting industry-standard data formats like FHIR, HL7v2, and DICOM. This allows for the integration of healthcare data with other Google Cloud Platform services for analysis, machine learning, and application development.
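To make the FHIR support more concrete, the sketch below builds the REST URL of a FHIR resource from the Cloud Healthcare API resource hierarchy (project, location, dataset, FHIR store). The helper names and all identifiers in the usage comment are hypothetical placeholders; the snippet only constructs the URL and does not authenticate or send a request.

```python
# Minimal sketch: build Cloud Healthcare API v1 URLs for FHIR resources.
# Function names and example identifiers are illustrative assumptions.
BASE_URL = "https://healthcare.googleapis.com/v1"


def fhir_store_path(project: str, location: str, dataset: str, fhir_store: str) -> str:
    """Return the resource name of a FHIR store."""
    return (
        f"projects/{project}/locations/{location}"
        f"/datasets/{dataset}/fhirStores/{fhir_store}"
    )


def fhir_resource_url(project, location, dataset, fhir_store,
                      resource_type, resource_id=None) -> str:
    """Return the URL of a FHIR resource type, or of one resource if an ID is given."""
    url = (
        f"{BASE_URL}/{fhir_store_path(project, location, dataset, fhir_store)}"
        f"/fhir/{resource_type}"
    )
    if resource_id:
        url += f"/{resource_id}"
    return url


# Example with placeholder values:
# fhir_resource_url("my-project", "europe-west4", "my-dataset", "my-store", "Patient")
```

Requests to such a URL need an OAuth 2.0 access token for an identity that has the appropriate Healthcare IAM permissions on the store.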

References

Enabling API

CI/CD

Cloud Build

A service for automating the building, testing and deployment of code. Cloud Build executes your builds on Google Cloud infrastructure. It can import source code from Cloud Storage, GitLab, GitHub, or Bitbucket, execute a build to your specifications, and produce artifacts such as Docker containers or Java archives. Cloud Build executes a build as a series of build steps, where each build step runs in a Docker container. A build step can do anything that can be done from a container, irrespective of the environment. To perform your tasks, you can either use the supported build steps provided by Cloud Build or write your own build steps.

- Continuous integration

- Custom workflows

- Multi-language support

- Fast builds

- Secure pipelines

- Scalable resources

- Artifact management

Cloud Build Parameters

| Parameter | Description | Mandatory |
|---|---|---|
| Resource Name | Name of the Cloud Build resource | Yes |
| Region | Region where the Cloud Build resource is located | Yes |
| Deploy To | Target location or service for deployment | Yes |
| Container Image URL | URL of the container image used in the build process | Yes |

Default Configurations

- Pub/Sub Trigger: We configure a Pub/Sub topic that triggers the Cloud Build process. By default, this topic is set to gcr, meaning that Cloud Build will be triggered by messages published to this topic.

- Substitution Variables: Substitution variables allow you to pass dynamic values during the build process. For example:

  - _ACTION: Represents the action specified in the incoming Pub/Sub message (e.g., a build, tag, or deploy action).

  - _IMAGE_TAG: Captures the image tag provided in the Pub/Sub message, ensuring the correct image version is used during the build process. Users can update the build pipeline according to their container image if required.

- Filter: By default, the filter is set to match on the image tag (`_IMAGE_TAG.matches("")`), but you can modify it to include more specific conditions based on your needs. The default settings ensure that new image versions are deployed automatically as and when they are pushed to Artifact Registry.

- Build Step Image: The gcloud image used during the build process is defined as `"gcr.io/cloud-builders/gcloud"`. This image contains the necessary tools to execute Google Cloud commands, including managing Cloud Run services and jobs.

- Logging Options: Logging options determine where Cloud Build logs are stored. The default setting is `"CLOUD_LOGGING_ONLY"`, ensuring all logs are sent to Google Cloud Logging for easy monitoring and troubleshooting.

- Build Timeout: The timeout for the Cloud Build process is set to 540 seconds. If the build exceeds this time, it will be automatically terminated. This can be adjusted if longer build times are required for your specific workloads.

- Labels: Labels help organize and track your build resources. These labels can be applied to the virtual machines (VMs) that are created as part of the Cloud Build process, making it easier to manage and classify resources. You can specify custom labels such as project ownership, data classification, and more.

- Custom Roles for Cloud Build: A custom role called "Cloud Run Deployer" is configured with a set of permissions required to manage Cloud Run services and jobs. This role includes capabilities like:

  - Updating jobs, listing locations, managing revisions, accessing services, and creating logs.

  - Acting as a service account, allowing Cloud Build to securely interact with other Google Cloud services.
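The interplay of the Pub/Sub trigger, the substitution variables, and the filter can be modeled locally. The sketch below is a simplified simulation, not the platform's trigger definition: it assumes the message published to the gcr topic is a JSON object with `action` and `tag` fields, that `_ACTION` and `_IMAGE_TAG` are bound to those fields, and that the filter's `matches` behaves like an unanchored regular-expression search. All function names are hypothetical.

```python
import base64
import json
import re


def decode_registry_message(data_b64: str) -> dict:
    """Decode the base64-encoded JSON payload of a Pub/Sub message."""
    return json.loads(base64.b64decode(data_b64))


def bind_substitutions(message: dict) -> dict:
    """Map message fields to the substitution variables (assumed bindings)."""
    return {
        "_ACTION": message.get("action", ""),
        "_IMAGE_TAG": message.get("tag", ""),
    }


def filter_passes(substitutions: dict, tag_pattern: str = "") -> bool:
    """Model `_IMAGE_TAG.matches(pattern)` as an unanchored regex search.

    With the default empty pattern every tag matches, so every push
    would start a build.
    """
    return re.search(tag_pattern, substitutions["_IMAGE_TAG"]) is not None
```

Under these assumptions, tightening the filter to a pattern such as `:release-` would restrict automated deployments to images whose tag contains that marker.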

References

Cloud Build Overview

Compute

App Engine

A fully managed platform for building and deploying scalable web applications. Google App Engine is a fully managed, serverless platform designed to build and deploy scalable web applications. It abstracts the infrastructure management, allowing developers to focus purely on application development. App Engine automatically handles tasks such as provisioning servers, managing scaling, load balancing and applying updates, making it a powerful solution for building applications that can scale seamlessly based on demand.

- Auto-scaling

- Managed infrastructure

- Multiple languages

- Integrated services

- No server management

- Pay-per-use

- Version control

App Engine Parameters

The following are key parameters that must be configured when creating an App Engine instance:

| Parameter | Description | Mandatory |
|---|---|---|
| App Engine Name | Name of the App Engine service | Yes |
| Region | Region where the App Engine is deployed | Yes |
| CPU | CPU configuration for the App Engine | Yes |
| Memory | Memory allocated for the App Engine | Yes |
| Version ID | Version identifier for the App Engine | Yes |
| Container Image URL | URL of the container image used for deployment | Yes |
| Port | Port number used by the App Engine | Yes |
| Disk Size | Size of the disk allocated to the App Engine (in GB) | Yes |
| Service Account | Service account associated with the App Engine | No |

Default Configurations

- Runtime Configuration:

  - Runtime: The service will be deployed on App Engine with a customizable runtime environment. By default, the runtime service name for the App Engine application is set to custom.

- Scaling Settings:

  - Cooldown Period: A cooldown period of 120 seconds will be applied between scaling operations. This means the system will wait for 120 seconds before attempting to scale up or down, ensuring stable performance and avoiding rapid changes.

  - Target CPU Utilization: The target CPU utilization for automatic scaling is set to 0.5 by default, meaning scaling actions will trigger when CPU usage exceeds or falls below 50%. This helps maintain optimal performance and cost efficiency.

- Traffic Splitting:

  - Traffic Management: Using the `google_app_engine_service_split_traffic` resource, we manage and split traffic across different versions of your App Engine service. This allows you to direct a percentage of traffic to different versions without downtime or disruptions. By default, 100% of traffic is routed to the latest version of the application.
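To build intuition for how the cooldown period and the target CPU utilization interact, the sketch below uses a simplified proportional model: the instance count is scaled by the ratio of observed to target utilization, and no change is made while the cooldown is still running. This is an assumption for illustration only and not App Engine's actual autoscaling algorithm; the function names are hypothetical.

```python
import math

TARGET_CPU_UTILIZATION = 0.5  # default target from the scaling settings
COOLDOWN_SECONDS = 120        # default cooldown between scaling operations


def desired_instances(current_instances: int, observed_cpu: float,
                      target: float = TARGET_CPU_UTILIZATION) -> int:
    """Scale the instance count so average CPU moves toward the target."""
    return max(1, math.ceil(current_instances * observed_cpu / target))


def next_instance_count(current_instances: int, observed_cpu: float,
                        seconds_since_last_scale: float) -> int:
    """Apply the proportional rule only once the cooldown has elapsed."""
    if seconds_since_last_scale < COOLDOWN_SECONDS:
        return current_instances
    return desired_instances(current_instances, observed_cpu)
```

In this model, four instances running at 80% CPU would grow to seven once the cooldown has passed, while the same load observed 60 seconds after the previous scaling operation would leave the count unchanged.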

Note

Cloud Run is the latest evolution of Google Cloud Serverless, building on the experience of running App Engine for more than a decade. Cloud Run runs on much of the same infrastructure as App Engine standard environment, so there are many similarities between these two platforms. Cloud Run is designed to improve upon the App Engine experience, incorporating many of the best features of both App Engine standard environment and App Engine flexible environment. Hence Cloud Run would be our recommendation instead of using App Engine.

References

Cloud Run Job

A service that runs repetitive compute tasks in a serverless environment. It can be reached from any machine in the project and is well suited to centralising certain functionality (e.g. data conversion) within the development project. Cloud Run is a managed compute platform that enables you to run containers that are invocable via requests or events. Cloud Run is serverless: it abstracts away all infrastructure management, so you can focus on building applications. A Cloud Run job runs a container to completion without a server: it runs only its own tasks and exits when they are finished.

- Containerized tasks

- On-demand execution

- Scalable resources

- No server management

- Automated scaling

- Event-driven

- Pay-per-use

Cloud Run Parameters

| Parameter | Description | Mandatory |
|---|---|---|
| Job Name | Name of the Cloud Run Job | Yes |
| Region | Region where the job will be executed | Yes |
| No of Tasks | Number of tasks to run in the job | Yes |
| CPU | CPU allocation for each task | Yes |
| Memory | Memory allocation for each task | Yes |
| Service Account | Service account associated with the job | No |
| Container Image URL | URL of the container image used for the job | Yes |
| Parallelism | Number of tasks to run in parallel | Yes |
| Task Timeout | Timeout for each task | Yes |
| Time Unit | Unit of time for the task timeout (e.g., seconds, minutes) | Yes |
| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |

Default Configurations

- Variables:

- max_retries: Defines the maximum number of retries allowed for the job in case of failures. The default value is set to 3.

- command: Specifies the command to be run inside the container. The default value is null, meaning no specific command is set by default, and the container will use its own startup process.
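When a job is configured with more than one task, each task typically needs to know which slice of the work it owns. Cloud Run sets the `CLOUD_RUN_TASK_INDEX` and `CLOUD_RUN_TASK_COUNT` environment variables in every task container; the sketch below uses them to shard a work list. The file names and the `task_shard` helper are hypothetical, and the "conversion" step is only a print statement.

```python
import os


def task_shard(items: list, task_index: int, task_count: int) -> list:
    """Return every task_count-th item, starting at task_index."""
    return items[task_index::task_count]


def main() -> None:
    # Both variables are provided by Cloud Run for job tasks; the defaults
    # let the script run locally as a single task.
    task_index = int(os.environ.get("CLOUD_RUN_TASK_INDEX", "0"))
    task_count = int(os.environ.get("CLOUD_RUN_TASK_COUNT", "1"))

    # Hypothetical work list; a real job might list objects in a bucket.
    files = [f"scan-{n:03d}.dcm" for n in range(10)]

    for name in task_shard(files, task_index, task_count):
        print(f"task {task_index + 1} of {task_count}: converting {name}")


if __name__ == "__main__":
    main()
```

With this pattern, the No of Tasks parameter decides how many shards exist, Parallelism decides how many of them run at once, and a task that fails is retried up to max_retries times with the same index.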

References

Cloud Run Jobs Overview

Cloud Run Service

A service that runs code handling incoming requests continuously (e.g. a web service). This differs from a Cloud Run Job, which runs to completion each time it is triggered.

- Fully managed

- Auto-scaling

- Containerized apps

- No server management

- Event-driven

- Pay-per-use

- Fast deployment

Cloud Run Services Parameters

| Parameter | Description | Mandatory |
|---|---|---|
| Cloud Run Name | Name of the Cloud Run service | Yes |
| Region | Region where the Cloud Run service is located | Yes |
| CPU | CPU allocation for the service | Yes |
| Memory | Memory allocation for the service | Yes |
| Container Image URL | URL of the container image used for the service | Yes |
| Port | Port number used by the Cloud Run service | Yes |
| Service Account | Service account associated with the service | No |
| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |
| Authentication Configuration | Set Cloud Run Service's Security | No |

Default Configurations

- Module: Serverless Load Balancer

  The `cloudrun_serverless_loadbalancer` module is configured to enable the seamless integration of a load balancer with the Cloud Run service. This ensures that incoming traffic is efficiently distributed across multiple instances, improving the availability and reliability of the service.

- Security Policy:

  The `security_policy` variable is used to apply a Cloud Armor Security Policy to the Cloud Run service. Cloud Armor helps protect applications from security threats like DDoS attacks. The default value is set to an empty string, meaning no specific policy is enforced unless provided.

- Authentication Configuration:

  Configure the security posture of the Cloud Run service by defining its access control model. These settings govern two distinct but related security layers:

  - Network Ingress: Determines the network path for incoming traffic. Either expose the service publicly or restrict it to internal traffic originating from within the VPC, typically routed through a load balancer.

  - Identity Authentication: Specifies whether the service requires cryptographic proof of a caller's identity. When enabled, it mandates that every request includes a valid, signed ID token (JWT) in the Authorization header, which is then validated against IAM permissions.

  It has three categories:

- Internal (Authenticated): This setting restricts access to the internal VPC network, blocking all public traffic. Additionally, every request must be authenticated with a valid ID token from a user or service account that has been granted permission to invoke the service. This makes the Cloud Run service an internal-only tool with an added layer of security: even internal applications must provide a password or "key" to prove they have permission to use it.

  - Endpoint: The GCP-generated Cloud Run URL, accessible only from within your VPC network.

  - When to use: For secure internal microservices or backend APIs that require caller verification.

- Public (Authenticated): This makes the Cloud Run service accessible from the public internet but secures it by requiring every request to be authenticated. This is ideal for creating secure public APIs that should only be accessed by specific users or other authorized applications.

  - Endpoint: The GCP-generated Cloud Run URL, accessible over the internet and protected by IAM authentication.

  - When to use: For secure public APIs where you want Google to handle authentication, not your application code.

- Public (Unauthenticated with Custom Domain): This configuration exposes the Cloud Run service to the public internet via an External HTTP(S) Load Balancer mapped to the custom domain. The service is configured to allow unauthenticated invocations (equivalent to granting the run.invoker role to allUsers), meaning it does not check requests for an IAM identity token.

  - Endpoint: Your custom domain's URL (e.g., app.your-company.com), served by an external HTTPS Load Balancer.

  - When to use: For public websites or APIs where your application code is responsible for managing its own user authentication.
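For the two authenticated categories, a caller has to attach an ID token to each request. The sketch below shows one way this might look from a workload running on Google Cloud, using only the standard library: it asks the metadata server for an ID token whose audience is the service URL, then sends that token as a Bearer token in the Authorization header. The function names are hypothetical, the metadata server is reachable only from within Google Cloud compute environments, and the calling identity is assumed to hold the run.invoker role on the service.

```python
import urllib.parse
import urllib.request

# Metadata server endpoint that issues ID tokens for the attached service account.
METADATA_IDENTITY_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "instance/service-accounts/default/identity"
)


def build_token_request(audience: str) -> urllib.request.Request:
    """Build the metadata-server request for an ID token scoped to `audience`."""
    query = urllib.parse.quote(audience, safe="")
    return urllib.request.Request(
        f"{METADATA_IDENTITY_URL}?audience={query}",
        headers={"Metadata-Flavor": "Google"},
    )


def build_service_request(service_url: str, id_token: str) -> urllib.request.Request:
    """Build a request to the Cloud Run service carrying the ID token."""
    return urllib.request.Request(
        service_url, headers={"Authorization": f"Bearer {id_token}"}
    )


def call_authenticated_service(service_url: str) -> bytes:
    """Fetch an ID token and call the service (works only on Google Cloud)."""
    with urllib.request.urlopen(build_token_request(service_url)) as resp:
        id_token = resp.read().decode()
    with urllib.request.urlopen(build_service_request(service_url, id_token)) as resp:
        return resp.read()
```

For the Internal (Authenticated) category, the caller additionally has to sit on a network path that the ingress setting allows; the token alone is not sufficient.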

References

Cloud Run Service Overview

Host Cloud Run Service behind Load Balancer

Compute Engine

Create new computing machines (called virtual machines or VMs) in the cloud to preprocess data, develop code, host smaller apps with a web frontend (e.g. TensorBoard or a custom image viewer), and so on.

Google Compute Engine allows you to create and run virtual machines (VMs) on Google’s powerful infrastructure. Think of a virtual machine as a computer that runs entirely in the cloud—there’s no need to buy or maintain any physical hardware. You can start with just one virtual machine or scale up to thousands, depending on your needs. The best part? You only pay for what you use, with no upfront costs.

- Accessible via SSH

- Scalable resources

- Customizable VMs

- Global availability

- Secure infrastructure

- Automated backups

Compute Engine Parameters

| Parameter | Description | Mandatory |
|---|---|---|
| Instance Name | Name of the virtual machine | Yes |
| Region | Region where the VM will be deployed | Yes |
| Zone | Zone within the selected region | Yes |
| GPU Type | Type of GPU attached to the VM | Yes |
| GPU Count | Number of GPUs attached to the VM | Yes |
| Machine Type | Type of machine instance | Yes |
| Install Croissant | If checked, it will install Croissant Library | No |
| Boot Disk Image | Image used for the boot disk | Yes |
| Boot Disk Size | Size of the boot disk (in GB) | Yes |
| Boot Disk Type | Type of boot disk (e.g., SSD, HDD) | Yes |
| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |
| Service Account | Service account associated with the VM | No |

Google Cloud Platform:

- Users can access the instances over SSH, either via browser SSH by clicking the `SSH` button available on the Compute Engine VM console page, or via the gcloud SDK.

- Users can use IAP Desktop or an IAP tunnel to access their VMs.

For more details and steps to click here.
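As a sketch of the gcloud SDK route, the helper below assembles a `gcloud compute ssh` command that tunnels through IAP, which suits instances without an external IP. The instance, zone, and project values in the usage comment are placeholders, and `iap_ssh_command` is a hypothetical helper; the snippet only builds and prints the command rather than executing it.

```python
import shlex


def iap_ssh_command(instance: str, zone: str, project: str = None) -> list:
    """Build the argument list for an SSH session tunnelled through IAP."""
    command = [
        "gcloud", "compute", "ssh", instance,
        f"--zone={zone}",
        "--tunnel-through-iap",
    ]
    if project:
        command.append(f"--project={project}")
    return command


# Example with placeholder names; pass the list to subprocess.run() to execute it.
print(shlex.join(iap_ssh_command("my-vm", "europe-west4-a", "my-project")))
```

Running the resulting command requires an installed and authenticated gcloud SDK and IAM permissions for IAP-secured tunnelling on the instance.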

Default Configurations

Network Tags:

- allow-iap: This network tag ensures that Identity-Aware Proxy (IAP) is enabled, which helps secure your VM instances by requiring authentication and authorization before access. It is required to SSH into the instance. If not applied, the instance will not allow SSH traffic.

Metadata:

- install-nvidia-driver: Automatically installs NVIDIA drivers if GPU support is needed for the VM, ensuring optimized GPU performance.

Default Settings:

Several default settings are applied to ensure the VMs are secure, manageable, and meet operational standards:

- VM with Internal IP Only: By default, the VM is configured with an internal IP, providing connectivity within the VPC network while keeping the instance isolated from public internet traffic.

- Service Account: Each VM instance is automatically attached to a service account, which provides the necessary permissions to access Google Cloud resources securely. If the Service Account parameter is left blank during provisioning, the instance uses a custom service account created by the platform. If the user provides an existing service account, that service account is used instead.

- KMS Key Integration: The VM is integrated with Cloud KMS (Key Management Service) to encrypt sensitive data and ensure secure handling of any confidential information.

- Machine Configurations: While we work on introducing more machine configurations for compute instances, users can opt for a different configuration by provisioning the service with a basic configuration from our platform and then editing the instance configuration in the Compute Engine section of the Google Cloud Platform console.

- Croissant Library: Croissant is a high-level format for machine learning datasets that combines metadata, resource file descriptions, data structure, and default ML semantics into a single file; it works with existing datasets to make them easier to find, use, and support with tools. It can be installed by enabling the 'Install Croissant' checkbox; the startup script then installs it under the '/opt/conda/' path.

Compliance requirements for Compute Engine configurations:

- Ensure that no external IP is attached to the instances. If you need one, raise an exception request with the support team.

- It is recommended to use CMEK or customer-supplied encryption keys for disks when working with regulated projects.

- Ensure that the effective labels on the instances are not modified.
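These requirements can be checked against an instance description, for example the JSON returned by the Compute Engine API for an instance. The sketch below is a hypothetical checker, not a platform tool: it treats any `accessConfigs` entry on a network interface as an external IP, looks for the allow-iap network tag, and compares labels against an expected set that you would supply yourself.

```python
def has_external_ip(instance: dict) -> bool:
    """An interface with accessConfigs has an external IP configured."""
    return any(
        nic.get("accessConfigs") for nic in instance.get("networkInterfaces", [])
    )


def compliance_findings(instance: dict, expected_labels: dict) -> list:
    """Return human-readable findings; an empty list means no issue was found."""
    findings = []
    if has_external_ip(instance):
        findings.append("external IP attached")
    if "allow-iap" not in instance.get("tags", {}).get("items", []):
        findings.append("allow-iap network tag missing")
    labels = instance.get("labels", {})
    changed = sorted(k for k, v in expected_labels.items() if labels.get(k) != v)
    if changed:
        findings.append("labels modified: " + ", ".join(changed))
    return findings
```

The expected labels are an input here because the sketch has no way to know which labels the platform applied at provisioning time.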

References

Edit Compute Engine Machine Type

GKE Cluster

Google Kubernetes Engine (GKE) is a fully managed Kubernetes service for deploying, managing and scaling containerized applications. Kubernetes, the leading container orchestration platform, automates the deployment, scaling and operation of application containers across clusters of hosts. With GKE, you can benefit from Google Cloud’s security, reliability and scalability while focusing on your applications without managing the underlying infrastructure.

- Kubernetes management

- Auto-scaling

- High availability

- Secure environment

- Integrated monitoring

- Easy upgrades

- Custom configurations

GKE Parameters

| Parameter | Description | Mandatory |
|---|---|---|
| GKE Cluster Name | Name of the GKE Cluster | Yes |
| Region | Region where the GKE Cluster is located | Yes |
| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |
| Service Account | Service account associated with the GKE Cluster | No |
| IP Range Pods | IP range for the pods in the cluster | No |
| IP Range Services | IP range for the services in the cluster | No |
| Auto Scaling | Auto scaling configuration for the cluster | No |
| Primary Node Pool Name | Name of the primary node pool | Yes |
| Primary Node Pool Minimum Count | Minimum number of nodes in the primary node pool | Yes |
| Primary Node Pool Maximum Count | Maximum number of nodes in the primary node pool | No |
| Primary Node Pool Machine Type | Type of machine instances in the primary node pool | No |
| Enable Extra Node Pool | Whether to enable an extra node pool | No |
| Secondary Node Pool Name | Name of the secondary node pool | No |
| Secondary Node Pool Minimum Count | Minimum number of nodes in the secondary node pool | No |
| Secondary Node Pool Maximum Count | Maximum number of nodes in the secondary node pool | No |
| Secondary Node Pool Machine Type | Type of machine instances in the secondary node pool | No |

Default Configurations

- Labels:

  - cluster_name: A label used to identify the GKE cluster, making it easier to organize and manage multiple clusters within your environment.

  - node_pool: This label tracks the specific node pool to which the nodes belong, providing better visibility and management over different node pools.

- Master IPv4 CIDR Block:

  - master_ipv4_cidr_block: Specifies the IP range in CIDR notation for the GKE master network. This helps in defining network isolation for the GKE master nodes.

- Horizontal Pod Autoscaling:

  - horizontal_pod_autoscaling: This configuration enables horizontal pod autoscaling in the GKE cluster, allowing pods to scale automatically based on CPU usage or other metrics. The default is true to optimize resource utilization.

- Maintenance Recurrence:

  - maintenance_recurrence: Defines the frequency of the recurring maintenance window in RFC5545 format. This helps automate regular cluster maintenance like patching and updates.

- Remove Default Node Pool:

  - remove_default_node_pool: If set to true, this will remove the default node pool during cluster setup, allowing for custom node pool configurations.

- Node Pools Labels:

  - node_pools_labels: A map of maps that allows you to specify custom labels for nodes in different node pools, enhancing node management and classification.

- Google Compute Engine Persistent Disk CSI Driver:

  - gce_pd_csi_driver: Enables the Google Compute Engine Persistent Disk Container Storage Interface (CSI) Driver. This allows Kubernetes workloads to dynamically provision and manage persistent disks. By default, this feature is enabled.

- Identity Namespace:

  - identity_namespace: Specifies the workload pool for attaching Kubernetes service accounts. The default value is set to enabled, automatically configuring the project-based pool (`[project_id].svc.id.goog`), improving security and access control.
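With the project-based workload pool enabled, a Kubernetes service account is usually linked to a Google service account in two places: an IAM binding that names the Kubernetes service account as a member of the pool, and an annotation on the Kubernetes service account itself. The sketch below only builds those two strings; the helper names and all identifiers in the usage comment are hypothetical, and granting the IAM role (typically roles/iam.workloadIdentityUser) is left to your own tooling.

```python
def workload_pool(project_id: str) -> str:
    """Return the project-based workload identity pool name."""
    return f"{project_id}.svc.id.goog"


def workload_identity_member(project_id: str, namespace: str, ksa_name: str) -> str:
    """Return the IAM member string for a Kubernetes service account."""
    return f"serviceAccount:{workload_pool(project_id)}[{namespace}/{ksa_name}]"


def ksa_annotation(gsa_email: str) -> dict:
    """Return the annotation that points a Kubernetes service account at a Google service account."""
    return {"iam.gke.io/gcp-service-account": gsa_email}


# Example with placeholder names:
# workload_identity_member("my-project", "default", "my-ksa")
# ksa_annotation("my-gsa@my-project.iam.gserviceaccount.com")
```

Pods that run under the annotated Kubernetes service account can then obtain credentials for the Google service account without exporting key files.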

References

TPU

A specialized hardware accelerator designed by Google for high-performance machine learning tasks. Tensor Processing Units (TPUs) are Google's custom-developed application-specific integrated circuits (ASICs) used to accelerate machine learning workloads. Cloud TPUs allow you to access TPUs from Compute Engine, Google Kubernetes Engine and Vertex AI.

- High performance

- Machine learning

- Accelerated computing

- TensorFlow optimized

- Scalable

- Low latency

- Cost-efficient

TPU VM Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| VM Name | Name of the TPU virtual machine | Yes |

| Region | Region where the TPU VM is located | Yes |

| Zone | Zone within the selected region | Yes |

| Accelerator Type | Type of TPU accelerator | Yes |

| Runtime Version | Version of the TPU runtime | Yes |

| TPU VM Type | Type of TPU VM | No |

| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |

| Service Account | Service account associated with the TPU VM | No |

Default Configurations

-

Tags:

- allow-iap: Enables secure access to your TPU instance using Identity-Aware Proxy (IAP), ensuring controlled access.

-

Private Instance:

- By default, no public IP is attached to the TPU instance, so the instance is not reachable from the public internet.

References

Databases

Cloud SQL

A relational database service for MySQL, PostgreSQL and SQL Server. Cloud SQL is a fully-managed database service that helps us set up, maintain, manage and administer our relational databases on Google Cloud Platform.

- Automated backups

- High availability

- Secure connections

- Scalable instances

- Easy maintenance

- Integrated monitoring

Cloud SQL Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| Cloud SQL Name | Name of the Cloud SQL instance | Yes |

| Region | Region where the Cloud SQL instance is located | Yes |

| Database Version | Version of the database | Yes |

| Edition | Edition of the database (e.g., Enterprise) | Yes |

| Database Name | Name of the database | Yes |

| Username | Username for accessing the database | Yes |

| Tier | Tier of the Cloud SQL instance (e.g., db-f1-micro) | Yes |

| Backup Location | Location for database backups | Yes |

| Disk Size | Size of the disk (in GB) | Yes |

| Disk Type | Type of disk (e.g., SSD, HDD) | Yes |

Default Configurations

-

Database Flags: We’ve introduced database-specific flags to enhance the configuration of various database engines:

- PostgreSQL:
  - log_duration: Enables logging of the duration of each statement.
  - pgaudit.log: Logs all activities for audit purposes.
  - log_hostname: Logs the hostname of the client connecting to the instance.
  - log_checkpoints: Enables checkpoint logging.
- MySQL:
  - general_log: Enables logging of general queries for troubleshooting.
  - skip_show_database: Restricts the SHOW DATABASES command to authorized users.
  - wait_timeout: Sets the wait timeout to manage inactive connections.
- SQL Server:
  - 1204: Enables deadlock logging.
  - remote_access: Allows remote connections to the SQL server.
  - remote_query_timeout: Configures the timeout for remote queries to 300 seconds.
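The flags above can be pictured as a per-engine mapping that is flattened into name/value pairs. This is a sketch only: the flag names are copied from the list above, but every value except the 300-second remote query timeout is an assumption, and the helper name is hypothetical.

```python
# Flag names come from the list above. Values are assumptions for illustration,
# except remote_query_timeout, which the text sets to 300 seconds.
DEFAULT_FLAGS = {
    "postgresql": {
        "log_duration": "on",
        "pgaudit.log": "all",
        "log_hostname": "on",
        "log_checkpoints": "on",
    },
    "mysql": {
        "general_log": "on",
        "skip_show_database": "on",
        "wait_timeout": "28800",
    },
    "sqlserver": {
        "1204": "on",
        "remote_access": "on",
        "remote_query_timeout": "300",
    },
}


def to_database_flags(engine):
    """Flatten the flags of one engine into a list of name/value records."""
    return [{"name": name, "value": value} for name, value in DEFAULT_FLAGS[engine].items()]


for flag in to_database_flags("sqlserver"):
    print(flag["name"], "=", flag["value"])
```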

-

Private Network Configuration:

- private_network_url: Specifies the private network URL used to connect securely to the Cloud SQL instance from within your network.

-

Maintenance Window: To minimize downtime, the maintenance window is configured as follows:

- Day: Sunday

- Hour: Midnight (UTC)

- Update Track: Set to "stable" to receive stable updates.
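A small sketch of how this window could be represented and validated in code. The class and field names are hypothetical, and the day numbering (1 = Monday through 7 = Sunday) is an assumption that follows the convention of the Cloud SQL Admin API.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class MaintenanceWindow:
    day: int           # assumed numbering: 1 = Monday ... 7 = Sunday
    hour: int          # hour of day in UTC, 0-23
    update_track: str  # e.g. "stable"

    def __post_init__(self):
        if not 1 <= self.day <= 7:
            raise ValueError("day must be between 1 and 7")
        if not 0 <= self.hour <= 23:
            raise ValueError("hour must be between 0 and 23")


# Sunday at midnight UTC on the stable track, as described above.
DEFAULT_WINDOW = MaintenanceWindow(day=7, hour=0, update_track="stable")
print(asdict(DEFAULT_WINDOW))
```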

-

SSL Certificate Management: We’ve automated the management of SSL certificates for secure communication:

- Client Certificate: SSL certificates are created and managed for each SQL instance using Google Secret Manager to ensure encrypted communication.

- Password Management: Random, complex passwords are generated and securely stored using Secret Manager.

- Secret Storage: All sensitive information, including certificates and credentials, is stored in Secret Manager for added security.

References

Cloud SQL Overview

Connect to Cloud SQL from GKE

Connect to SQL from Cloud Run

Development Tools

Cloud Source

Service for hosting private code repositories. Cloud Source Repositories are fully featured, private Git repositories hosted on Google Cloud.

- Version control

- Secure storage

- Code collaboration

- Integrated CI/CD

- Branch management

- Access control

- Scalable hosting

Cloud Source Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| Repository Name | Name of the Cloud Source repository | Yes |

Default Configurations

No default configurations have been implemented.

References

Cloud Source Repository CLI

Cloud Workstations

A managed development environment that provides secure, scalable and accessible virtual desktops for coding. Cloud Workstations provides preconfigured, customizable and secure managed development environments on Google Cloud. Cloud Workstations is accessible through a browser-based IDE, from multiple local code editors (such as IntelliJ IDEA Ultimate or VS Code), or through SSH. Instead of manually setting up development environments, we can create a workstation configuration that specifies the environment in a reproducible way.

- Managed environment

- Scalable resources

- Secure access

- Integrated tools

- Custom configurations

- Remote development

- Collaborative features

Cloud Workstation Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| Instance Name | Name of the Cloud Workstations instance | Yes |

| Region | Region where the instance is located | Yes |

| GPU Type | Type of GPU attached to the instance | Yes |

| GPU Count | Number of GPUs assigned | Yes |

| Machine Type | Type of machine instance | Yes |

| Boot Disk Image | Image used for the boot disk | Yes |

| Boot Disk Size | Size of the boot disk (in GB) | Yes |

| Boot Disk Type | Type of boot disk (e.g., SSD, HDD) | Yes |

| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |

| Service Account | Service account associated with the instance | No |

References

IAM

Specialized Service Account

A Specialized Service Account is a special type of non-human identity designed to provide a secure and manageable way for your applications, services, and automation tools to interact with our platform. Think of it as a dedicated identity for your code, allowing it to authenticate and perform actions on your behalf. It provides secure, consistent, and fully automated access management for your applications and services.

This catalogue creates the specialized service account if it does not already exist. If the specialized service account already exists, the catalogue adds the new roles to it.

Specialized Service Account Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| Service Account | Name of the service account | Yes |

| Roles | Roles needed to be attached | Yes |

Default Configurations

-

Service Account: It is recommended to use a descriptive name for the service account. Note that the name must be at least 6 and at most 30 characters long. The service account is created in the selected tenant project.

-

Roles: At most 50 roles can be added in a single request. To add more than 50 roles, first create the specialized service account with 50 roles, then submit the catalogue again with the same name and the remaining roles. Note that higher-level permissions, such as Billing permissions, have been removed.
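The two limits above (name length and 50 roles per request) can be checked before submitting the form. The sketch below is illustrative only; the function names and the example role strings are hypothetical and not part of the platform.

```python
MAX_ROLES_PER_REQUEST = 50  # limit stated above
MIN_NAME_LENGTH = 6
MAX_NAME_LENGTH = 30


def validate_name(name):
    """Raise ValueError unless the name is 6 to 30 characters long."""
    if not MIN_NAME_LENGTH <= len(name) <= MAX_NAME_LENGTH:
        raise ValueError("service account name must be 6 to 30 characters long")
    return name


def batch_roles(roles, size=MAX_ROLES_PER_REQUEST):
    """Split roles into consecutive batches, one batch per catalogue request."""
    return [roles[i:i + size] for i in range(0, len(roles), size)]


# 120 hypothetical roles need three requests for the same service account name.
roles = [f"roles/example.role{i}" for i in range(120)]
name = validate_name("ml-pipeline-runner")
for number, batch in enumerate(batch_roles(roles), start=1):
    print(f"request {number}: {name} with {len(batch)} roles")
```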

Machine Learning/AI

Activeloop

Activeloop provides a multimodal AI-native database called Deep Lake that serves as a data infrastructure for AI, allowing organizations to store, search, and analyze vast amounts of text, image, and video data. It streamlines data management for AI by offering an intuitive platform to query data in natural language or SQL, automate indexing, and manage dataset versions like Git, enabling faster and more accurate AI model development and deployment. This catalogue deploys Activeloop NeoHorizon as a self-hosted docker-compose deployment, with multiple configuration options for different deployment scenarios.

- Multimodal search across formats

- Automated data indexing

- Fast and accurate retrieval

- Built for AI data analysis

Activeloop Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| VM Name | Name of the virtual machine | Yes |

| Region | Region where the VM will be deployed | Yes |

| Zone | Zone within the selected region | Yes |

| GPU Type | Type of GPU attached to the VM | Yes |

| GPU Count | Number of GPUs attached to the VM | Yes |

| Machine Type | Type of machine instance | Yes |

| Activeloop API Token | Token generated from Activeloop platform | Yes |

| OAuth API Token | OAuth API token from the Google Cloud Platform project | Yes |

| Boot Disk Image | Image used for the boot disk | Yes |

| Boot Disk Size | Size of the boot disk (in GB) | Yes |

| Boot Disk Type | Type of boot disk (e.g., SSD, HDD) | Yes |

| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |

| Service Account | Service account associated with the VM | No |

Default Configurations

-

Virtual Machine: The service is hosted on a Google Cloud Platform virtual machine that meets the following prerequisites:

- Docker Engine 20.10+

- Docker Compose 2.0+

- At least 8GB RAM available

- NVIDIA GPU with CUDA support (optional, for model services)

Note

- After the setup is created, it takes an additional 25-30 minutes for the service to be up.

- The disk should be greater than 200 GB.

-

Load Balancer: Each service is hosted behind a regional internal proxy network load balancer that has the same name as the deployed instance/service. You can view the load balancers in the Google Cloud Platform console.

-

Activeloop API Token:

-

To create a token, follow these steps:

- Sign up at chat.activeloop.ai

- Navigate to ⚙️ → API tokens, and create a token.

- Provide this token in the Activeloop service catalog form through the UI, which will then be exported to the .env file.

-

To rotate the token, follow these steps:

- Log in to chat.activeloop.ai

- Navigate to ⚙️ → API tokens, and create a new token.

- Update the .env file inside the VM, located at activeloop-self-hosted-resources/docker-compose/activeloop-neohorizon, with the newly created API token (you must be the root user), and restart the container.
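The .env update in the rotation steps could be scripted roughly as follows. This is a sketch under assumptions: the variable name `ACTIVELOOP_TOKEN` is hypothetical (check the actual key used in your .env file), and the demo writes to a temporary directory rather than the real path on the VM.

```python
import tempfile
from pathlib import Path


def set_env_value(env_path, key, value):
    """Replace KEY=... in a .env file, or append the line if the key is missing."""
    path = Path(env_path)
    lines = path.read_text().splitlines() if path.exists() else []
    updated, found = [], False
    for line in lines:
        if line.strip().startswith(f"{key}="):
            updated.append(f"{key}={value}")
            found = True
        else:
            updated.append(line)
    if not found:
        updated.append(f"{key}={value}")
    path.write_text("\n".join(updated) + "\n")


# Demo against a throwaway file; ACTIVELOOP_TOKEN is a hypothetical key name.
with tempfile.TemporaryDirectory() as tmp:
    env_file = Path(tmp) / ".env"
    env_file.write_text("ACTIVELOOP_TOKEN=old-token\nOTHER=1\n")
    set_env_value(env_file, "ACTIVELOOP_TOKEN", "new-token")
    result = env_file.read_text()

print(result)
```

After the file is updated, the container still has to be restarted for the new token to take effect, as described in the steps above.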

-

References

Jupyter Hub

A multi-user platform for hosting Jupyter notebooks in a shared environment. A customized solution that provides a JupyterLab experience with user isolation. Recommended for sharing a single instance among multiple users for collaboration.

- Collaborative notebooks

- Scalable environment

- User management

- Customizable environments

- Centralized access

- Secure authentication

- Resource sharing

JupyterHub Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| Instance Name | Name of the virtual machine | Yes |

| Region | Region where the VM will be deployed | Yes |

| Zone | Zone within the selected region | Yes |

| GPU Type | Type of GPU attached to the VM | Yes |

| GPU Count | Number of GPUs attached to the VM | Yes |

| Machine Type | Type of machine instance | Yes |

| Install Croissant | If checked, it will install Croissant Library | No |

| Boot Disk Image | Image used for the boot disk | Yes |

| Boot Disk Size | Size of the boot disk (in GB) | Yes |

| Boot Disk Type | Type of boot disk (e.g., SSD, HDD) | Yes |

| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |

| Service Account | Service account associated with the VM | No |

Default Configurations

-

Network Tags: The following tags are applied to enable necessary functionality for the JupyterHub service:

- allow-health-checks: Ensures health checks are allowed for monitoring the service.

- allow-iap: Enables Identity-Aware Proxy (IAP) for secure access.

-

Startup Script:

A custom startup script is used to configure JupyterHub with the necessary user settings and URLs. Key parameters include:

-

user_list: List of users with access.

-

user_email: Email of the requesting user. By adding the users in this list, the users will be able to add the users on JupyterHub Notebook.

-

admin_email: Email of the JupyterHub admin. This will give admin level permission for the users on JupyterHub service. Please modify this list responsibly.

Note

Users of the instance will be responsible for managing the user access to the notebooks and are advised to share the notebooks with registered users only.

-

-

Custom Metadata:

- custom-proxy-url: This metadata key stores the link to the notebook URL hosting the JupyterHub server. This value is referred to as CUSTOM_PROXY_URL from here on.

- startup-script: This metadata key stores the startup script used for JupyterHub, as described above.

- install-nvidia-driver: This ensures that NVIDIA drivers are installed on the instance if GPUs are attached. If you face any issues with the NVIDIA drivers, reach out to our support team.

-

-

Proxy URLs:

- Each JupyterHub instance is configured to host custom services on proxy ports. Ports 8002-8006 are enabled for hosting the services. Please ensure that the hosted services are authenticated for better security.

- The service load balancers are attached to Cloud Armor policies.

- Naming convention of the URL: $CUSTOM_PROXY_URL/$INSTANCE_NAME/proxy/{8002-8006}, where CUSTOM_PROXY_URL is defined in the custom metadata.
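The URL naming convention can be sketched as a small helper. The function name and the example host and instance name are hypothetical; the port range and path layout follow the convention stated above.

```python
PROXY_PORTS = range(8002, 8007)  # ports 8002-8006 inclusive


def proxy_url(custom_proxy_url, instance_name, port):
    """Build the URL of a custom service hosted on one of the proxy ports."""
    if port not in PROXY_PORTS:
        raise ValueError("port must be between 8002 and 8006")
    return f"{custom_proxy_url.rstrip('/')}/{instance_name}/proxy/{port}"


# Hypothetical values for CUSTOM_PROXY_URL and the instance name.
print(proxy_url("https://notebooks.example.com/", "my-hub", 8002))
# https://notebooks.example.com/my-hub/proxy/8002
```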

-

Load Balancers:

- Each service is hosted behind a load balancer that has the same name as the deployed instance/service. You can view it under the Load balancing section in Google Cloud Platform.

-

Croissant Library: Croissant is a high-level format for machine learning datasets that combines metadata, resource file descriptions, data structure, and default ML semantics into a single file; it works with existing datasets to make them easier to find, use, and support with tools. It can be installed by enabling the 'Install Croissant' checkbox. The library is installed by the startup script under the '/opt/conda/' path.

Data Security Guidelines:

Our platform is committed to ensuring the security of your data within the project environment. However, it is your responsibility to maintain the confidentiality of your data and prevent it from being shared outside of the project.

- Do not share your Jupyter Notebooks with anyone outside of the project.

- Do not store your data outside of the project resources created. This includes sharing them online, on personal devices, or through any other means.

- Be mindful of the information you include in your notebooks. Avoid storing sensitive data, such as passwords or personal information, directly within the notebooks.

Note

By using this platform, you agree to be responsible for the security of your data and to comply with these guidelines.

If you have any questions or concerns about data security, please contact our support team.

Click here for learning tools and tips for working with Notebooks.

References

Jupyter service Overview

Croissant Library

MLflow

MLflow is an open-source platform designed to streamline the machine learning lifecycle. This service (often referred to as the MLflow Tracking Server) acts as a central hub for:

- Experiment Tracking: Logging and comparing parameters, metrics, and artifacts from different ML runs.

- Model Management: Registering, versioning, and managing the lifecycle of trained models.

- Artifact Storage: Storing models, data, plots, and other outputs associated with ML experiments.

It provides a UI for visualization and APIs for programmatic interaction, helping teams collaborate and reproduce results more effectively.

MLflow Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| Cloud Run Name | Name for your deployment of MLflow service. | Yes |

| Region | Region where the MLflow service will be deployed. | Yes |

| Instance size | Instance size for metadata server for MLflow. | Yes |

| Version | Version of MLflow service to be deployed. | Yes |

| CPU | CPU allocation for the MLflow service. Defaulted to 1 CPU. | No |

| Memory | Memory allocation for MLflow service. Defaulted to 1 Gi. | No |

| Port | Port number used for MLflow Image Version. Defaulted to 8080. | No |

| Service Account | Service account associated with the service. | No |

| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |

| Authentication Configuration | Set Cloud Run Service's Security | No |

Default Configurations

This MLflow instance is deployed on Google Cloud Platform, providing a robust and scalable platform for your machine learning lifecycle.

- Authentication Configuration: All the authentication configurations described for the Cloud Run service catalogue in the Compute section apply here as well.

Key Components:

- Serverless MLflow Service: The core MLflow service runs on Cloud Run, enabling automatic scaling, high availability, and pay-per-use billing without server management.

- Reliable Artifact Storage: All MLflow artifacts (e.g., models, plots, data) are securely stored in a Cloud Storage (GCS) bucket, ensuring durability and global accessibility.

- Managed Metadata Database: Cloud SQL (PostgreSQL) provides a fully managed, high-performance database for MLflow's metadata, ensuring reliable tracking of experiments, runs, and metrics.

- Accessibility: The MLflow UI and API are accessible via the Cloud Run service URL. This deployment is configured to be internal to this Google Cloud Platform project, enhancing security by limiting external exposure.
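As a rough illustration of programmatic access, the sketch below prepares (but does not send) a request to the MLflow REST API behind the Cloud Run service URL. It assumes the service requires an identity token in a Bearer header, which depends on the authentication configuration you selected; the service URL and token are placeholders, and the helper name is hypothetical.

```python
import json
import urllib.request


def build_search_experiments_request(service_url, identity_token, max_results=10):
    """Prepare a POST to the MLflow experiments search endpoint (not sent here)."""
    body = json.dumps({"max_results": max_results}).encode("utf-8")
    return urllib.request.Request(
        url=f"{service_url.rstrip('/')}/api/2.0/mlflow/experiments/search",
        data=body,
        headers={
            # Assumption: the Cloud Run service expects an identity token.
            "Authorization": f"Bearer {identity_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder URL and token; replace with the Cloud Run service URL of your deployment.
request = build_search_experiments_request("https://mlflow.example.internal/", "token-123", 5)
print(request.get_method(), request.full_url)
```

Because the deployment is internal to the project, a request like this would have to be sent from a resource inside that network, for example with `urllib.request.urlopen(request)`.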

References

MIS

The Medical Imaging Suite (MIS) is a comprehensive system for capturing, managing and analyzing medical images to enhance diagnostic accuracy.

- Efficient workflow

- Accurate diagnosis

- Seamless data access

- 3D imaging support

- Remote collaboration

- EHR integration

MIS Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| Instance Name | Name of the MIS instance | Yes |

| Region | Region where the instance is located | Yes |

| Zone | Zone within the selected region | Yes |

| GPU Type | Type of GPU attached to the instance | Yes |

| GPU Count | Number of GPUs attached | Yes |

| Machine Type | Type of machine instance | Yes |

| Install Croissant | If checked, it will install Croissant Library | No |

| Boot Disk Image | Image used for the boot disk | Yes |

| Boot Disk Size | Size of the boot disk (in GB) | Yes |

| Boot Disk Type | Type of boot disk (e.g., SSD, HDD) | Yes |

| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |

| Service Account | Service account associated with the instance | No |

As MIS is a version of JupyterHub, all the configurations of the JupyterHub service are applicable to MIS.

Default Configurations

-

Tags:

The following tags are applied to enable necessary functionality for the JupyterHub service:

- allow-health-checks: Ensures health checks are allowed for monitoring the service.

- allow-iap: Enables Identity-Aware Proxy (IAP) for secure access.

-

Startup Script:

A custom startup script is used to configure JupyterHub with the necessary user settings and URLs. Key parameters include:

-

user_list: List of users with access.

-

user_email: Email of the requesting user. By adding the users in this list, the users will be able to add the users on JupyterHub Notebook.

-

admin_email: Email of the JupyterHub admin. This will give admin level permission for the users on JupyterHub service. Please modify this list responsibly.

Note

Users of the instance will be responsible for managing the user access to the notebooks and are advised to share the notebooks with registered users only.

-

-

Custom Metadata:

-

custom-proxy-url: This metadata key stores the link to the notebook URL hosting the JupyterHub server.

-

startup-script: This metadata key stores the startup script used for the JupyterHub as described above.

-

install-nvidia-driver: This ensures that NVIDIA drivers are installed on the instance if GPUs are attached. If you face any issues with the NVIDIA drivers, reach out to our support team via the feedback tool.

-

-

Croissant Library: Croissant is a high-level format for machine learning datasets that combines metadata, resource file descriptions, data structure, and default ML semantics into a single file; it works with existing datasets to make them easier to find, use, and support with tools. It can be installed by enabling the 'Install Croissant' checkbox. The library is installed by the startup script under the '/opt/conda/' path.

References

Medical Imaging Suite

Croissant Library

Vertex AI Workbench

This is a JupyterLab on a pre-configured virtual machine located inside Vertex AI. Ideal to get started within a few minutes right from the browser. Vertex AI Workbench is a tool provided by Google Cloud for developers and data scientists working to build machine learning models. It’s based on Jupyter notebooks, which are interactive documents that allow you to write code, run it and see the results, all in one place. Think of it as a workspace for data scientists and engineers to do their job easily. You can use Vertex AI Workbench to access various Google Cloud services, making it easier to build and deploy machine learning models without leaving the notebook environment.

- Scalable

- ML workflow automation

- Custom environments

Vertex AI Workbench Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| VM Name | Name of the Vertex AI Workbench virtual machine | Yes |

| Region | Region where the Vertex AI Workbench VM is located | Yes |

| Zone | Zone within the selected region | Yes |

| GPU Type | Type of Vertex AI Workbench GPU | Yes |

| GPU Count | Count of GPU | Yes |

| Machine Type | Type of Vertex AI Workbench machine | Yes |

| Install Croissant | If checked, it will install Croissant Library | No |

| Boot Disk Size | Size of the boot disk | Yes |

| Boot Disk Type | Type of the boot disk | Yes |

| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |

| Service Account | Service account associated with the Vertex AI Workbench VM | No |

Default Configurations

-

Enable Required APIs:

The necessary APIs (notebooks.googleapis.com, aiplatform.googleapis.com) for Vertex AI Workbench will be enabled to ensure smooth operation and functionality of the service.

-

Network and IP Settings:

a. Disable Public IP: All notebooks created through the catalogue have public IP access disabled (disable public ip = true), so the instance is only accessible through private networking for enhanced security.

b. Enable IP Forwarding: IP forwarding will be enabled (enable ip forwarding = true), allowing the instance to forward packets to other destinations, which can be useful for certain networking scenarios.

c. Proxy Access: Proxy access will remain enabled (disable proxy access = false), meaning that proxy services can still be used for accessing the instance if needed.

-

Network Tags:

The following tags will be assigned to the instance to help with identification, access control, and categorization:

a. allow-iap: This tag allows access via Identity-Aware Proxy (IAP), which adds an extra layer of security for SSH access.

b. deeplearning-vm: This tag indicates that the instance is configured for deep learning workloads.

c. notebook-instance: This tag designates the instance as a notebook for development and experimentation.

-

Croissant Library:

Croissant is a high-level format for machine learning datasets that combines metadata, resource file descriptions, data structure, and default ML semantics into a single file; it works with existing datasets to make them easier to find, use, and support with tools. It can be installed by enabling the 'Install Croissant' checkbox. The library is installed by the startup script under the '/opt/conda/' path.

Click here to learn tools and tips for working with Notebooks.

References

SSH into Vertex AI Workbenches

3D Slicer

3D Slicer is a Swiss Army knife of medical imaging research. It can load, visualize and analyze various file formats and data modalities. It is continuously developed by the research community, and its extension manager contains more than 150 extensions. 3D Slicer is an open-source software platform for medical image informatics, image processing and three-dimensional visualization. In Google Cloud Platform, it can be deployed as part of custom computing or visualization solutions to support medical and scientific research. Google Cloud Platform provides the infrastructure to host and process 3D Slicer workloads.

- Medical imaging

- 3D visualization

- Open-source

- Multi-modality support

- Advanced analytics

- Interactive tools

- Extensible modules

3D Slicer Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| VM Name | Name of the virtual machine | Yes |

| Region | Region where the VM will be deployed | Yes |

| Zone | Zone within the selected region | Yes |

| GPU Type | Type of GPU attached to the VM | Yes |

| GPU Count | Number of GPUs attached to the VM | Yes |

| Machine Type | Type of machine instance | Yes |

| Disk Name | Name of the VM disk | Yes |

| Boot Disk Image | Image used for the boot disk | Yes |

| Boot Disk Size | Size of the boot disk (in GB) | Yes |

| Boot Disk Type | Type of boot disk (e.g., SSD, HDD) | Yes |

| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |

| Service Account | Service account associated with the VM | No |

Access 3D Slicer

The 3D Slicer service is deployed as a desktop service. To access the application, connect over RDP to the Windows desktop on Google Cloud Platform and use the application from there. The connection can be made using IAP Desktop.

Default Configurations

-

Mount Folder:

- mount_folder: Specifies the folder where the service's data is mounted. By default, this is set to D:/, but it can be customized based on your requirements. By default, the default bucket created per project is mounted to the D:\data folder.

- KMS Key for Disk Encryption: The service supports encryption using a Key Management Service (KMS) key. The KMS key's self-link can be specified for secure data encryption (kms_key_self_link). If no key is provided, a default configuration is used.

-

Tags:

- allow-iap: This tag enables Identity-Aware Proxy (IAP), providing secure access to the 3D Slicer service, allowing only authorized users to connect.

-

Data Disk:

- By default, an additional disk is attached to the 3D Slicer machine and mounted at "D:/". The data disk is used to store annotation data and for bucket mounting.

References

Data Services MONAI

MONAI Service is a comprehensive medical imaging analysis platform that leverages the MONAI (Medical Open Network for AI) framework to streamline your end-to-end workflow for medical image processing, model training, and deployment. The service provides a unified interface for data ingestion, model training, inference, and results management with seamless integration capabilities for both research and clinical environments.

Data Services MONAI Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| Resource Name | Random string used as a suffix for every resource created | No |

| Region | Region where the VM will be deployed | Yes |

| Zone | Zone within the selected region | Yes |

| GPU Required | GPU is required or not | Yes |

Default Configurations

-

Virtual Machine: It hosts the MONAI server, which runs the different annotation models. Depending on the use case, a GPU can be attached.

Note

GPU attachment depends on the availability of the GPU in a particular region as well as on GPU quotas at that time. Even when a GPU is requested through the API, it may not be attached because of quota or availability issues on the Google Cloud Platform side. A fallback preference has been set for the case where a GPU is not available. The preference, from highest to lowest, is:

- Nvidia Tesla V100

- Nvidia Tesla P100

- Nvidia Tesla T4

- Nvidia Tesla P4
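The fallback order above amounts to picking the first preferred GPU that is currently available. A minimal sketch, assuming availability is already known as a set of accelerator type names; the function name is hypothetical, and how the catalogue actually queries quota and availability is not described in this document.

```python
# Preference from highest to lowest, as listed above, using the
# Compute Engine accelerator type names for these GPUs.
GPU_PREFERENCE = [
    "nvidia-tesla-v100",
    "nvidia-tesla-p100",
    "nvidia-tesla-t4",
    "nvidia-tesla-p4",
]


def pick_gpu(available):
    """Return the most preferred available GPU type, or None if none is available."""
    for gpu in GPU_PREFERENCE:
        if gpu in available:
            return gpu
    return None


print(pick_gpu({"nvidia-tesla-t4", "nvidia-tesla-p4"}))  # nvidia-tesla-t4
print(pick_gpu(set()))  # None
```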

-

Cloud Run: Three Cloud Run services are used:

- Data Service API: To download data from different endpoints.

- Orthanc API: Used for storing open-source images such as DICOM images.

- OHIF API: Hosts the UI and is integrated with the Data Service API and the Orthanc API.

-

GCS Buckets: Two buckets are used:

- Validated Bucket: It is mounted with Orthanc Server.

- Landing Bucket: It is used by Orthanc Cloud function.

Buckets have all the security features enabled.

-

Cloud Function: A Pub/Sub-triggered Cloud Function named 'Orthanc Upload CF'. It is triggered when an image is uploaded to the landing bucket and, in turn, uploads the image to the Orthanc server.

-

Load Balancer: Each service is hosted behind a load balancer that has the same name suffix as the deployed instance/service. You can view the load balancers in the Google Cloud Platform console.

Note

After the setup is created, it takes an additional 5-10 minutes for the service to be up.

References

Deployable Package Data Services

The Deployable Data Services Package is a complete cloud-based solution designed to handle medical imaging and other data files efficiently. This package takes raw data files like DICOM medical images and WSI (Whole Slide Imaging) files and transforms them into organized, useful information that can be easily analyzed and visualized. It helps to collectively de-identify, transform, and convert raw DICOM, WSI, and other files into useful, structured data.

Deployable Package Data Services Parameters

| Parameter | Description | Mandatory |

|---|---|---|

| Resource Name | Random string used as a suffix for every resource created | No |

| Region | Region where the VM will be deployed | Yes |

| Zone | Zone within the selected region | Yes |

Default Configurations

-

Cloud Functions: Ten Cloud Functions are used, and a few common Cloud Functions are created for each layer. The Cloud Functions are:

- Data Import Batch Update: Updates the batch status once the STS job is completed in the artifact scanner project.

- Data Import Preprocessing Dataflow: Triggers the Data Import Preprocessing Dataflow template.

- Data Import Recurring Trigger: Triggers the data transfer endpoint based on scheduler requests.

- Data Processing Dataflow: Triggers the Data Processing Dataflow template.

- Data Processing File Loggers: Triggered when an upload event happens on the 'Landing Bucket'; inserts a raw file path record for the specific ingestion into the ingestion file logger table.

- Metadata Dataflow Trigger: Triggers the Metadata Dataflow template.

- Metadata File Logger: Triggered when an upload event happens on the 'Metadata Bucket'; inserts a metadata file path record for the specific ingestion into the metadata file logger table.

- Metadata Push Tags: Pushes WSI tags.

- Asset Statistics Update: Updates asset statistics based on the source and connection name.

- Data Cleanup CF: Regularly cleans up SQL records from the ingestion file logger table and the metadata file logger table.

-

Cloud Dataflow: Four Cloud Dataflow pipelines are used for ETL jobs:

- Data import Preprocessing template: For zipped files, it first extracts (unzips) the file and uploads the extracted files into the 'Landing Bucket'.

- Data processing template: It de-identifies the extracted files present in the 'Landing Bucket' according to the given rule sheet and validates them. After all the steps, it pushes its changes to the 'Validated Bucket' and to the 'Metadata Bucket' (in the raw directory).

- Metadata template: It ingests data into Elasticsearch.

- Data transfer template: It transfers the data from the 'Validated Bucket' to the destination bucket specified by the user.

SQL: PostgreSQL is the primary database engine. It is used mainly for logging and for monitoring the flow of the pipelines.

GKE: A Standard GKE cluster hosts the following services:

- Data Service API: It is the backend for ingestion and data processing workflows and also exposes REST endpoints for tenant-specific queries (e.g., WSI, DICOM ingestion).

- Data Service UI: It is the front end for tenant users to interact with data workflows.

- Elasticsearch (ES): It is used for dynamic data indexing and retrieval, and it holds metadata for DICOM and WSI data.

GCS Buckets: 4 GCS buckets are used:

- Landing Bucket

- Validated Bucket

- Metadata Bucket

- Intermediate Bucket

Buckets have all the security features enabled.

Pub/Sub: 2 Pub/Sub topics are used to trigger the Cloud Dataflow pipelines on an upload event on the 'Landing Bucket' and the 'Metadata Bucket'.

Scheduler: 4 schedulers trigger different Cloud Functions. The schedulers are:

- Data Processing: Runs every 6 hours and triggers the Data Processing Dataflow Cloud Function.

- Metadata Processing: Runs every 6 hours and triggers the Metadata Dataflow Trigger Cloud Function.

- Data Cleanup: Runs daily and triggers the Data Cleanup CF Cloud Function.

- Asset Statistics: Runs every 6 hours and triggers the Asset Statistics Update Cloud Function.
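The cadences above could be written as cron expressions along the following lines. This is only a sketch: the source states the intervals (every 6 hours, daily) but not the exact minute or hour at which each job fires, so the expressions below are assumptions, and the small helper only understands the two hour-field forms used here.

```python
# Hypothetical cron expressions for the four schedulers; the start times
# (minute 0, midnight) are assumptions, only the intervals come from the text.
SCHEDULES = {
    "Data Processing": {"cron": "0 */6 * * *", "target": "Data Processing Dataflow"},
    "Metadata Processing": {"cron": "0 */6 * * *", "target": "Metadata Dataflow Trigger"},
    "Data Cleanup": {"cron": "0 0 * * *", "target": "Data Cleanup CF"},
    "Asset Statistics": {"cron": "0 */6 * * *", "target": "Asset Statistics Update"},
}


def hours_for(cron: str) -> list:
    """Expand the hour field of a five-field cron expression.

    Supports only a fixed hour ("0") or a step ("*/6"), which is all this sketch needs.
    """
    hour_field = cron.split()[1]
    if hour_field.startswith("*/"):
        return list(range(0, 24, int(hour_field[2:])))
    return [int(hour_field)]


def runs_per_day(name: str) -> int:
    """Number of daily runs for the named scheduler."""
    return len(hours_for(SCHEDULES[name]["cron"]))
```

With these assumed expressions, the three 6-hourly schedulers each fire four times a day and Data Cleanup fires once.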

References

Storage

Artifact Registry

Repository for managing and storing container images. Container images greatly increase reproducibility and security in AI development. They are essential for many cloud-based services. Google Cloud's Artifact Registry is a fully managed service that allows you to store, manage and secure your build artifacts, such as container images and dependencies like libraries, packages and binaries. It supports a variety of formats like Docker, Maven and npm and is designed to scale efficiently while ensuring security and reliability. It is a key part of the DevOps lifecycle, helping teams organize and manage artifacts generated during the build process.

- Centralized storage

- Multi-format support

- Secure access

- Version tracking

- Seamless integration

- Dependency management

- Scalable repository

Artifact Registry Parameters

| Parameter | Description | Mandatory |
|---|---|---|
| Artifact Registry Name | Name of the Artifact Registry | Yes |
| Format | Format of the artifacts (e.g., Docker) | Yes |
| Location | Region where the Artifact Registry is located | Yes |
| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |
| Cleanup Policy | Policy for cleaning up old or unused artifacts | No |

Default Configurations

Enable APIs:

- The Artifact Registry API (artifactregistry.googleapis.com) is enabled, allowing the service to manage and store container images, libraries, and artifacts effectively. This API is essential for the Artifact Registry to operate within your Google Cloud environment.

Cleanup Policy:

- Cleanup Policy Dry Run: By default, dry run is set to false, meaning the cleanup policy actively removes old or unused versions of artifacts in the repository to manage storage efficiently. If it is set to true, the cleanup runs in "dry run" mode, which prevents actual deletion of versions and lets you review what would be cleaned up before making any changes.
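The difference between the two dry-run settings can be sketched in a few lines. This is a simplified local model, not the Artifact Registry API: the `Version` type and the age-based rule are hypothetical stand-ins for whatever conditions the real cleanup policy defines.

```python
from dataclasses import dataclass


@dataclass
class Version:
    tag: str
    age_days: int


def run_cleanup(versions, max_age_days, dry_run=False):
    """Return (kept, affected) for a hypothetical age-based cleanup rule.

    With dry_run=True nothing is removed and `affected` lists what would be
    deleted; with dry_run=False the affected versions are dropped from `kept`.
    """
    affected = [v for v in versions if v.age_days > max_age_days]
    if dry_run:
        return list(versions), affected
    kept = [v for v in versions if v.age_days <= max_age_days]
    return kept, affected
```

Running the same rule first with `dry_run=True` and then with the default gives a preview of the deletions before they take effect.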

References

Grant Repo specific Permissions ⧉

Cloud Storage

A service that allows data to be stored and accessed remotely over the internet. Cloud Storage allows world-wide storage and retrieval of any amount of data at any time. You can use Cloud Storage for a range of scenarios, including serving website content and storing data for archival and disaster recovery.

- Infinite storage volume

- Remote access

- Data backup

- Secure encryption

- Cost-efficient

- Sync across devices

- Collaboration support

Cloud Storage Parameters

| Parameter | Description | Mandatory |
|---|---|---|
| Bucket Name | Name of the Cloud Storage bucket | Yes |
| Region | Region where the storage bucket will be located | Yes |
| Storage Class | Type of storage class (e.g., STANDARD, NEARLINE, COLDLINE, ARCHIVE) | Yes |
| GCS Lifecycle | Lifecycle management policies for the bucket | Yes |
| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |

Bucket Name

Bucket names are globally unique and cannot be changed once created. We follow the ${USER_DEFINED_SERVICE_NAME}-${RANDOM_ID} naming convention for all buckets created from Service Catalogues. Click here ⧉ for more details.
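A minimal sketch of how a name following this convention could be built and sanity-checked is shown below. The function name and the 8-character hexadecimal random ID are assumptions (the source does not say how the ID is generated), and the pattern covers only the basic Cloud Storage rules for names without dots: 3 to 63 characters, lowercase letters, digits, dashes and underscores, starting and ending with a letter or digit.

```python
import re
import secrets
from typing import Optional

# Basic Cloud Storage naming rules for dot-free names (a subset of the full rules).
_BUCKET_NAME = re.compile(r"^[a-z0-9][a-z0-9_-]{1,61}[a-z0-9]$")


def build_bucket_name(service_name: str, random_id: Optional[str] = None) -> str:
    """Combine a user-defined service name with a random ID and validate the result."""
    # Assumption: the random ID is 8 hexadecimal characters.
    random_id = random_id or secrets.token_hex(4)
    name = f"{service_name}-{random_id}"
    if not _BUCKET_NAME.match(name):
        raise ValueError(f"invalid bucket name: {name!r}")
    return name
```

Because the random suffix is appended after the user-defined part, two requests with the same service name are unlikely to collide on the globally unique bucket namespace.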

Region

Currently, service catalogues support provisioning of regional buckets, which are optimal for regional workloads. If a use case requires multi-regional buckets, users can create them from the Google Cloud Storage console.

Note

To understand which location best suits your use case, please refer to location considerations ⧉ and location recommendation ⧉.

Storage Class

You can select one of the storage classes below while provisioning the buckets:

| Storage Class | Monthly Availability | Minimum Storage Duration | Retrieval Fees | Best For |
|---|---|---|---|---|
| STANDARD | 99.99% in regions | None | None | Frequently accessed ("hot" data) |
| NEARLINE | 99.9% in regions | 30 days | Yes | Highly durable storage service for storing infrequently accessed data |
| COLDLINE | 99.9% in regions | 90 days | Yes | Highly durable storage service for infrequently accessed data with slightly lower availability |
| ARCHIVE | 99.9% in regions | 365 days | Yes | Highly durable storage service for data archiving, online backup, and disaster recovery |

Click here ⧉ for more information.

Default Lifecycle policy

The Lifecycle Policy can be applied during creation of the buckets. By default the following policy is applied to the buckets created from Service Catalogues.

| Action | Object condition |
|---|---|
| Set to Nearline | 30+ days since object was created; storage class matches Standard |
| Set to Coldline | 90+ days since object was created; storage class matches Nearline |
| Set to Archive | 365+ days since object was created; storage class matches Coldline |
| Delete object | 730+ days since object was created; storage class matches Archive |

Note

The policy above is applied as a standard to optimize the data lifecycle. Users can update the policies according to their requirements. Refer here ⧉ to learn more about lifecycle policies.
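For readers who want to adapt the default policy, the four rules can be written in the JSON structure that Cloud Storage uses for lifecycle configurations. The sketch below builds that structure in Python and adds a small helper, `matching_action`, which is a hypothetical name and a simplified model: it returns the first rule whose conditions match, which is sufficient here because each rule targets a different storage class.

```python
import json

# The default policy expressed as a Cloud Storage lifecycle configuration.
DEFAULT_LIFECYCLE = {
    "rule": [
        {"action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
         "condition": {"age": 30, "matchesStorageClass": ["STANDARD"]}},
        {"action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
         "condition": {"age": 90, "matchesStorageClass": ["NEARLINE"]}},
        {"action": {"type": "SetStorageClass", "storageClass": "ARCHIVE"},
         "condition": {"age": 365, "matchesStorageClass": ["COLDLINE"]}},
        {"action": {"type": "Delete"},
         "condition": {"age": 730, "matchesStorageClass": ["ARCHIVE"]}},
    ]
}


def lifecycle_json(policy=DEFAULT_LIFECYCLE) -> str:
    """Serialize the policy, e.g. to save it to a file and apply it to a bucket."""
    return json.dumps(policy, indent=2)


def matching_action(age_days, storage_class, policy=DEFAULT_LIFECYCLE):
    """Return the action of the first rule matching the object's age and class, else None."""
    for rule in policy["rule"]:
        cond = rule["condition"]
        if age_days >= cond["age"] and storage_class in cond["matchesStorageClass"]:
            return rule["action"]
    return None
```

Since `age` counts days from object creation, an object moves through the classes at 30, 90 and 365 days and is deleted after 730 days under this policy.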

Customer-managed encryption key (CMEK)

Cloud Storage always encrypts your data on the server side, before it is written to disk, at no additional charge. By default, Google-managed encryption is used. To increase data security, we recommend using customer-managed encryption keys (CMEK) as the server-side encryption for data-sensitive workloads.

Note

Client-side encryption: encryption that occurs before data is sent to Cloud Storage. Such data arrives at Cloud Storage already encrypted but also undergoes server-side encryption.

Default Configurations

Bucket Settings:

- Versioning Enabled: This feature will be enabled to keep a history of all object versions in the bucket. It allows you to recover objects that are overwritten or deleted, adding an extra layer of data protection.

- Uniform Bucket-Level Access: We will enforce uniform bucket-level access (uniform_bucket_level_access = true), meaning all access control will be managed at the bucket level rather than at the individual object level. This simplifies access management and improves security.

References

Discover Object Storage Gcloud Commands ⧉

Workflows

Cloud Composer

Workflow orchestration service built on Apache Airflow for scheduling and managing chains of jobs/functionalities. Cloud Composer is a fully managed workflow orchestration service built on Apache Airflow. It allows you to create, schedule, monitor and manage workflows, simplifying the automation of tasks across cloud services and on-premises systems. Composer integrates seamlessly with other Google Cloud services and provides automation for environment setup, letting you focus on your workflows rather than infrastructure management.

- Workflow automation

- Apache Airflow

- Scalable scheduling

- Integrated monitoring

- Dependency management

- Custom workflows

- Easy scaling

Cloud Composer Parameters

| Parameter | Description | Mandatory |
|---|---|---|
| Cloud Composer Cluster Name | Name of the Cloud Composer cluster | Yes |
| Region | Region where the Cloud Composer cluster is located | Yes |
| Resilience Mode | Mode of resilience for the Cloud Composer | Yes |
| Use Latest Version | Selects the latest version if enabled | No |
| Selected Image Version | Lists all compatible versions in selected region | Yes |
| Composer Major Version | Select desired Cloud Composer generation (if Use Latest Version is enabled) | Yes |
| IP Range Pods (Optional) | IP range for the pods in the environment | No |
| IP Range Services (Optional) | IP range for the services in the environment | No |
| Environment Size | Size of the environment (e.g., small, medium, large) | Yes |
| Service Account | Service account associated with the Cloud Composer | No |
| Customer-managed encryption key (CMEK) | Encryption key managed by the customer | No |
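One way to read the version-related rows of the table is sketched below as a small validation routine. All field names are hypothetical, and the interpretation is an assumption rather than documented platform behaviour: a major version is expected when Use Latest Version is enabled, and an explicit image version otherwise.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ComposerRequest:
    name: str
    region: str
    environment_size: str                          # "small", "medium" or "large"
    use_latest_version: bool = False
    composer_major_version: Optional[int] = None   # expected when use_latest_version is True
    selected_image_version: Optional[str] = None   # expected otherwise


def validate(req: ComposerRequest) -> List[str]:
    """Return a list of problems found in the request (empty if none)."""
    errors = []
    if req.environment_size not in {"small", "medium", "large"}:
        errors.append("environment_size must be small, medium or large")
    if req.use_latest_version:
        if req.composer_major_version not in (2, 3):
            errors.append("composer_major_version must be 2 or 3")
    elif not req.selected_image_version:
        errors.append("selected_image_version is required when use_latest_version is off")
    return errors
```

For example, a request with `use_latest_version=True` and `composer_major_version=3` passes this check without naming a specific image version.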

Default Configurations

IAM roles for Cloud Composer:

- Composer Worker Role: This assigns the roles/composer.worker role to a service account, allowing it to perform tasks required for the Cloud Composer environment.

Cloud Composer Generation:

- Cloud Composer 2: Cloud Composer 2 introduces a re-architected control plane and data plane, enabling auto-scaling of Airflow workers and reducing infrastructure overhead through a more efficient resource management model. It leverages GKE Autopilot for underlying infrastructure, simplifying cluster operations.

- Cloud Composer 3: Cloud Composer 3 builds upon this foundation by further decoupling Airflow components and optimizing resource allocation, allowing for more granular scaling and improved isolation of workloads. It enhances integration with Google Cloud services through native connectors and optimized data transfer mechanisms. It is recommended to use Cloud Composer 3 workflows.

Workload configurations: The environment's workload configurations vary based on the size of the environment (small, medium, large). These configurations dictate the amount of CPU, memory, and storage allocated for different components like:

- Scheduler: Manages the orchestration of tasks.

- Web Server: Provides the UI for managing workflows.

- Worker: Executes the actual tasks.

- Triggerer: Monitors deferred tasks and resumes them when their trigger conditions are met.

These configurations ensure that your environment is optimized based on your operational needs.

Private environment:

- Private Service Connect: This ensures the environment uses a private connection type for enhanced security by enabling private endpoints and connecting through a private subnetwork. It applies to Cloud Composer 2.

- For Cloud Composer 3, private environments require no configuration.

Dynamic workloads: The Composer environment dynamically adjusts its resources based on workload size using the configurations provided. This makes it flexible to scale with your operational requirements.

Node configuration: The environment nodes are configured with custom networking, including private IP allocation, subnetwork configuration, and optional encryption settings using a KMS key.

Resilience mode: The Composer environment can be set to different resilience levels to ensure high availability and fault tolerance, enhancing the reliability of your workflows.

References

Cloud Composer Overview ⧉

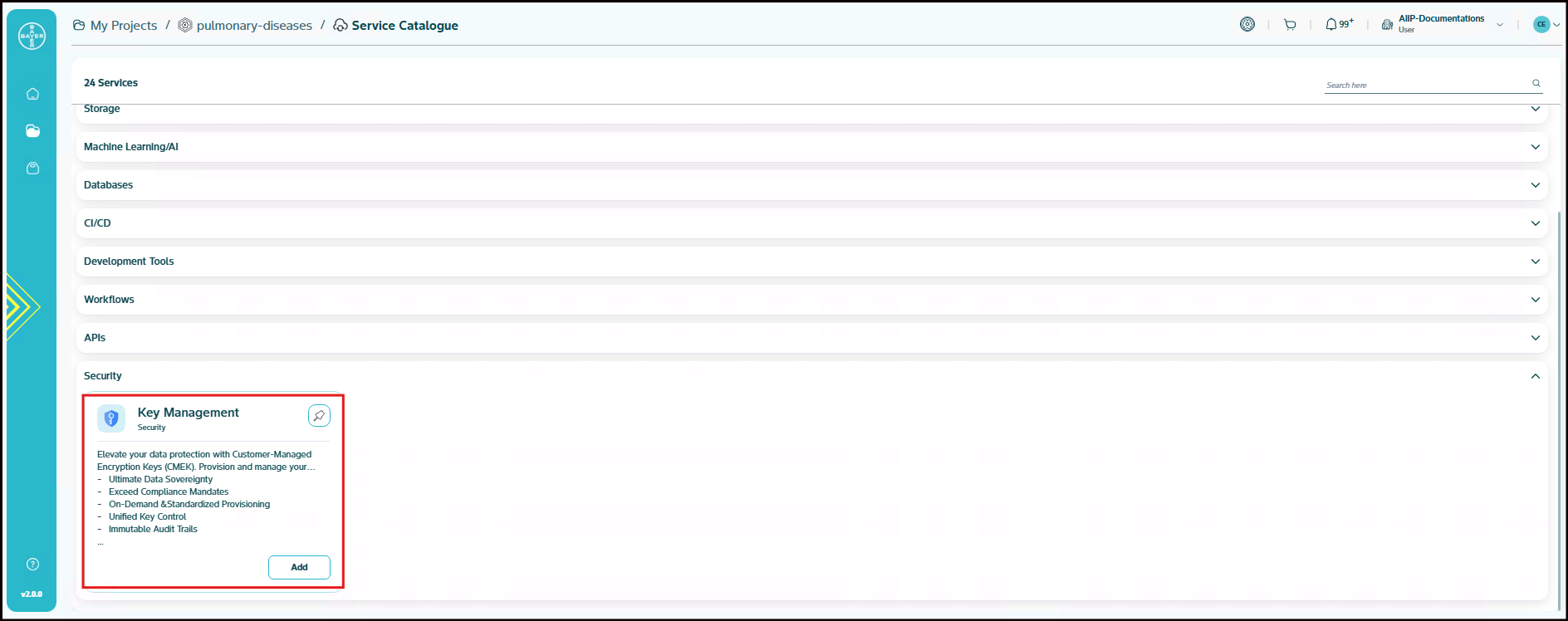

Security

Key Management